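💡 Topic : Dependency Parsing

📌 Key points

- Task : analyze the grammatical composition (syntax) of a sentence

- Dependency Parsing : identify the relations between words to derive the modification (grammatical) structure of a sentence

📌 Contents

1. What is Dependency Parsing

(1) Parsing

✔ Definition

- The process of analyzing the grammatical composition or syntax of each sentence

- A way of determining the relations among the words or constituents that make up a given sentence; depending on its purpose, parsing is divided into Constituency parsing and Dependency parsing

✔ Comparison

- Tokenizing : splitting an incoming sentence into meaningful units

- POS tagging : attaching a part-of-speech tag to each token

- Parsing : analyzing the sentence so that the result comes out as a tree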

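(2) Constituency Parsing (lecture 18)

✔ Definition

- Analyzes sentence structure by identifying the phrases that make up the sentence → captures the outward (surface) structure of the sentence

- Mainly used for languages with a relatively fixed word order, such as English

- Can be applied recursively

- (word) - (phrase) - (sentence)

✔ Basic assumption

- A sentence is viewed as being built from words (tokens) that group together into larger units

(3) Dependency Parsing ⭐⭐

✔ Definition

- Rather than the overall composition of the sentence, the goal is to identify relations between individual words, such as 'dependency' or 'modification' relations

- Preferred for languages with free word order or where sentence constituents can be omitted, such as Korean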

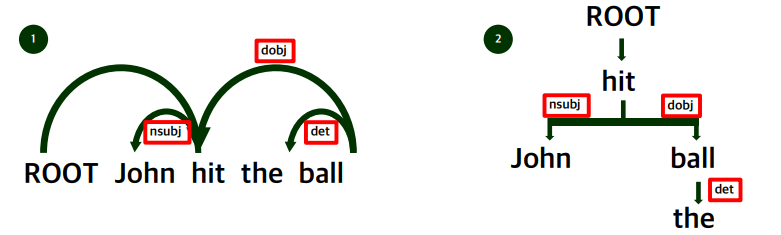

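✔ Output form

- Relations between individual words are shown with 'arrows' and 'labels'

- The modified word is called the head or governor, and the modifying word is called the dependent or modifier.

- Labels such as nsubj, dobj, and det mark the relation between each pair of words.

👀 Key point : Constituency parsing is a way to capture sentence structure; Dependency parsing is a way to capture relations between words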

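2. Why Dependency Parsing is needed

👀 Humans express and convey complex ideas by combining small words into larger units.

👀 To grasp the meaning of a sentence more accurately, use parsing to resolve ambiguity!

(1) Phrase Attachment Ambiguity

- Ambiguity where the meaning changes depending on which word an adjective phrase, verb phrase, prepositional phrase, etc. modifies (e.g., in "Scientists count whales from space", "from space" could attach to "count" or to "whales")

(2) Coordination Scope Ambiguity

- Ambiguity where the meaning changes depending on the scope of what a particular word modifies

- Leaves room for more than one interpretation

👀 Just as we break an English sentence with / when reading, bracket the modified part, and connect it with arrows, understanding language correctly requires understanding the structure of the sentence and knowing what is connected to what.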

3. Dependency Grammar

(1) Structure

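- Can be drawn in two forms: over the word sequence, or as a tree.

✔ Rules

- Arrows point from the modified word (head) to the modifying word (dependent).

- Example. The ball 👉 "the" modifies and "ball" is modified, so the arrow goes from ball to the

- The label on an arrow is the grammatical relation (dependency) between the two words, and the arrows never form a cycle → this is why the structure can be drawn as a tree

- A word that is not modified by any other word is made a dependent of a virtual node, ROOT, so that every word is the dependent of exactly one node.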
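
The rules above can be checked mechanically. The following is a minimal sketch, assuming a parse is stored as a list `heads` where `heads[i]` is the index of the head of word `i` and index 0 is ROOT. The function name and the "exactly one word attaches to ROOT" check are assumptions made for illustration (a common convention, not something stated in these notes).

```python
ROOT = 0  # index 0 stands for the virtual ROOT node


def is_valid_tree(heads):
    """heads[i] is the head index of word i (words are 1-based); heads[0] is unused."""
    n = len(heads) - 1
    # Assumed convention: exactly one word attaches directly to ROOT.
    if sum(1 for i in range(1, n + 1) if heads[i] == ROOT) != 1:
        return False
    # Following head links from any word must reach ROOT without looping.
    for i in range(1, n + 1):
        seen = set()
        node = i
        while node != ROOT:
            if node in seen:  # we came back to a word already visited: a cycle
                return False
            seen.add(node)
            node = heads[node]
    return True


# "I ate fish": "ate" attaches to ROOT, "I" and "fish" depend on "ate".
words = ["ROOT", "I", "ate", "fish"]
heads = [None, 2, 0, 2]
labels = [None, "nsubj", "root", "dobj"]
print(is_valid_tree(heads))
```

Storing one head per word enforces the "exactly one head" rule by construction, so only the ROOT count and the absence of cycles need an explicit check.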

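(2) General properties

✔ Properties

- Bilexical affinities : how plausible a relation between two words is given their meaning ( discussion → issue is a plausible relation )

- Dependency distance : the distance a dependency spans; dependencies mostly form between nearby words

- Intervening material : dependencies rarely span punctuation such as periods or semicolons

- Valency of heads : how many dependents a head usually takes on its left and right sides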
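
Two of these properties are easy to compute from a parse. The sketch below assumes the same hypothetical `heads` list representation (`heads[i]` is the head of word `i`, 0 is ROOT); both function names are made up for illustration.

```python
def dependency_distances(heads):
    """Distance |dependent - head| for every arc that does not start at ROOT."""
    return [abs(i - heads[i]) for i in range(1, len(heads)) if heads[i] != 0]


def valency(heads, head_index):
    """Number of dependents to the left and to the right of the given head."""
    dependents = [i for i in range(1, len(heads)) if heads[i] == head_index]
    left = sum(1 for i in dependents if i < head_index)
    right = sum(1 for i in dependents if i > head_index)
    return left, right


# "I ate fish": I <- ate -> fish, with "ate" attached to ROOT.
heads = [None, 2, 0, 2]
print(dependency_distances(heads))  # both arcs connect adjacent words
print(valency(heads, 2))            # "ate" has one dependent on each side
```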

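(3) Treebank

- Treebank research : for predicting the polarity of short sentences, bag-of-words (BoW) approaches ignore word order, which makes hard negation examples difficult; models built on treebank annotations were reported to classify such examples more effectively.

- A treebank dataset is built by people who annotate the dependencies of sentences by hand and assemble the dependency structures

- Treebanks have been created for many languages besides English.

- Binary classification accuracy on sentiment analysis was reported to improve.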

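4. Dependency Parsing methods

(1) Graph Based

- Considers all possible dependency relations and then selects the parse tree with the highest score (probability)

- Because it considers every candidate tree it is slower, but more accurate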

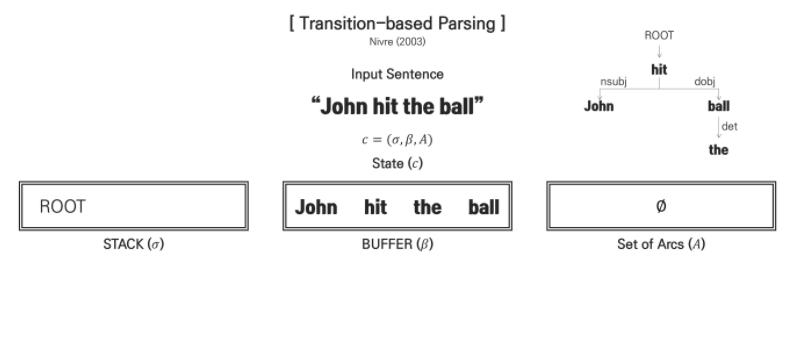
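
To make the graph-based idea concrete, here is a brute-force sketch that scores every possible head assignment for a three-word sentence and keeps the best valid tree. The `score` values are invented for illustration, and exhaustive enumeration is only feasible for toy inputs; practical graph-based parsers use maximum spanning tree algorithms instead.

```python
from itertools import product


def is_tree(heads):
    """heads[d-1] is the head of word d (0 = ROOT); require one root and no cycles."""
    if sum(1 for h in heads if h == 0) != 1:
        return False
    for d in range(1, len(heads) + 1):
        seen, node = set(), d
        while node != 0:
            if node in seen:
                return False
            seen.add(node)
            node = heads[node - 1]
    return True


def best_tree(score):
    """score[h][d] is the score of an arc from head h to dependent d."""
    n = len(score) - 1
    best_heads, best_score = None, float("-inf")
    for heads in product(range(n + 1), repeat=n):  # every head assignment
        if not is_tree(heads):
            continue
        total = sum(score[h][d] for d, h in enumerate(heads, start=1))
        if total > best_score:
            best_heads, best_score = heads, total
    return best_heads, best_score


# Hypothetical arc scores for "I ate fish" (rows: ROOT, I, ate, fish; column 0 unused).
score = [
    [0, 1, 9, 1],
    [0, 0, 2, 1],
    [0, 8, 0, 7],
    [0, 1, 3, 0],
]
best_heads, best_score = best_tree(score)
print(best_heads, best_score)
```

With these scores the winning tree attaches "ate" to ROOT and both "I" and "fish" to "ate".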

(2) Transition Based

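✔ Definition

- Decides step by step whether two words are in a dependency relation, building the parse tree incrementally

- Reads the words of the sentence in order and decides the dependencies between them along the way

- Faster than the graph-based method, but because the analysis proceeds in a single direction along the sequence it cannot consider every possibility, so accuracy is lower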

(2)-1. Greedy transition-based parsing

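✔ Three structures

- BUFFER : holds the word tokens of the input sentence; words enter and leave in First In First Out order.

- STACK : receives the words that leave the buffer; initially it contains only ROOT, and words enter and leave in Last In First Out order. The ROOT token is not a candidate for a decision until the BUFFER is empty.

- Set of Arcs : holds the output of parsing (initially the empty set)

- When a sentence comes in, parsing runs through these three structures to produce the output.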

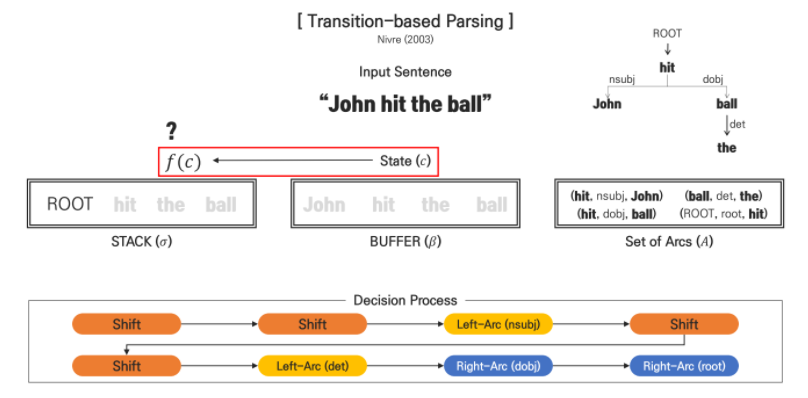

✔ State(c) , c = (buffer, stack, set of arcs)

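- Every decision is made by a function f(c) that takes the state c as input; f decides the dependency (arrow direction and relation label) and can be an SVM, a neural network, etc.

✔ Decision

- Shift : move the first word of the buffer onto the stack

- Right - Arc : add an arc from the second word on the stack to the first (top) word, so the dependency points to the right; the dependent (top word) is then removed from the stack

- Left - Arc : add an arc from the first (top) word on the stack to the second word, so the dependency points to the left; the dependent (second word) is then removed from the stack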
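
The three decisions can be sketched as a tiny arc-standard transition system. In this minimal example the sequence of decisions is written out by hand for "I ate fish"; in a real parser the function f(c) would predict each one. The `step` function and the `oracle` list are hypothetical names used only for illustration.

```python
def step(stack, buffer, arcs, action, label=None):
    """Apply one transition in place; arcs are stored as (head, label, dependent)."""
    if action == "SHIFT":
        stack.append(buffer.pop(0))   # first buffer word moves onto the stack
    elif action == "LEFT-ARC":
        dependent = stack.pop(-2)     # second word on the stack is the dependent
        arcs.append((stack[-1], label, dependent))
    elif action == "RIGHT-ARC":
        dependent = stack.pop()       # top word on the stack is the dependent
        arcs.append((stack[-1], label, dependent))
    else:
        raise ValueError(f"unknown action: {action}")


# Initial state: stack holds only ROOT, buffer holds the sentence, no arcs yet.
stack, buffer, arcs = ["ROOT"], ["I", "ate", "fish"], []

# Hand-written decision sequence (a stand-in for the learned function f).
oracle = [
    ("SHIFT", None),
    ("SHIFT", None),
    ("LEFT-ARC", "nsubj"),   # ate -> I
    ("SHIFT", None),
    ("RIGHT-ARC", "dobj"),   # ate -> fish
    ("RIGHT-ARC", "root"),   # ROOT -> ate
]
for action, label in oracle:
    step(stack, buffer, arcs, action, label)

print(arcs)
```

Parsing ends when the buffer is empty and only ROOT remains on the stack, which is the state this sequence reaches.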

✔ Embedding

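- To feed a state into the function, the state must first be embedded (turned into a feature vector).

- Method proposed in a paper published in 2005 : indicator features, 1 if the conditions are met and 0 otherwise

- Be sure to know the notation: s1 👉 first word on the stack , b1 👉 first word in the buffer , lc() 👉 left child of the word in parentheses , rc() 👉 right child of the word in parentheses , w 👉 word , t 👉 POS tag

- A single state usually ends up as a sparse binary vector with around 10^6 dimensions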
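
As a rough illustration of indicator features, the sketch below turns a state into a handful of feature strings built from s1 and b1, then maps them to indices of a sparse binary vector. The templates, the `<NULL>` placeholder, and both function names are assumptions chosen for this example, not the exact templates of the 2005 paper.

```python
def extract_features(stack, buffer, pos):
    """Return the set of indicator features that fire (value 1) for this state."""
    s1 = stack[-1] if stack else "<NULL>"   # first (top) word on the stack
    b1 = buffer[0] if buffer else "<NULL>"  # first word in the buffer
    s1_t = pos.get(s1, "<NULL>")
    b1_t = pos.get(b1, "<NULL>")
    return {
        f"s1.w={s1}",
        f"s1.t={s1_t}",
        f"b1.w={b1}",
        f"s1.w={s1}&b1.w={b1}",   # conjunction of two words
        f"s1.t={s1_t}&b1.t={b1_t}",  # conjunction of two tags
    }


def to_sparse(features, index):
    """Map feature strings to positions; only these positions are 1, the rest are 0."""
    return sorted(index.setdefault(f, len(index)) for f in features)


pos = {"I": "PRP", "ate": "VBD", "fish": "NN"}
feats = extract_features(["ROOT", "I"], ["ate", "fish"], pos)
index = {}
active = to_sparse(feats, index)
print(sorted(feats))
print(active)
```

Because every distinct word or tag combination gets its own position, the index grows very quickly over a corpus, which is where the very high dimensionality comes from.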

➕ The lecture walks through the process with 'I ate fish'

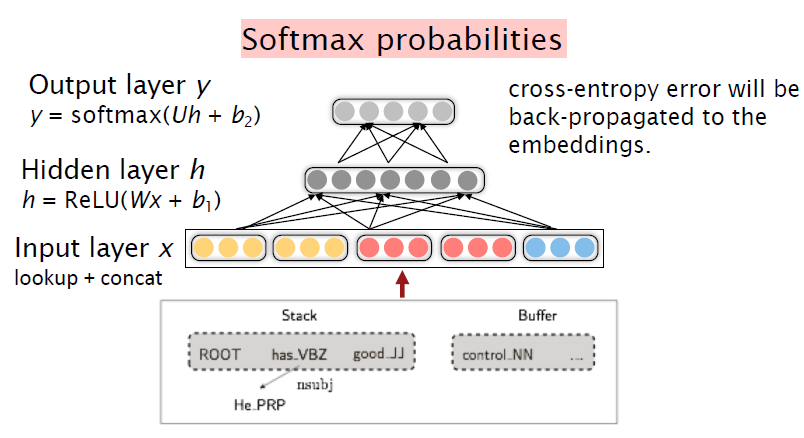

(2)-2. Neural Dependency Parser ⭐ ⭐

✔ Model presented in the Chen and Manning (2014) paper

✔ Proposes a neural transition-based parser that uses dense features, improving both speed and accuracy

✔ Takes word vectors, POS tags, and arc labels as input and concatenates their feature vectors.

✔ Input layer

- Words, POS tags, and arc labels are given as input.

1. word feature

- Top 3 words of the STACK and BUFFER (6) + first and second leftmost & rightmost children of the top 2 STACK words (8) + leftmost-of-leftmost & rightmost-of-rightmost children of the top 2 STACK words (4) = 18

2. POS tags feature

- POS tags of each of the word features (18)

3. Arc labels

- Arc labels of the word features, excluding the 6 words on the STACK and BUFFER (12)

✔ Embedding

✔ Hidden Layer

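- An ordinary feed-forward network

- Uses the cube function as the activation 👉 it can capture interactions among the word, POS tag, and arc-label features

- The cube function, g(x) = x^3, expands into products of three input elements, xi*xj*xk, so combinations of the word, POS tag, and arc-label features are computed and their interactions are modeled 👉 reported to perform better than other nonlinearities

✔ Output layer

- The feature vector from the hidden layer is linearly projected and then passed through a softmax.

- The softmax yields a probability for every possible outcome, and the decision with the highest probability among Shift, Left-Arc, and Right-Arc is selected.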
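
The forward pass described above can be sketched in plain Python. This is a toy version under stated assumptions: the input is the concatenation of 18 word, 18 POS, and 12 arc-label embeddings (the feature counts of Chen and Manning, 2014), while the embedding size, hidden size, random weights, and the three unlabeled actions are arbitrary choices made for illustration.

```python
import math
import random


def cube(x):
    return x ** 3


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [v / total for v in exps]


def forward(x, W1, b1, W2):
    """h = (W1 x + b1)^3, p = softmax(W2 h)."""
    h = [cube(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    logits = [sum(w * hi for w, hi in zip(row, h)) for row in W2]
    return softmax(logits)


random.seed(0)
EMB_DIM, HIDDEN = 4, 8            # toy sizes, chosen only for this sketch
N_FEATURES = 18 + 18 + 12         # word + POS + arc-label features
ACTIONS = ["SHIFT", "LEFT-ARC", "RIGHT-ARC"]


def rand_row(n):
    return [random.uniform(-0.1, 0.1) for _ in range(n)]


x = rand_row(N_FEATURES * EMB_DIM)               # concatenated embeddings (random stand-in)
W1 = [rand_row(len(x)) for _ in range(HIDDEN)]
b1 = rand_row(HIDDEN)
W2 = [rand_row(HIDDEN) for _ in range(len(ACTIONS))]

probs = forward(x, W1, b1, W2)
decision = ACTIONS[probs.index(max(probs))]
print(probs, decision)
```

Cubing the pre-activation is what produces the three-way products xi*xj*xk; with untrained random weights the resulting probabilities are close to uniform, so the printed decision is not meaningful.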

✔ Performance comparison

1. Ablation study results

- The cube function scored higher than the other activation functions

- Using pre-trained word vectors (word2vec) scored higher than random initialization

- Using all three kinds of information (word, POS, label) scored highest

2. POS and Label Embedding

- The POS tag and arc-label embeddings start from random initialization and come to encode semantic similarity as training proceeds.

- When projected onto a 2D space with t-SNE, similar items can be seen lying close together.

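3. Treebank dataset experiment results

- UAS (unlabeled attachment score) : only the head of each arc has to be predicted correctly

- LAS (labeled attachment score) : both the head and the label have to be predicted correctly (more decisions to get right)

- Comparing parsers, the first one (transition-based with conventional features) is much faster than the graph-based parsers but slightly less accurate

- The last model, which uses a neural network, is about as accurate as the graph-based parsers while also being fast!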

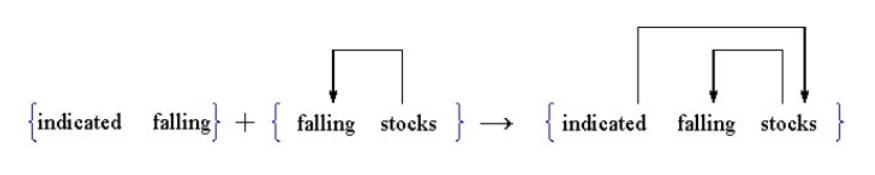
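
The two metrics in the comparison can be computed as follows. This is a minimal sketch assuming each word's annotation is a `(head, label)` pair; the gold and predicted parses are made-up examples, not results from the paper.

```python
def attachment_scores(gold, pred):
    """gold and pred are lists of (head, label) pairs, one per word."""
    n = len(gold)
    uas = sum(1 for (gh, _), (ph, _) in zip(gold, pred) if gh == ph) / n  # head only
    las = sum(1 for g, p in zip(gold, pred) if g == p) / n                # head and label
    return uas, las


# "I ate fish": the prediction gets every head right but mislabels one arc.
gold = [(2, "nsubj"), (0, "root"), (2, "dobj")]
pred = [(2, "nsubj"), (0, "root"), (2, "nmod")]
uas, las = attachment_scores(gold, pred)
print(uas, las)
```

LAS can never exceed UAS, since a word only counts toward LAS when its head is already correct.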

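Additional parsing models

➕ Dynamic programming

- For a long sentence, split it into several pieces, build subtrees for the substrings, and finally combine them

➕ Constraint Satisfaction

- Set grammatical constraints up front, keep what satisfies them and eliminate what does not, so that only the dependencies that satisfy the constraints are parsed

👀 To look into further : projectivity ) the property that dependency arcs do not cross each other. What about the non-projective case?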
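
As a starting point for that question, projectivity can be tested by looking for crossing arcs. The sketch below assumes the `heads` list representation (`heads[i]` is the head of word `i`, 0 is ROOT); the function name and the second example parse are hypothetical.

```python
def is_projective(heads):
    """True if no two arcs cross when drawn above the sentence."""
    # Store each arc as (left end, right end), ROOT being position 0.
    arcs = [(min(h, d), max(h, d)) for d, h in enumerate(heads) if d > 0]
    for a, b in arcs:
        for c, d in arcs:
            if a < c < b < d:  # one arc starts inside the other and ends outside it
                return False
    return True


print(is_projective([None, 2, 0, 2]))     # "I ate fish": no crossing arcs
print(is_projective([None, 3, 4, 0, 1]))  # arcs 1-3 and 2-4 cross
```

The basic arc-standard transitions only ever connect the top two words on the stack, so on their own they can only build projective trees; non-projective parses need extra machinery.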

📌 Practice materials

👀 spaCy library (no Korean support at the time of writing)

- 자연어처리(NLP) 29일차 (spaCy 소개) [NLP day 29: introduction to spaCy], 2019.08.06, omicro03.medium.com

- spacy를 이용해서 자연어처리하자. [Let's do NLP with spaCy], frhyme.github.io

- Hitchhiker's Guide to NLP in spaCy, www.kaggle.com

🌱 (Images) Reference material from the week 5 session of the author's study club : https://github.com/Ewha-Euron/2022-1-Euron-NLP
