📌 These are my notes on what I studied in my university's 'Artificial Intelligence' course.

1️⃣ Review

① FC backpropagation

👉 Make sure you understand where dz(L+1) gets substituted into dy(L)!

💨 dy(L) = (O - O_t) * f'(z_k) * W = dz(L+1) * W

💨 dz(L+1) = (O - O_t) * f'(z_k) = dy(L+1) * f'(z_k)

💨 At the final layer, dy(L+1) = dC/dy(L+1) = d{1/2 * (O - O_t)^2} / dy(L+1) = d{1/2 * (O - O_t)^2} / dO = (O - O_t), since y(L+1) = O is the network output and O_t is the target.

👻 by chain rule

- activation gradient : dL / dy(L) = local gradient * weight

- local gradient : dL / dz(L)

- weight gradient : dL / dW(L) = local gradient * input

⭐ Understand the flow: the activation gradient is passed from later layers back to earlier layers, the local gradient is computed from it, and the local gradient is then used to obtain the weight gradient.

⭐ Don't forget to use transposes to make the dimensions match.
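The sketch below shows this flow for a single dense layer. It is a minimal pure-Python version that assumes a sigmoid activation f and the squared-error cost C = 1/2 * Σ(O - O_t)^2 from above. The names `forward`, `loss`, and `dense_backward` are hypothetical. The transposes appear in two places: the weight gradient is an outer product with the input, and the gradient passed back is W^T times dz.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(W, b, y_prev):
    """z = W y + b, O = f(z) for a single dense layer (W is n_out x n_in)."""
    z = [sum(w_kj * y_j for w_kj, y_j in zip(row, y_prev)) + b_k
         for row, b_k in zip(W, b)]
    return [sigmoid(z_k) for z_k in z]


def loss(W, b, y_prev, target):
    """C = 1/2 * sum((O - O_t)^2)."""
    return 0.5 * sum((o - t) ** 2 for o, t in zip(forward(W, b, y_prev), target))


def dense_backward(W, b, y_prev, target):
    """Returns (dW, db, dy_prev) when this dense layer is the last layer."""
    O = forward(W, b, y_prev)
    # activation gradient at the last layer: dC/dO = O - O_t
    dO = [o - t for o, t in zip(O, target)]
    # local gradient dz = dO * f'(z); for sigmoid, f'(z) = O * (1 - O)
    dz = [d * o * (1.0 - o) for d, o in zip(dO, O)]
    # weight gradient = local gradient * input^T (outer product, n_out x n_in)
    dW = [[dz_k * y_j for y_j in y_prev] for dz_k in dz]
    db = dz[:]
    # activation gradient for the previous layer = W^T * dz (note the transpose)
    dy_prev = [sum(W[k][j] * dz[k] for k in range(len(W)))
               for j in range(len(y_prev))]
    return dW, db, dy_prev
```

To check that the transposes are in the right place, compare `dW` and `dy_prev` against a finite-difference estimate of `loss`.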

② CONV forward in Python

👻 CONV operation basics (in 3D conv)

- number of input channels = number of filter channels

- number of filters = number of output channels

- Formula for the output feature map size (W1, H1: input width and height, F: filter size, P: padding, S: stride)

- W2 = (W1 - F + 2P) / S +1

- H2 = (H1 - F + 2P) / S +1

- Output size after max pooling

- (W1 - Ps) / S + 1

- Ps is the pooling size
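A small sketch can check both size formulas. The function names are hypothetical. When the division is not exact, this sketch rounds down with `//`, which is an assumption here. The example numbers use an AlexNet-style first layer (227×227 input, 11×11 filter, stride 4, no padding) followed by 3×3 max pooling with stride 2.

```python
def conv_output_size(w1: int, f: int, p: int, s: int) -> int:
    """W2 = (W1 - F + 2P) / S + 1  (the same formula gives H2 from H1)."""
    return (w1 - f + 2 * p) // s + 1


def pool_output_size(w1: int, ps: int, s: int) -> int:
    """Max-pooling output size: (W1 - Ps) / S + 1."""
    return (w1 - ps) // s + 1


# AlexNet-style first layer: 227x227 input, 11x11 filter, stride 4, no padding
print(conv_output_size(227, 11, 0, 4))   # 55
# followed by 3x3 max pooling with stride 2
print(pool_output_size(55, 3, 2))        # 27
# 3x3 filter with P=1, S=1 keeps the spatial size
print(conv_output_size(32, 3, 1, 1))     # 32
```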

👻 AlexNet

- Convolution (feature extraction): extracts from the image the features that correspond to each filter

- Dense layer (classification): performs linear classification on the distribution of the extracted feature data

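👻 Implementing the CNN forward pass in Python

- O: output, W: weight (= the filter's element values), I: input, S: stride, B: bias, K: filter (refers to a single filter; in the cost below, K is the number of filters)

- Total cost (number of multiplications): W2 * H2 * K * C * F * F, because each of the W2 * H2 output positions of each of the K filters needs C * F * F multiplications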
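The following is a minimal nested-loop sketch of the forward pass using the notation above (I, W, B, S, K filters, C channels, F×F filters). It assumes no padding (P=0) and stores tensors as nested Python lists. The name `conv_forward` is hypothetical. The function also counts multiplications, so you can check the total-cost formula against it.

```python
def conv_forward(I, W, B, S=1):
    """Naive multi-channel convolution forward pass with P = 0.

    I: input,   C x H1 x W1  (nested lists)
    W: filters, K x C x F x F
    B: biases,  one per filter (length K)
    Returns (O, mults): O is K x H2 x W2, mults counts multiplications.
    """
    C, H1, W1 = len(I), len(I[0]), len(I[0][0])
    K, F = len(W), len(W[0][0])
    H2 = (H1 - F) // S + 1
    W2 = (W1 - F) // S + 1
    O = [[[0.0] * W2 for _ in range(H2)] for _ in range(K)]
    mults = 0
    for k in range(K):                  # one output channel per filter
        for i in range(H2):             # output row
            for j in range(W2):         # output column
                acc = B[k]
                for c in range(C):      # filter depth = input channels
                    for p in range(F):
                        for q in range(F):
                            acc += W[k][c][p][q] * I[c][i * S + p][j * S + q]
                            mults += 1
                O[k][i][j] = acc
    return O, mults
```

For example, take a 2×4×4 input and three 2×3×3 filters with S=1. The output is 3×2×2, and the counter reports 2 * 2 * 3 * 2 * 3 * 3 = 216 multiplications, which matches W2 * H2 * K * C * F * F.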

2️⃣ CNN backpropagation

① CNN backpropagation

(1) Forward example with C=1, K=1, S=1, P=0

💨 Example: computing O(00)

- With x=0, y=0, summing over (p,q) ∈ {(0,0), (1,0), (0,1), (1,1)} (a 2×2 filter) gives i00*k00 + i10*k10 + i01*k01 + i11*k11.
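Here is the same computation in a few lines of Python, for a single channel and a single filter with S=1 and P=0. The 3×3 input and 2×2 filter values are made up for illustration. `correlate_valid` is a hypothetical helper name.

```python
def correlate_valid(I, K):
    """O[x][y] = sum over (p, q) of I[x+p][y+q] * K[p][q]  (C=1, K=1, S=1, P=0)."""
    nx = len(I) - len(K) + 1
    ny = len(I[0]) - len(K[0]) + 1
    return [[sum(I[x + p][y + q] * K[p][q]
                 for p in range(len(K)) for q in range(len(K[0])))
             for y in range(ny)]
            for x in range(nx)]


I = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9]]
K = [[1, 2],
     [3, 4]]
O = correlate_valid(I, K)
# O00 = i00*k00 + i10*k10 + i01*k01 + i11*k11 = 1*1 + 4*3 + 2*2 + 5*4 = 37
print(O)  # [[37, 47], [67, 77]]
```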

(2) backward

- This is similar to backpropagation in an FC layer (by the chain rule).

💨 The key is finding dO/dI and dO/dk!

(2)-a. Find dO/dk

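😎 The gradient of the loss with respect to the kernel (weight)

equals the convolution of the input with the activation gradient dL/dO (a valid sliding-window sum, just like the forward pass, with dL/dO in the role of the filter).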
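Below is a minimal sketch of the kernel gradient for the C=1, K=1, S=1, P=0 case. It assumes the upstream gradient dL/dO is already known; here it is just a made-up 2×2 array. `correlate_valid` and `kernel_grad` are hypothetical names.

```python
def correlate_valid(A, B):
    """Valid sliding-window sum: R[x][y] = sum over (p, q) of A[x+p][y+q] * B[p][q]."""
    nx = len(A) - len(B) + 1
    ny = len(A[0]) - len(B[0]) + 1
    return [[sum(A[x + p][y + q] * B[p][q]
                 for p in range(len(B)) for q in range(len(B[0])))
             for y in range(ny)]
            for x in range(nx)]


def kernel_grad(I, dO):
    """dL/dK[p][q] = sum over (x, y) of dL/dO[x][y] * I[x+p][y+q].

    This is the input slid against dL/dO, so the result has the kernel's
    shape: (H1 - H2 + 1) x (W1 - W2 + 1).
    """
    return correlate_valid(I, dO)


I = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9]]
dO = [[0.5, -1.0],      # made-up upstream gradient dL/dO (2 x 2)
      [2.0, 0.25]]
print(kernel_grad(I, dO))  # [[7.75, 9.5], [13.0, 14.75]]
```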

(2)-b. Find dO/dI

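😎 The gradient of the loss with respect to the input

equals the convolution of the weight rotated by 180 degrees

with the activation gradient dL/dO (a full convolution: dL/dO is zero-padded by F-1 on every side, so the result has the same size as the input).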
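This is a matching sketch for the input gradient under the same assumptions: single channel, S=1, P=0, and a square F×F kernel. The code zero-pads dL/dO by F-1 on every side and then slides the 180°-rotated kernel over it, which yields an array the size of the input. All helper names are hypothetical.

```python
def rot180(K):
    """Rotate a 2D kernel by 180 degrees (reverse rows, then each row)."""
    return [row[::-1] for row in K[::-1]]


def zero_pad(A, n):
    """Surround a 2D list with n rows/columns of zeros on every side."""
    width = len(A[0]) + 2 * n
    middle = [[0.0] * n + list(row) + [0.0] * n for row in A]
    return [[0.0] * width for _ in range(n)] + middle + [[0.0] * width for _ in range(n)]


def correlate_valid(A, B):
    """Valid sliding-window sum: R[x][y] = sum over (p, q) of A[x+p][y+q] * B[p][q]."""
    nx = len(A) - len(B) + 1
    ny = len(A[0]) - len(B[0]) + 1
    return [[sum(A[x + p][y + q] * B[p][q]
                 for p in range(len(B)) for q in range(len(B[0])))
             for y in range(ny)]
            for x in range(nx)]


def input_grad(dO, K):
    """dL/dI[i][j] = sum over (x, y) of dL/dO[x][y] * K[i-x][j-y].

    Implemented as a full convolution: pad dL/dO by F-1, then slide rot180(K).
    """
    F = len(K)
    return correlate_valid(zero_pad(dO, F - 1), rot180(K))


K = [[1, 2],
     [3, 4]]
dO = [[0.5, -1.0],      # made-up upstream gradient dL/dO (2 x 2)
      [2.0, 0.25]]
print(input_grad(dO, K))
# [[0.5, 0.0, -2.0], [3.5, 3.25, -3.5], [6.0, 8.75, 1.0]]
```

The result has the 3×3 shape of the input. You can cross-check it by scattering each dL/dO[x][y] * K[p][q] back onto position (x+p, y+q) of the input, which gives the same numbers.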

(2)-c. summary
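The block below collects the three results for the single-channel case (S=1, P=0, F×F kernel) in index form. It only restates (1), (2)-a, and (2)-b. Kernel entries with out-of-range indices are taken to be zero.

```latex
\begin{aligned}
O_{x,y} &= \sum_{p=0}^{F-1}\sum_{q=0}^{F-1} I_{x+p,\,y+q}\,K_{p,q} \\
\frac{\partial L}{\partial K_{p,q}} &= \sum_{x,y} \frac{\partial L}{\partial O_{x,y}}\, I_{x+p,\,y+q} \\
\frac{\partial L}{\partial I_{i,j}} &= \sum_{x,y} \frac{\partial L}{\partial O_{x,y}}\, K_{i-x,\,j-y}
\end{aligned}
```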

② Maxpooling backpropagation

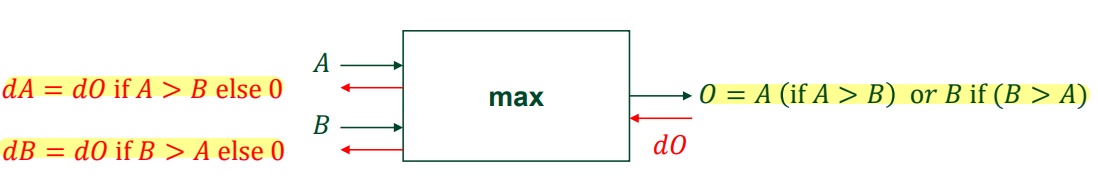

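(1) Backpropagation of the max operation on A and B

- dA: when A is greater than B, O = A, so the derivative with respect to A is 1 (A receives dO); otherwise it is 0.

- dB: when B is greater than A, O = B, so the derivative with respect to B is 1 (B receives dO); otherwise it is 0.

- In other words, the output gradient is passed only to the value that was the max.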

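(2) Backpropagation through max pooling

- Apply the idea from (1) in the same way.

- In the forward pass, remember the position of the maximum element in each window. In the backward pass, send the output gradient only to those remembered positions; every other position gets 0.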
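Here is a minimal sketch of "remember the argmax, then route the gradient back" on a single 2D feature map. The function names are hypothetical. Three choices are assumptions of this sketch: ties go to the first maximum in the window, positions are stored as (row, col), and `+=` is used so overlapping windows accumulate.

```python
def maxpool_forward(X, ps, s):
    """Max pooling on a 2D list X with window ps x ps and stride s.

    Returns (out, argmax): argmax[i][j] is the (row, col) in X of the
    element that produced out[i][j]; backward needs these positions.
    """
    n_r = (len(X) - ps) // s + 1
    n_c = (len(X[0]) - ps) // s + 1
    out = [[None] * n_c for _ in range(n_r)]
    argmax = [[None] * n_c for _ in range(n_r)]
    for i in range(n_r):
        for j in range(n_c):
            window = [(X[i * s + a][j * s + b], (i * s + a, j * s + b))
                      for a in range(ps) for b in range(ps)]
            val, pos = max(window, key=lambda t: t[0])  # first max wins on ties
            out[i][j] = val
            argmax[i][j] = pos
    return out, argmax


def maxpool_backward(dout, argmax, in_shape):
    """Send each output gradient only to the position remembered in forward."""
    rows, cols = in_shape
    dX = [[0.0] * cols for _ in range(rows)]
    for i, grad_row in enumerate(dout):
        for j, g in enumerate(grad_row):
            r, c = argmax[i][j]
            dX[r][c] += g  # += so overlapping windows (s < ps) accumulate
    return dX


X = [[1, 3, 2, 0],
     [4, 2, 1, 5],
     [0, 1, 7, 2],
     [3, 6, 1, 1]]
out, argmax = maxpool_forward(X, ps=2, s=2)
print(out)  # [[4, 5], [6, 7]]
dX = maxpool_backward([[0.1, 0.2], [0.3, 0.4]], argmax, (4, 4))
```

With a 4×4 map, 2×2 windows, and stride 2, each of the four output gradients lands on exactly one input position, and the other twelve positions stay 0.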

③ batch gradient descent in CNN

1. Initialize the weights and biases to very small values.

2. For ⭐ the entire training set:

a. Forward propagation : calculate the batch of output values

b. Compute Loss L

c. Backpropagate errors through network

d. Update weights and biases

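3. Repeat step 2 until the network is well trained.

However, training on the entire dataset

means every single weight update requires a forward pass over every sample in the dataset,

so it takes a long time. (👀 This is not done in practice.)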
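The toy sketch below shows why a single full-batch update is expensive. To keep it short, a one-variable linear model y ≈ w*x + b with loss 1/(2N) * Σ(error^2) stands in for the CNN; this substitution is an assumption. `batch_gd` is a hypothetical name. The counter shows that every update costs N forward passes.

```python
def batch_gd(xs, ys, lr=0.05, steps=2000):
    """Full-batch gradient descent for the toy model y ≈ w*x + b.

    Loss: 1/(2N) * sum((w*x + b - y)^2). Every update needs a forward
    pass over all N samples, which the `forwards` counter records.
    """
    w, b = 0.01, 0.01                                  # 1. small initial values
    n = len(xs)
    forwards = 0
    for _ in range(steps):                             # 3. repeat (fixed count here)
        preds = [w * x + b for x in xs]                # 2a. forward over the entire set
        forwards += n
        errs = [p - y for p, y in zip(preds, ys)]      # 2b. per-sample errors
        dw = sum(e * x for e, x in zip(errs, xs)) / n  # 2c. gradients
        db = sum(errs) / n
        w -= lr * dw                                   # 2d. one update per full pass
        b -= lr * db
    return w, b, forwards


w, b, forwards = batch_gd([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
print(round(w, 4), round(b, 4), forwards)  # 2.0 1.0 10000
```

On the noise-free data y = 2x + 1, 2000 updates recover w ≈ 2 and b ≈ 1. That result costs 2000 × 5 = 10,000 forward passes.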

④ Stochastic gradient descent in CNN

(1) SGD

1. Initialize the weights and biases to very small values.

2. For ⭐ a mini-batch of randomly chosen training samples :

a. Forward propagation : calculate the mini-batch of output values

b. Compute Loss L

c. Backpropagate errors through network

d. Update weights and biases

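3. Repeat step 2 until one epoch is complete, i.e. until the entire training set has been used.

4. Repeat steps 2 and 3 until the network is well trained.

- Mini-batches are drawn from the training set at random, excluding samples already used earlier in the same epoch.

- Each mini-batch of training samples is used to perform one weight update.

- So each epoch performs as many weight updates as there are mini-batches.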
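This trains the same toy linear model with mini-batch SGD, again as a stand-in for a CNN. The sketch shuffles the indices once per epoch and slices them into disjoint mini-batches. That is one common way, assumed here, to avoid reusing samples within an epoch. `sgd` and its parameters are hypothetical.

```python
import random


def sgd(xs, ys, batch_size=2, lr=0.05, epochs=1000, seed=0):
    """Mini-batch SGD for the toy model y ≈ w*x + b.

    Each epoch shuffles the sample indices and walks through disjoint
    mini-batches, so no sample is reused within an epoch. Returns the
    parameters and the number of weight updates in the last epoch.
    """
    rng = random.Random(seed)
    w, b = 0.01, 0.01                        # 1. small initial values
    n = len(xs)
    updates = 0
    for _ in range(epochs):                  # 4. repeat epochs
        order = list(range(n))
        rng.shuffle(order)                   # random mini-batches each epoch
        updates = 0
        for start in range(0, n, batch_size):    # 3. until the epoch is done
            batch = order[start:start + batch_size]
            errs = [(w * xs[i] + b) - ys[i] for i in batch]                # 2a/2b
            dw = sum(e * xs[i] for e, i in zip(errs, batch)) / len(batch)  # 2c
            db = sum(errs) / len(batch)
            w -= lr * dw                     # 2d. one update per mini-batch
            b -= lr * db
            updates += 1
    return w, b, updates


w, b, updates = sgd([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
print(updates)  # 3
```

With 5 samples and a mini-batch size of 2, each epoch performs 3 weight updates (batches of 2, 2, and 1), i.e. ceil(5 / 2).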

(2) SGD vs gradient descent

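👀 Compared with GD, SGD...

- trains faster, because each weight update needs only one mini-batch instead of the whole dataset.

- thanks to its randomness, is more likely to escape local minima and may reach the global minimum, so its final performance tends to be better.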
