📌 파이썬 머신러닝 완벽가이드 공부 내용 정리

📌 실습 코드

https://colab.research.google.com/drive/1UzQNyu-rafb1SQEDcQCeCyYO54ECgULT?usp=sharing

08. 텍스트 분석.ipynb

Colaboratory notebook

colab.research.google.com

1️⃣ 텍스트 분석의 이해

👀 개요

💡 NLP 와 텍스트 마이닝

✔ NLP

- 인간의 언어를 이해하고 해석하는데 중점을 두고 발전

- 텍스트 마이닝을 향상하게 하는 기반 기술

- 기계번역, 질의응답 시스템 등

✔ 텍스트 마이닝

- 비정형 텍스트에서 의미있는 정보를 추출하는 것에 중점

1. 텍스트 분류 : 문서가 특정 분류 또는 카테고리에 속하는 것을 예측하는 기법

ex. 신문 기사 카테고리 분류, 스팸메일 검출과 같은 지도학습

2. 감성분석 : 텍스트에서 나타나는 감정/판단/기분 등 주관적 요소를 분석

ex. 제품 리뷰 분석, 의견분석 등 비지도 학습 & 지도학습 적용

3. 텍스트 요약 : 중요한 주제나 중심 사상을 추출하는 기법

ex. 토픽 모델링

4. 텍스트 군집화와 유사도 측정 : 비슷한 유형의 문서에 대해 군집화/유사도 측정 수행

💡 텍스트 분석의 이해

✔ 피처 추출 (피처 벡터화)

- 텍스트를 단어 기반의 다수의 피처로 추출하고, 이 피처에 단어 빈도수와 같은 숫자값을 부여 👉 텍스트는 단어의 조합인 벡터값으로 표현

- 텍스트를 벡터값을 가지는 피처로 변환하는 것은 머신러닝 모델을 적용하기 전에 수행해야할 매우 중요한 요소이다.

- 대표적인 피처 벡터화 변환 방법 : BoW, Word2Vec

✔ 분석 수행 프로세스

1. 텍스트 사전 준비작업 (전처리)

- 대/소문자 변경, 특수문자 삭제 등의 클렌징

- 단어 등의 토큰화 작업

- 의미없는 단어 제거 작업

- 어근 추출 (Stemming, Lemmatization)

2. 피처 벡터화/추출

- 가공된 텍스트에서 피처를 추출하고 벡터 값을 할당한다.

- BoW 👉 Count 기반, TF-IDF 기반

- Word2vec

3. ML 모델 수립 및 학습/예측/평가

💡 분석 패키지

✔ NLTK

- 대표적인 파이썬 NLP 패키지

- 다양한 데이터세트와 서브 모듈을 가지고 있으며 NLP 의 거의 모든 영역을 커버하고 있음

- 수행 속도 측면에서 아쉬움 : 실제 대량 데이터 기반에서는 제대로 활용되지 못함

✔ Gensim

- 토픽모델링 분야에서 가장 두각을 나타내는 패키지

- Word2Vec 구현 등 다양한 기능 제공

✔ SpaCy

- 뛰어난 수행 성능, 최근 가장 주목받는 패키지

🏃♀️ 한국어 자연어 처리 : KoNLPy

2️⃣ 텍스트 전처리

👀 개요

💡 클렌징

✔ 불필요한 문자, 기호등을 사전에 제거하는 작업

✔ HTML, XML 태그나 특정 기호 등을 사전에 제거한다.

💡 정규표현식

💡 텍스트 토큰화

✔ 문장 토큰화

- 문장의 마침표나 개행문자(\n) 등 문장의 마지막을 뜻하는 기호에 따라 분리하는 것이 일반적이다.

- 혹은 정규표현식에 따른 문장 토큰화도 가능하다.

- sent_tokenize( ) 가 반환하는 것은 각 문장으로 구성된 리스트 객체이다.

from nltk import sent_tokenize

import nltk

nltk.download('punkt') # 마침표, 개행 문자 등의 데이터 세트 다운로드

text_sample = 'I ate an apple. \ I ate a banana. \ I worked at home.'

sentences = sent_tokenize(text = text_sample)

print(sentences)

### 📌 결과 ####

['I ate an apple.' , 'I ate a banana.', 'I worked at home.']

✔ 단어 토큰화

- 문장을 단어로 토큰화

- word_tokenize( )

- 기본적으로 공백, 콤마, 마침표, 개행문자 등으로 단어를 분리하나, 정규표현식을 통해 토큰화를 수행할 수도 있다.

- BoW 와 같이 단어의 순서가 중요하지 않다면, 문장 토큰화를 사용하지 않고 단어 토큰화만 사용해도 충분하다.

from nltk import word_tokenize

sentence = 'I ate the pizza.'

words = word_tokenize(sentence)

print(words)

## 📌 결과 ##

['I', 'ate', 'the', 'pizza']

👀 일반적으로, 하나의 문서에 대해 문장 토큰화를 적용하고, 분리된 각 문장에 대해 다시 단어 토큰화를 적용하는 것이 일반적이다.

👀 문장을 단어별로 토큰화할 경우, 문맥적인 의미가 무시된다 👉 n-gram 을 통해 해결을 시도

👀 n-gram : 연속된 n 개 단어를 하나의 토큰화 단위로 분리

ex. ('I', 'ate'), ('ate', 'the'), ('the', 'pizza')

💡 불용어 제거

✔ Stop word

- 분석에 큰 의미가 없는 단어

- is, the, a, will 등 문맥적으로 큰 의미가 없는 단어가 이에 해당한다. 그러나 이러한 단어는 문장 내에 빈번하게 등장하므로, 오히려 중요한 단어로 인지될 수 있기 때문에 이러한 의미없는 단어를 사전에 제거하는 것이 중요하다.

- 언어별로 이러한 불용어들이 목록화 되어 있다.

import nltk

nltk.download('stopwords')

stopwords = nltk.corpus.stopwords.words('english')

all_tokens = []

# 📌 불용어 제거 for 문

for sentence in word_tokens:

filtered_words=[]

# 개별 문장별로 ⭐tokenize된 sentence list⭐ 에 대해 stop word 제거 Loop

for word in sentence:

#소문자로 모두 변환합니다.

word = word.lower()

# tokenize 된 개별 word가 stop words 들의 단어에 포함되지 않으면 word_tokens에 추가

if word not in stopwords:

filtered_words.append(word)

all_tokens.append(filtered_words)

print(all_tokens)

💡 어근 추출

✔ Stemming과 Lemmatization

- 영어의 경우 과거/현재, 3인칭 단수 여부, 진행형 등 매우 많은 조건에 따라 원래 단어가 변화한다.

- stemming 과 lemmatization 은 단어의 원형을 결과로 도출해준다.

- 이때 Lemmatization 이 보다 정교하게 의미론적인 기반에서 단어의 원형을 찾아주는 대신 시간이 오래걸린다는 단점이 있다.

- Stemming 은 단순하게 원형 단어를 찾아준다.

✔ NLTK 클래스

- Stemmer : Porter, Lancaster, Snowball Stemmer

- Lemmatization : WordNetLemmatizer

from nltk.stem import LancasterStemmer

stemmer = LancasterStemmer()

print(stemmer.stem('happier'),stemmer.stem('happiest'))

## 📌 결과 ##

happy happiest

👉 stemmer 는 형용사 happy 에 대한 원형을 제대로 찾지 못한다.

from nltk.stem import WordNetLemmatizer

import nltk

nltk.download('wordnet')

lemma = WordNetLemmatizer()

print(lemma.lemmatize('happier','a'),lemma.lemmatize('happiest','a'))

## 📌 결과 ##

happy happy

👉 Lemmatization 은 보다 정확한 원형 단어를 추출해주기 위하여 단어의 '품사' 를 입력해주어야 한다.

* 동사 : 'v'

* 형용사 : 'a'

3️⃣ BoW

👀 개요

💡 Bag of Words

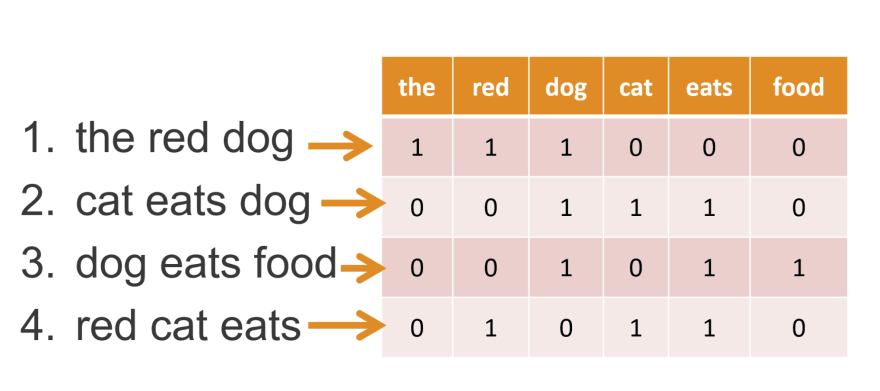

✔ BoW 피처 벡터화

- 문서가 가지는 모든 단어를 문맥이나 순서를 무시하고 일괄적으로 단어에 대해 빈도 값을 부여해 피처 값을 추출하는 모델이다.

- 모든 문장들에 대하여, 모든 단어에서 중복을 제거하고 각 단어를 칼럼 형태로 나열한다.

- 그리고 각 단어에 고유의 인덱스를 부여한 후, 개별 문장에서 해당 단어가 나타나는 횟수를 각 단어에 기재한다.

✔ 장단점

💨 장점

- 쉽고 빠른 모델 구축이 가능

- 단순히 단어의 발생 횟수에 기반하나, 문서의 특징을 잘 잡아내는 모델이라 전통적으로 여러 분야에서 활용도가 높다.

💨 단점

- 문맥 의미 반영 부족 : 단어의 순서를 고려하지 않기 때문에 단어의 문맥적 의미가 무시된다. 이를 보완하기 위해 n-gram 을 사용할 수 있으나 문맥적인 해석에 대해선 제한적이다.

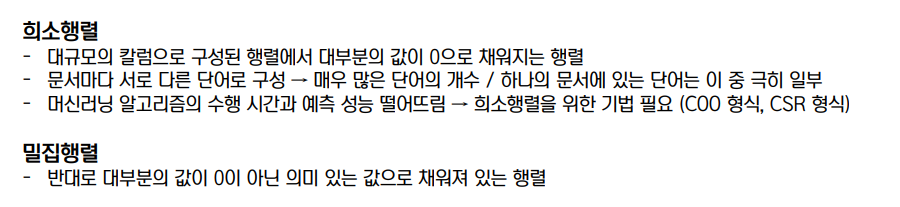

- 희소행렬 sparse 문제 : 문서마다 서로 다른 단어로 구성되기에 문서마다 나타나지 않는 단어가 훨씬 많다. 그러나 단어 행렬에서 (중복되지 않은) 모든 단어가 열에 위치하기 때문에 대부분의 셀의 값이 0으로 채워진다는 단점이 있다. 희소행렬은 일반적으로 ML 알고리즘의 수행 시간과 예측 성능을 떨어뜨리므로 이에 대한 특별한 기법이 마련되어 있다.

👀 밀집행렬 : 대부분의 값이 0이 아닌 의미있는 값으로 채워진 행렬

💡 사이킷런 CountVectorizer, TfidfVectorizer

✔ BOW 의 피처벡터화

- 모든 문서에서 모든 단어를 칼럼 형태로 나열하고 각 문서에서 해당 단어의 횟수나 정규화된 빈도를 값으로 부여하는 데이터세트 모델로 변경하는 것

- M개의 문서, 총 N 개의 단어 👉 MxN 행렬

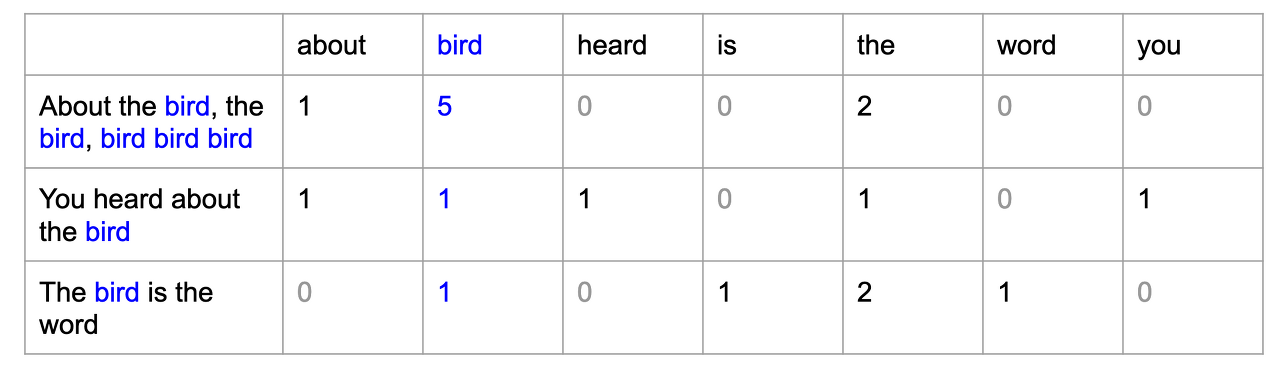

✔ CountVectorizer

- 각 문서에 해당 단어가 나타나는 횟수가 행렬의 각 셀의 값에 해당한다.

- 카운트 값이 높을수록 중요한 단어로 인식된다.

- 카운트만 부여하다보니, 그 문서의 특징을 나타내기 보다는 언어의 특성상 문장에서 자주 사용될 수밖에 없는 단어까지 높은 값을 부여하게 된다는 단점이 있다.

- 사이킷런의 CountVectorizer 클래스는 단지 피처 벡터화만을 수행하진 않으며 소문자 일괄 변환, 토큰화, 스톱 워드 필터링 등의 텍스트 전처리도 함께 수행한다 👉 토크나이징 + 벡터화를 동시에 해준다. (따로 토큰화까지 진행된 형태를 넣어주어도 상관은 없음. 각 문서가 리스트 형태로 구성되어 있기만 하면됨)

>>> from sklearn.feature_extraction.text import CountVectorizer

>>> corpus = [

... 'This is the first document.',

... 'This document is the second document.',

... 'And this is the third one.',

... 'Is this the first document?',

... ]

>>> vectorizer = CountVectorizer()

>>> X = vectorizer.fit_transform(corpus)

>>> vectorizer.get_feature_names_out()

array(['and', 'document', 'first', 'is', 'one', 'second', 'the', 'third',

'this'], ...)

>>> print(X.toarray())

[[0 1 1 1 0 0 1 0 1]

[0 2 0 1 0 1 1 0 1]

[1 0 0 1 1 0 1 1 1]

💨 파라미터

| max_df | ◾ 전체 문서에 걸쳐 너무 높은 빈도수를 가진 단어 피처 제외 👉 max_df = 100 : 전체 문서에 걸쳐 100개 이하로 나타나는 단어만 피처로 추출 👉 max_df = 0.9 : 부동 소수점으로 값을 지정하면 전체 문서에 걸쳐 빈도수 0~90% 까지의 단어만 피처로 추출하고 나머지 상위 5%는 추출하지 않음 |

| min_df | ◾ 전체 문서에 걸쳐 너무 낮은 빈도수를 가진 단어 피처 제외 (보통 2~3으로 설정) ◾ 너무 낮은 빈도로 등장하는 단어는 크게 중요하지 않거나 garbage 성 단어라고 볼 수 있다. 🎈 max_df 와 동작방식이 같음 |

| max_features | 추출하는 피처의 개수를 제한하며 정수로 값을 지정한다. 가장 높은 빈도를 가지는 단어순으로 정렬해 지정한 개수까지만 피처로 추출한다. |

| stop_words | 'english' 로 지정하면 영어 불용어로 지정된 단어는 추출에서 제외한다. |

| n_gram_range | ◾ 단어 순서를 어느정도 보강하기 위한 파라미터 ◾ 튜플 형태로 (범위 최솟값, 범위 최댓값) 을 지정한다. 👉 n_gram_range = (1,1) : 토큰화된 단어를 1개씩 피처로 추출한다. 👉 n_gram_range = (1,2) : 토큰화된 단어를 1개씩, 그리고 순서대로 2개씩 묶어서 추출한다. |

| analyzer | ◾ 피처 추출을 수행한 단위를 지정 ◾ 기본값 : 'word' ◾ character 의 특정 범위를 피처로 만드는 특정한 경우 등을 적용할 때 사용된다. |

| token_pattern | ◾ 토큰화를 수행하는 정규 표현식 패턴을 지정 ◾ '\b\w\w+\b' 가 디폴드 값으로 공백, 개행문자 등으로 구분된 단어분리자 혹은 2문자 이상의 단어를 토큰으로 분리한다. ◾ analyzer='word' 일때만 변경 가능 (보통 디폴트 값을 사용) |

| tokenizer | 토큰화를 별도의 커스텀 함수로 이용시 적용 |

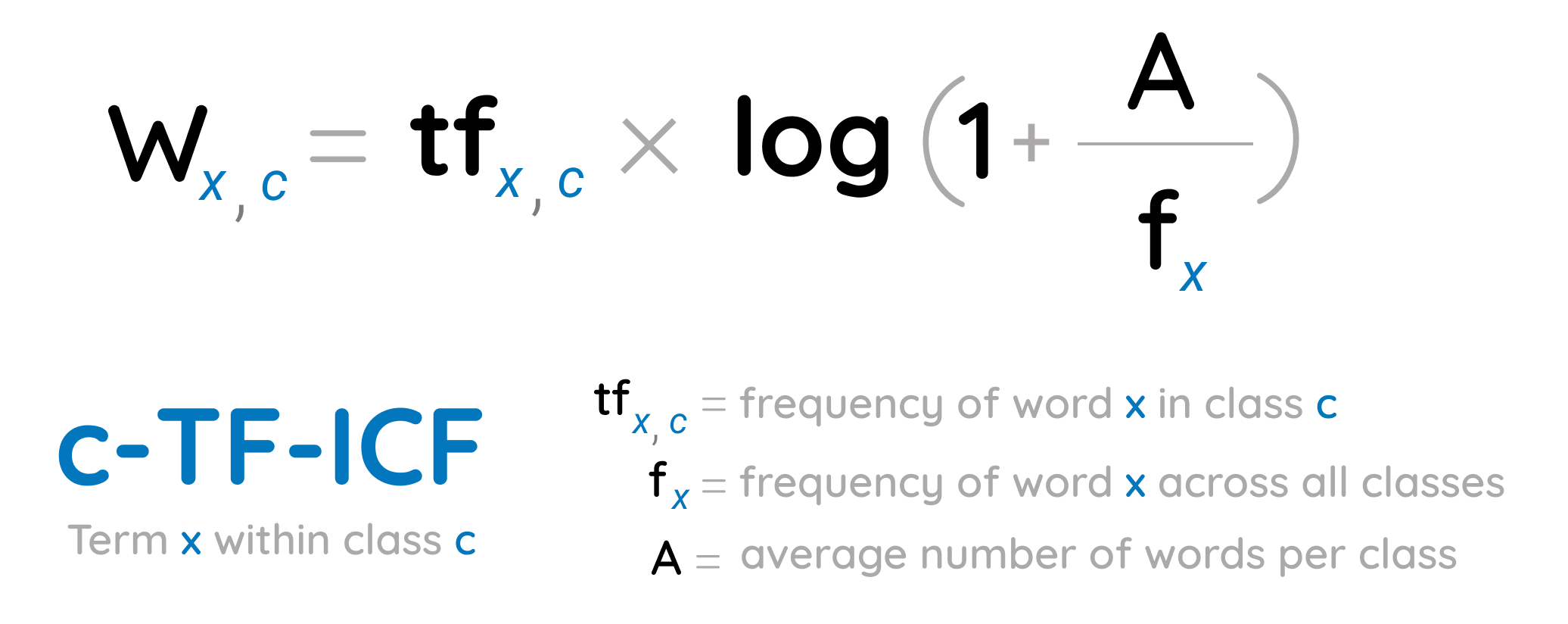

✔ TF-IDF

- 개별 문서에서 자주 나타나는 단어에는 높은 가중치를 주되, 모든 문서에서 전반적으로 자주 나타나는 범용적인 단어에 대해서는 페널티를 주는 방식으로 값을 부여하는 방법이다.

- 문서마다 텍스트가 길고, 문서의 개수가 많은 경우 카운트 방식보다 더 좋은 예측 성능을 보장할 수 있다.

from sklearn.feature_extraction.text import TfidfVectorizer

corpus = [

'you know I want your love',

'I like you',

'what should I do ',

]

tfidfv = TfidfVectorizer().fit(corpus)

print(tfidfv.transform(corpus).toarray())

print(tfidfv.vocabulary_)

## 📌 결과 ##

[[0. 0.46735098 0. 0.46735098 0. 0.46735098 0. 0.35543247 0.46735098]

[0. 0. 0.79596054 0. 0. 0. 0. 0.60534851 0. ]

[0.57735027 0. 0. 0. 0.57735027 0. 0.57735027 0. 0. ]]

{'you': 7, 'know': 1, 'want': 5, 'your': 8, 'love': 3, 'like': 2, 'what': 6, 'should': 4, 'do': 0}

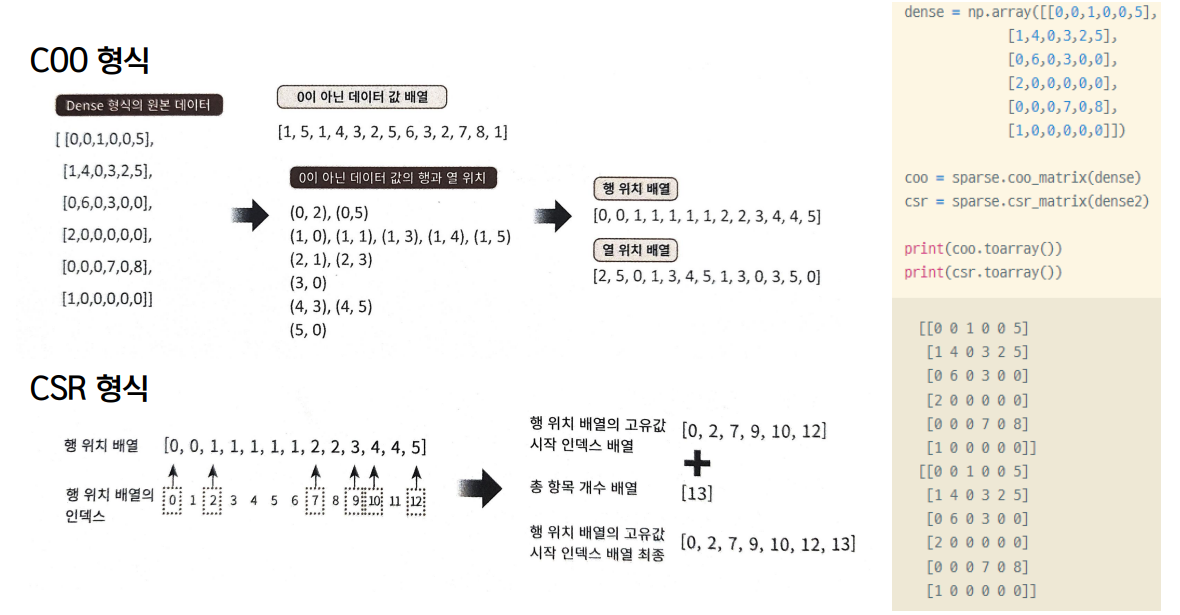

💡 BoW 벡터화를 위한 희소 행렬

- 희소행렬은 너무 많은 불필요한 0 값이 메모리 공간에 할당되어 메모리 공간이 많이 필요하고, 행렬의 크기가 커서 연산 시에도 데이터 엑세스를 위한 시간이 많이 소모된다.

- 희소행렬을 물리적으로 적은 메모리 공간을 차지하도록 변환하는 방법 : COO 형식 , CSR 형식

- 일반적으로 CSR 형식이 더 수행 능력이 뛰어나기 때문에 COO보단 CSR 를 활용한다.

✔ 희소행렬

✔ COO

- 0이 아닌 데이터만 별도의 배열에 저장하고 그 데이터가 가리키는 행과 열의 위치를 별도의 배열로 저장하는 방식

✔ CSR

- COO 형식이 행과 열의 위치를 나타내기 위해 반복적인 위치 데이터를 사용해야 하는 문제점을 해결한 방식

from scipy import sparse

sparse.coo_matrix(data)

sparse.csr_matrix(data)

4️⃣ 텍스트 분류 실습

📌 뉴스 그룹 분류 실습

💡 텍스트 분류

✔ 특정 문서의 분류를 학습 데이터를 통해 학습해 모델을 생성한 뒤 , 이 학습 모델을 이용해 다른 문서의 분류를 예측

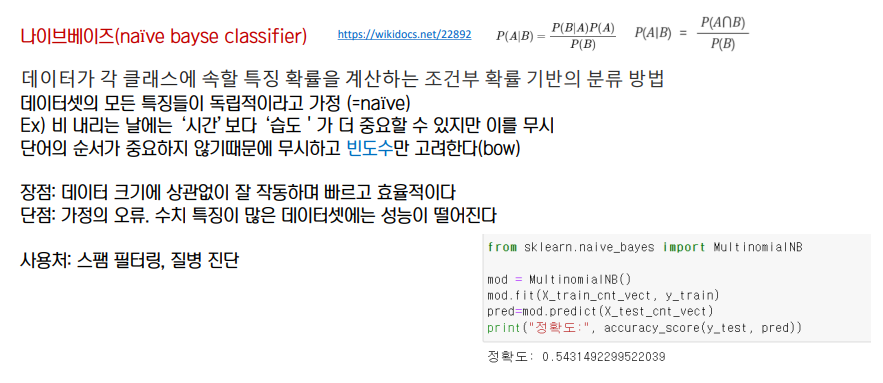

✔ 희소행렬에 분류를 효과적으로 수행할 수 있는 알고리즘

- 로지스틱회귀

- SVM

- 나이브 베이즈

🏃♀️ Countvectorizer , TfidfVectorizer

🏃♀️ model : 로지스틱 회귀

🏃♀️ 하이퍼 파라미터 튜닝 : GridSearchCV, Pipeline

5️⃣ 감성분석

👀 개요

💡 감성분석

✔ 문서의 주관적인 감성/의견/감정/기분 등을 파악하기 위한 방법

✔ 소셜미디어, 여론조사, 온라인 리뷰, 피드백 등 다양한 분야에서 활용됨

✔ 텍스트의 단어와 문맥 👉 감성 수치를 계산 👉 긍정 감성 지수와 부정 감성 지수

지도학습

- 학습 데이터 + 타깃 레이블 값

- 텍스트 분류와 유사함

비지도학습

- Lexicon 이라는 일종의 감성 어휘 사전을 이용한다. (한글 지원 X)

- 감성 어휘 사전을 이용해 문서의 긍정적, 부정적 감성 여부를 판단한다.

- 보통 많은 감성 분석용 데이터는 결정된 레이블 값을 가지고 있지 않다.

💨 Polarity score 감성 지수

- 감성사전은 긍정 또는 부정 감성의 정도를 의미하는 수치를 가지고 있다.

- 감성지수는 단어의 위치나 주변 단어, 문맥, POS (명사/형용사/동사 등의 문법 요소 태깅) 등을 참고해 결정된다.

- NLTK 의 Lexicon 모듈

💡 감성사전

💨 WordNet

- NLP 패키지에서 제공하는 방대한 "시맨틱 분석"을 제공하는 영어 어휘 사전

- 시맨틱 semantic : 문맥상의 의미 → 동일한 단어나 문장이라도 다른 환경과 문맥에서는 다르게 표현되거나 이해될 수 있다.

- WordNet 은 다양한 상황에서 같은 어휘라도 다르게 사용되는 어휘의 시맨틱 정보를 제공한다 👉 품사로 구성된 개별 단어를 Synset 이라는 개념을 이용해 표현한다.

- NLTK 에서 제공하는 감성사전의 예측성능은 그리 좋진 않다. 실제 업무 적용에서는 NLTK 패키지가 아닌 다른 감성 사전을 적용하는 것이 일반적이다.

📑 대표적인 감성사전

- NLTK 의 WordNet

- SentiWordNet : 감성 단어 전용의 WordNet 을 구현한 것

- VADER : 소셜 미디어의 텍스트에 대한 감성분석을 제공하기 위한 패키지

- Pattern : 예측성능에서 가장 주목받는 패키지. 파이썬 2.X 버전에서만 동작한다.

➕ 한글 감성 어휘 사전

◾ KNU : http://dilab.kunsan.ac.kr/knusl.html

◾ KOSAC : http://word.snu.ac.kr/kosac/ , https://github.com/mrlee23/KoreanSentimentAnalyzer

📌 실습

|

from nltk.corpus import wordnet as wn

|

|

from nltk.corpus import sentiwordnet as swn

|

| from nltk.sentiment.vader import SentimentIntensityAnalyzer |

💨 지도학습

텍스트 클렌징(정규표현식) → train/test dataset → 텍스트 벡터화 (Count, TF-IDF) + 분류 모델링 (로지스틱회귀) by Pipeline

💨 비지도학습

🏃♀️ WordNet

from nltk.corpus import wordnet as wn

term = 'present'

synsets = wn.synsets(term)

print('반환 type' , type(synsets))

print('반환 값 개수', len(synsets))

print('반환 값', synsets) #리스트 형태로 반환

###########결과##################

반환 type <class 'list'>

반환 값 개수 18

반환 값 [Synset('present.n.01'), Synset('present.n.02'), Synset('present.n.03'), Synset('show.v.01'), Synset('present.v.02'), Synset('stage.v.01'), Synset('present.v.04'), Synset('present.v.05'), Synset('award.v.01'), Synset('give.v.08'), Synset('deliver.v.01'), Synset('introduce.v.01'), Synset('portray.v.04'), Synset('confront.v.03'), Synset('present.v.12'), Synset('salute.v.06'), Synset('present.a.01'), Synset('present.a.02')]

◾ present.n.01

- n : 명사

- 01 : present 가 명사로서 가지는 여러 의미를 구분하기 위한 인덱스

◾ synset : 하나의 단어가 가질 수 있는 여러가지 시맨틱 정보를 개별 클래스로 나타낸 것

print(synsets[0].name())

print(synsets[0].lexname()) # POS

print(synsets[0].definition()) # 정의

print(synsets[0].lemma_names()) # 부명제

##################결과#################

present.n.01

noun.time

the period of time that is happening now; any continuous stretch of time including the moment of speech

['present', 'nowadays']

◾ WordNet 은 어떤 어휘와 다른 어휘 간의 관계를 유사도로 나타낼 수 있다 : path_similarity()

🏃♀️ SentiWordNet

from nltk.corpus import sentiwordnet as swn

senti_synsets = list(swn.senti_synsets('slow'))

print(type(senti_synsets))

print(len(senti_synsets))

print(senti_synsets)

#############결과################

<class 'list'>

11

[SentiSynset('decelerate.v.01'), SentiSynset('slow.v.02'), SentiSynset('slow.v.03'), SentiSynset('slow.a.01'), SentiSynset('slow.a.02'), SentiSynset('dense.s.04'), SentiSynset('slow.a.04'), SentiSynset('boring.s.01'), SentiSynset('dull.s.08'), SentiSynset('slowly.r.01'), SentiSynset('behind.r.03')]

◾ SentiSynset 객체는 단어의 감성을 나타내는 감성지수와 객관성 (감성과 반대) 를 나타내는 객관성 지수를 나타내고 있다. 어떤 단어가 전혀 감성적이지 않으면 객관성 지수는 1이 되고, 감성 지수는 모두 0이 된다.

father = swn.senti_synset('father.n.01')

print(father.pos_score())

print(father.neg_score())

print(father.obj_score())

#########결과##########

0.0

0.0

1.0

fabulous = swn.senti_synset('fabulous.a.01') #굉장한 이라는 뜻을 가지고 있음

print(fabulous.pos_score())

print(fabulous.neg_score())

print(fabulous.obj_score())

##############결과###################

0.875

0.125

0.0

◾ IMDB 감성평 감성 분석 (코랩 노트북 참고)

(1) 문서를 문장 단위로 분해

(2) 문장을 단어 단위로 토큰화하고 품사태깅

👉 (1), (2) 번은 WordNet 을 이용

(3) 품사 태깅된 단어 기반으로 synset 객체와 senti_synset 객체를 생성

(4) Senti_synsets 에서 감정 긍정/부정 지수를 구하고 이를 모두 합산해 특정 임계치 값 이상일 때 긍정감성으로, 그렇지 않으면 부정 감성으로 분류

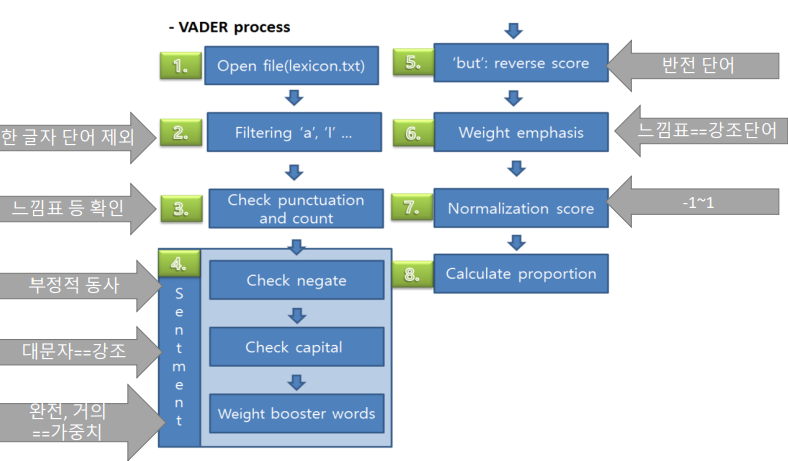

🏃♀️ Vader

◽ 소셜 미디어의 감성분석 목적

from nltk.sentiment.vader import SentimentIntensityAnalyzer

senti_analyzer = SentimentIntensityAnalyzer()

senti_scores = senti_analyzer.polarity_scores(review_df['review'][0]) # IMDB 리뷰 하나만 가지고 감성분석 수행해보기

print(senti_scores)

########결과##########

{'neg': 0.13, 'neu': 0.743, 'pos': 0.127, 'compound': -0.7943}

- polarity_scores() 메서드를 호출해 감성 점수를 구한다. 단어의 위치, 주변단어, 문맥, 품사 POS 등을 참고해 결정된다.

- neg 부정, neu 중립, pos 긍정지수

- compound : neg, neu, pos 를 적절히 조합해 -1 에서 1 사이의 감성 지수를 표현한 값이다. 이 값을 기반으로 긍부정 여부를 결정한다. 보통 0.1 이상이면 긍정으로, 그 이하면 부정으로 판단하는데, 상황에 따라 임계값을 적절히 조정해 예측 성능을 조절할 수 있다.

def vader_polarity(review,threshold=0.1):

analyzer = SentimentIntensityAnalyzer()

scores = analyzer.polarity_scores(review)

# compound 값에 기반하여 threshold 입력값보다 크면 1, 그렇지 않으면 0을 반환

agg_score = scores['compound']

final_sentiment = 1 if agg_score >= threshold else 0

return final_sentiment

# apply lambda 식을 이용하여 레코드별로 vader_polarity( )를 수행하고 결과를 'vader_preds'에 저장

review_df['vader_preds'] = review_df['review'].apply( lambda x : vader_polarity(x, 0.1) )

y_target = review_df['sentiment'].values

vader_preds = review_df['vader_preds'].values

print('#### VADER 예측 성능 평가 ####')

get_clf_eval(y_target, vader_preds)

# 정확도가 SentiWordNet 보다 향상됨 (재현율이 특히 향상됨)

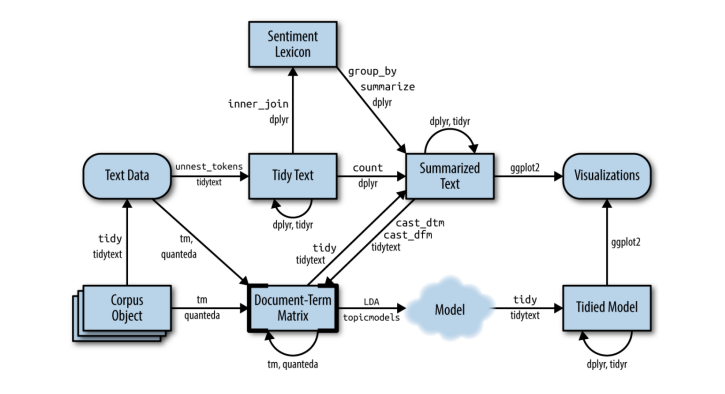

6️⃣ 토픽모델링 - 뉴스그룹

👀 개요

💡 토픽 모델링

✔ 문서 집합에 숨어있는 주제를 찾아내는 것 (비지도학습)

✔ 머신러닝 기반의 토픽 모델링은 숨겨진 주제를 효과적으로 표현할 수 있는 중심 단어를 함축적으로 추출한다.

✔ LSA (Latent semantic analysis) 와 LDA 기법이 자주 사용된다.

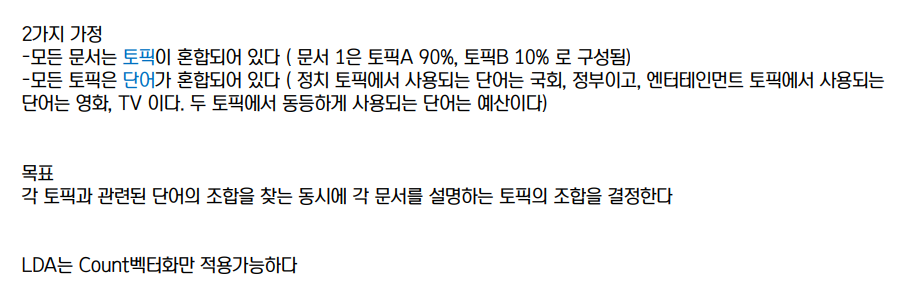

💡 LDA

✔ Latent Dirichlet Allocation

* 차원축소의 LDA 와 약어는 같지만 서로 다른 알고리즘임에 주의할 것

- 사이킷런 LatentDiricheltAllocation 모듈 지원

- LDA 는 Count 기반의 벡터화만 사용한다.

|

from sklearn.decomposition import LatentDirichletAllocation

|

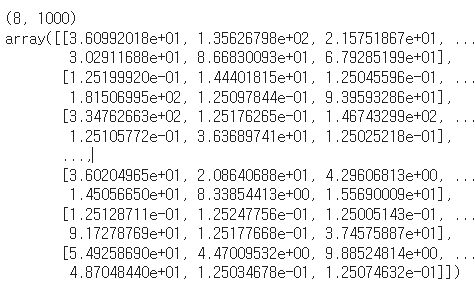

◽ components_ : 개별 토픽별로 각 word 가 얼마나 많이 할당되었는지에 관한 수치를 가진다. 이 값이 높을수록 해당 토픽의 중심 단어가 된다.

lda = LatentDirichletAllocation(n_components=8, random_state=0)

# ⭐ n_components : 토픽 개수

lda.fit(feat_vect)

# feat_vect : 단어 벡터 행렬

print(lda.components_.shape)

lda.components_ # 개별 토픽 (8개) 별로 각 word 피처가 얼마나 그 토픽에 할당되었는지에 대한 수치를 가지고 있다.

# 👉 높은 갚일수록 word 피처는 그 토픽의 중심 단어가 된다.

◽ 8개의 토픽 주제, 1000개의 단어

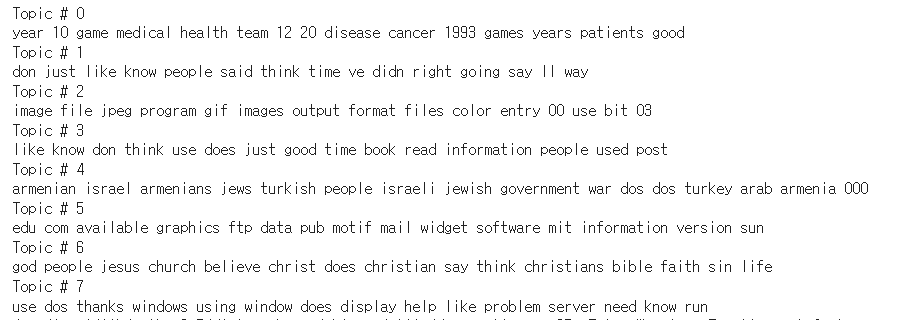

def display_topics(model, feature_names, no_top_words):

for topic_index, topic in enumerate(model.components_):

print('Topic #',topic_index)

# topic_index : #0 ~ #7

# topic : 각 토픽 인덱스에 해당하는 1000개 단어 값

# components_ array에서 가장 값이 큰 순으로 정렬했을 때, 그 값의 array index를 반환.

topic_word_indexes = topic.argsort()[::-1]

top_indexes=topic_word_indexes[:no_top_words]

# top_indexes대상인 index별로 feature_names에 해당하는 word feature 추출 후 join으로 concat

feature_concat = ' '.join([feature_names[i] for i in top_indexes])

print(feature_concat)

# CountVectorizer객체내의 전체 word들의 명칭을 get_features_names( )를 통해 추출

feature_names = count_vect.get_feature_names()

# Topic별 가장 연관도가 높은 word를 15개만 추출

display_topics(lda, feature_names, 15)

# 일반적인 단어가 주를 이루는 경우의 토픽도 있고, topic #2 처럼 컴퓨터 그래픽스와 관련된 주제어가 추출된 토픽도 존재한다.

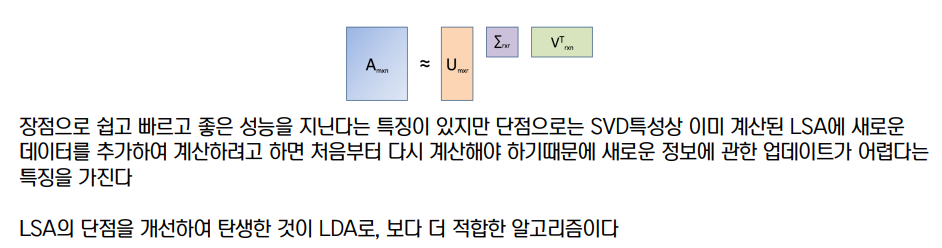

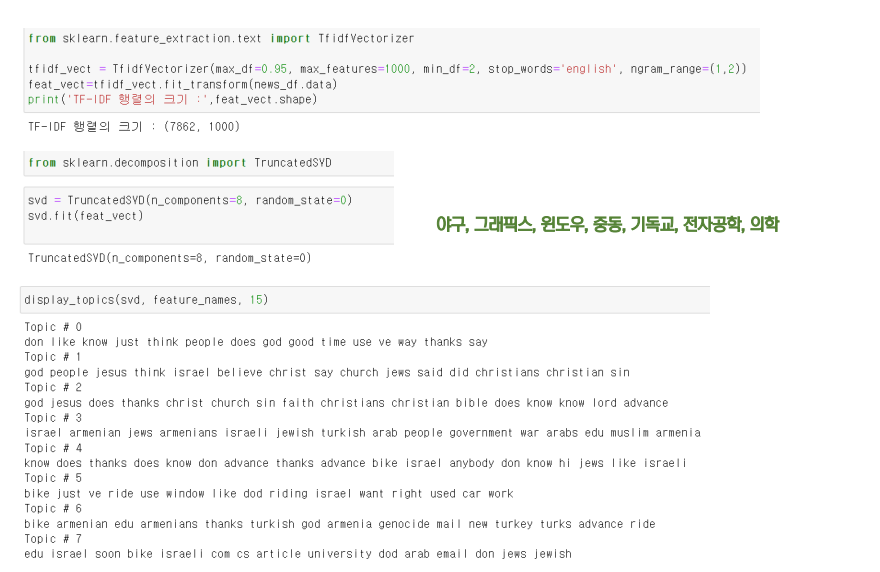

💡 LSA

✔ Latent Semantic Analysis

- BOW 기반의 임베딩은 단어의 빈도수를 이용하지만 단어의 의미는 고려하지 못한다는 단점이 있다.

- 이의 대안으로 Truncated SVD 를 사용하여 차원을 축소시키고 단어의 잠재된 의미를 끌어내는 방법이다.

'1️⃣ AI•DS > 📗 NLP' 카테고리의 다른 글

| [cs224n] 11강 내용 정리 (1) | 2022.05.19 |

|---|---|

| 텍스트 분석 ② (0) | 2022.05.17 |

| [cs224n] 10강 내용 정리 (0) | 2022.05.13 |

| [cs224n] 9강 내용 정리 (0) | 2022.05.09 |

| [cs224n] 8강 내용 정리 (0) | 2022.05.09 |

댓글