💡 Topic : Question Answering

📌 Key points

- Task : QA (question answering), reading comprehension, open-domain QA

- SQuAD dataset

- BiDAF, BERT

1️⃣ Introduction

1. Motivation : QA

✔ Difference between QA and IR systems

◽ IR = information retrieval

💨 QA : Query (specific) → Answer : find the answer within documents

ex. What is the capital of South Korea? - Seoul

💨 IR : Query (general) → Document list : find documents that contain the answer

ex. How do I make kimchi fried rice? - a list of YouTube videos, a list of blog posts

👉 Because so much information is now accessed through smartphones and AI speakers, QA models that answer a question directly are becoming more important.

Goal : build a system that can automatically "answer" a "question" posed in human language

✔ QA 2 step

1. Finding documents that contain an answer

💨 Find documents that are likely to contain the answer : related to traditional IR / web search

2. Finding an answer in the documents

💨 Find the answer within the retrieved documents : related to machine reading comprehension

💨 Most state-of-the-art question answering systems are built on top of end-to-end training and pre-trained language models.

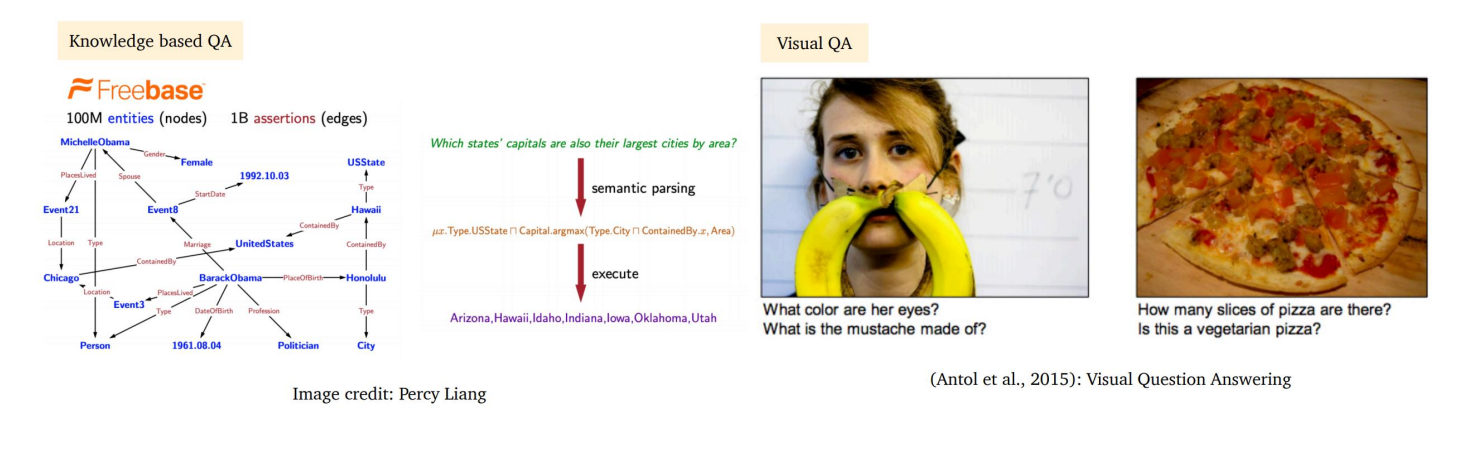

✔ Beyond textual QA problems

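💨 Today, answering questions based on unstructured text receives the most attention, but QA over other sources also exists.

◽ Knowledge based QA : QA built over a large database (knowledge base)

◽ Visual QA : QA grounded in images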

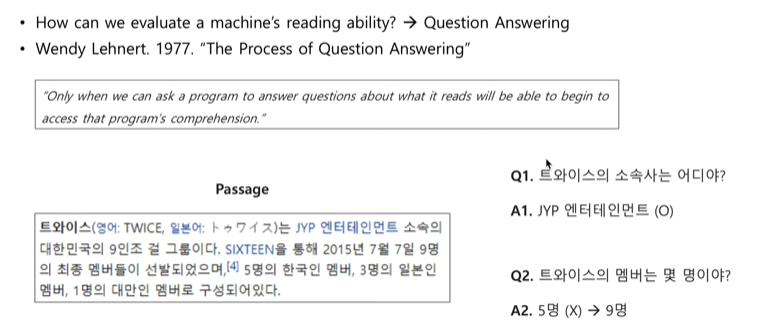

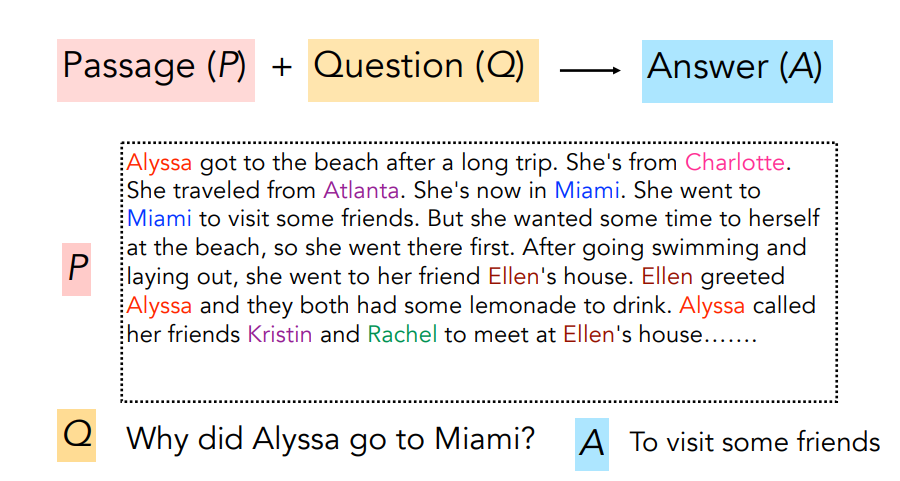

2. Machine Reading comprehension

✔ Definition

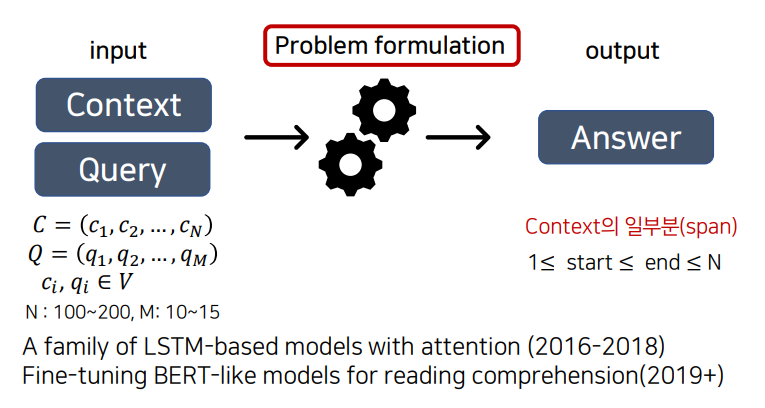

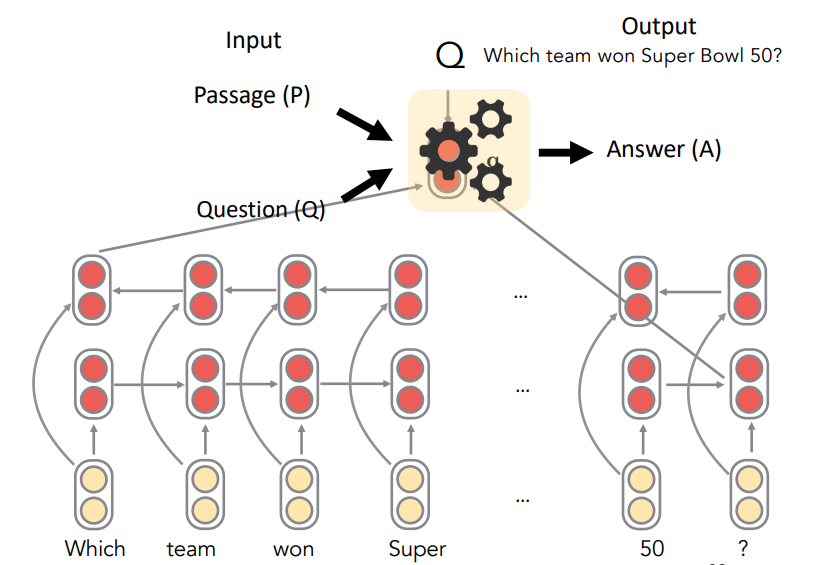

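(P, Q) 👉 A : understand a passage of text and answer a question about its content

- A task for making a machine correctly understand a given document

- Given a passage and a question, the machine's reading ability is measured by checking whether it outputs the correct answer.

- Many other NLP tasks can also be reduced to a reading comprehension problem.

📑 Answer 1 → the machine can be considered to have understood the question/passage

📑 Answer 2 → the machine cannot be considered to have understood the question/passage

◽ Before 2015, there were hardly any large-scale datasets (passage, question, answer) or NLP systems suited to MRC.

◽ Since 2015, datasets and NLP systems for MRC have kept appearing and improving.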

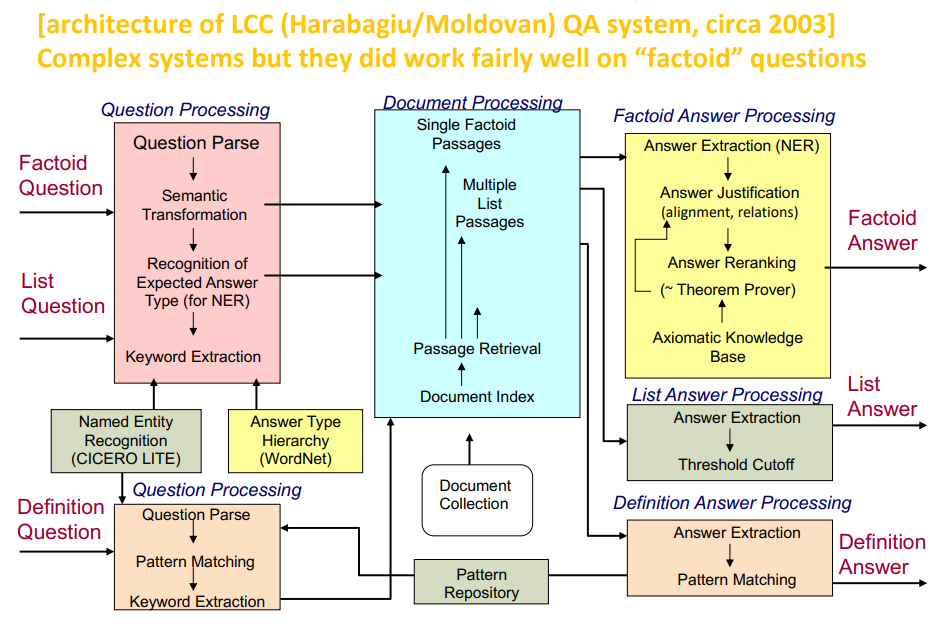

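✔ History

- 2013, Machine comprehension : using the MCTest corpus, the problem of finding an answer that is stated verbatim in the passage

◽ Passage (P) : Document, Context

◽ Answer (A) : Extractive QA, Sub-sequence

◽ The answer always consists of a sub-sequence (span) of the passage.

◽ Earlier QA models were mostly NER-based, so they required a lot of manual work and had complicated structures.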

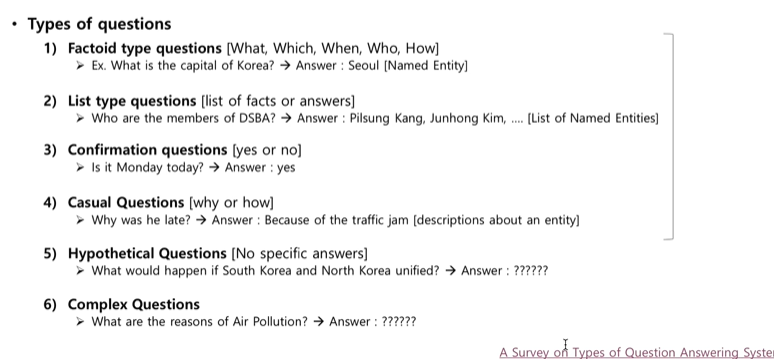

✔ Question types

✔ MT vs MRC

| Machine Translation | Reading Comprehension |
|---|---|
| Source sentence, target sentence | Passage, question |
| Autoregressive decoder : generates the target sentence word by word | Two classifiers : predict only the start and end positions of the answer |
| Which source words are most relevant to the current target word? | Which passage words are most relevant to the question words? |

⭐ Attention is the key in both NLP tasks!

3. SQuAD dataset

✔ Definition

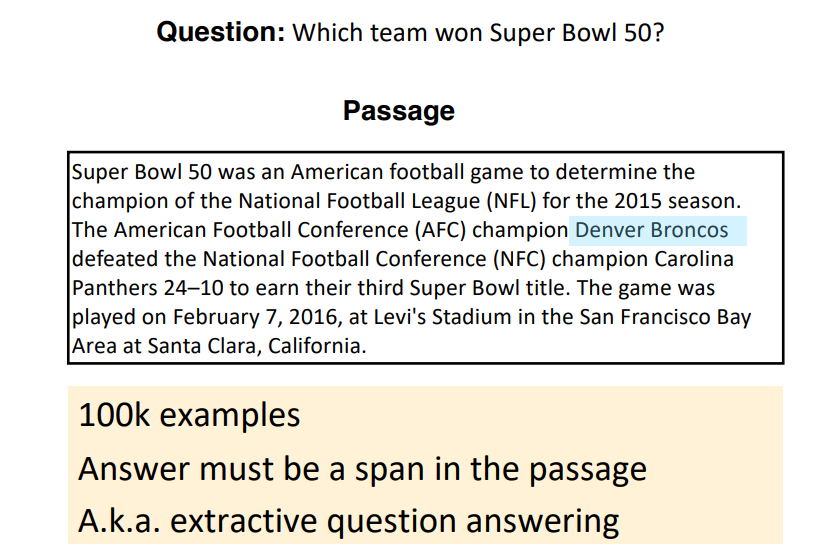

◽ Stanford Question Answering Dataset 👉 large scale supervised dataset

◽ Contains more than 100k question-answer pairs; every answer must be a span of the passage (a phrase that literally appears in it).

◽ SQuAD was constructed very carefully, contributed greatly to the QA task, and is still widely used.

- Passage : Wikipedia paragraphs of about 100 to 150 words (also called paragraph or context) 👉 the answer is inside the passage

- Question : written through crowdsourcing - workers read a paragraph and write questions with matching answers (also called query)

- Answer : three candidate gold answers are provided

- The query is shorter than the context (context of N tokens > query of M tokens)

- Two families of neural models for SQuAD : until 2018, LSTM-based attention models dominated; from 2019 on, the approach shifted to fine-tuning pre-trained models such as BERT.

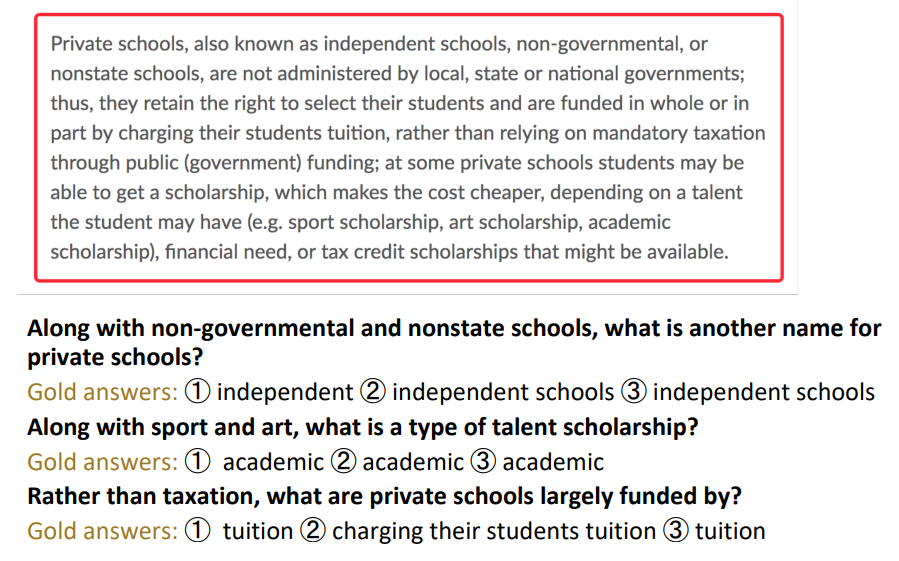

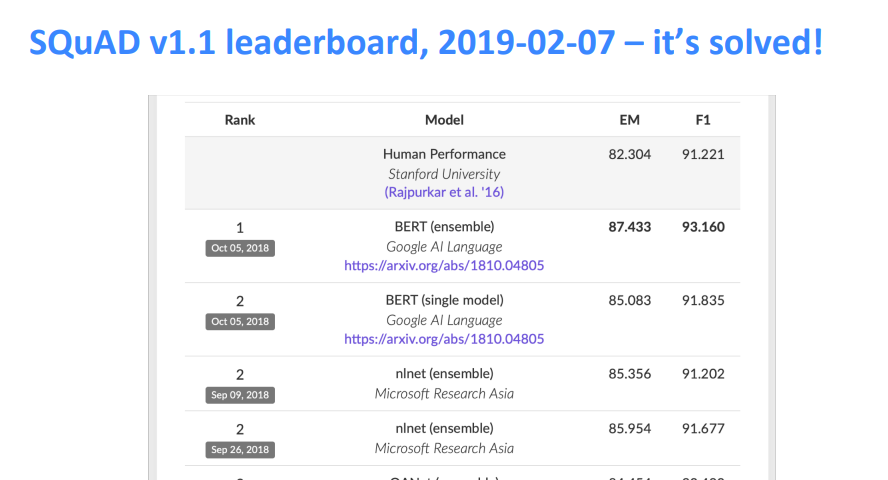

✔ version 1.1

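◽ 3 gold answers : answers are collected from three people so that variations in how an answer is phrased are handled

◽ Evaluation metrics

- Exact Match : 1 if the prediction matches one of the 3 (human-written) answers, otherwise 0 (binary accuracy) 👉 because the human answers themselves vary, the F1 score is also computed as a more reliable metric

- F1 : compute the word-level F1 score against each of the 3 answers, take the max as the per-question F1, then macro-average over all questions

- Punctuation and the articles a, an, the are ignored.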

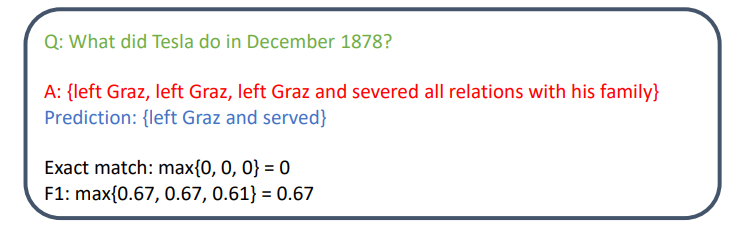

✔ Metric computation example

(1) Compare the predicted answer with each gold answer, after removing a, an, the and punctuation.

(2) Take the max score over the gold answers.

(3) Average EM and F1 over all examples.
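The three steps above can be sketched in plain Python. This is a minimal illustration and not the official SQuAD evaluation script; the function names and the example strings are assumptions chosen for the sketch.

```python
import re
import string
from collections import Counter


def normalize(text):
    """Lowercase, drop punctuation and the articles a/an/the, collapse spaces."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, gold):
    return int(normalize(prediction) == normalize(gold))


def f1(prediction, gold):
    """Word-level F1 between one prediction and one gold answer."""
    pred_tokens = normalize(prediction).split()
    gold_tokens = normalize(gold).split()
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


def score_question(prediction, gold_answers):
    """Step (2): keep the best EM and the best F1 over the gold answers."""
    em = max(exact_match(prediction, g) for g in gold_answers)
    best_f1 = max(f1(prediction, g) for g in gold_answers)
    return em, best_f1


def score_dataset(predictions, references):
    """Step (3): average the per-question scores over all examples."""
    scores = [score_question(p, golds) for p, golds in zip(predictions, references)]
    n = len(scores)
    return sum(em for em, _ in scores) / n, sum(f for _, f in scores) / n


golds = ["left Graz", "left Graz",
         "left Graz and severed all relations with his family"]
em, best_f1 = score_question("left Graz and severed", golds)
print(em, round(best_f1, 2))  # 0 0.67
```

The prediction shares 2 of its 4 tokens with "left Graz" (precision 0.5, recall 1.0), which gives F1 = 0.67 and beats the 0.62 obtained against the longest gold answer.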

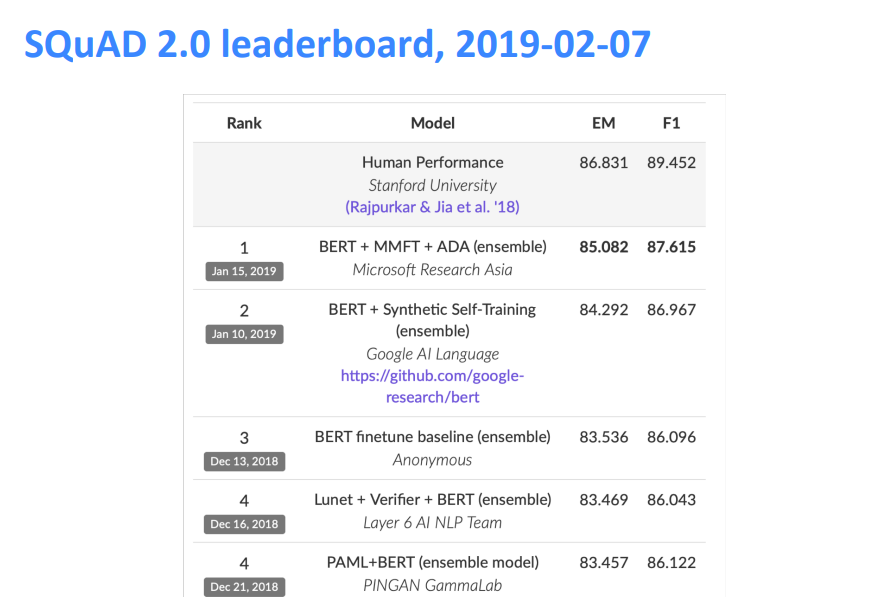

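✔ version 2.0 👉 unanswerable questions added

◽ In 1.1 every question has an answer (answerable), so a model can treat the task as simple ranking without understanding the passage (it only finds the span that looks closest to an answer).

◽ More than 50k new unanswerable questions were merged into v1.1 : in v2.0, about half of the dev/test examples have an answer in the passage and half do not.

◽ The unanswerable questions were written by people (crowdworkers) online, so their quality is high.

◽ In evaluation, a no-answer question counts as correct only when the model also predicts no answer 👉 set a threshold and withhold the predicted answer when its score falls below it.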
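The thresholding rule can be sketched as follows, assuming the model returns a score for every candidate span. The function name, the `(text, score)` candidate format, and the threshold value are all hypothetical; real systems usually tune the threshold on the dev set.

```python
def predict_with_threshold(candidates, threshold):
    """Return the best-scoring answer, or "" (no answer) if it scores too low.

    candidates: list of (answer_text, score) pairs (assumed format).
    """
    if not candidates:
        return ""
    best_text, best_score = max(candidates, key=lambda c: c[1])
    return best_text if best_score >= threshold else ""


confident = predict_with_threshold([("Seoul", 0.91), ("Busan", 0.05)], 0.5)
abstain = predict_with_threshold([("Seoul", 0.30), ("Busan", 0.25)], 0.5)
print(repr(confident), repr(abstain))  # 'Seoul' ''
```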

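✔ Limitations

👀 Solving SQuAD well does not mean a model is good at reading comprehension.

◽ Only span-based answers exist : yes/no, counting, and implicit "why" questions cannot be represented.

◽ Questions were written so that the answer is found within the passage

- There is no need to compare multiple documents to find the true answer

- Answers are easier to find than in the question-answer data met in practice

◽ Apart from coreference, there are almost no multi-fact or multi-sentence inference problems.

🤸 Even so, it is the well-structured, clean dataset that has been used most for QA models so far.

✔ KorQuAD 2.0

◽ Built from Korean Wikipedia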

2️⃣ QA models

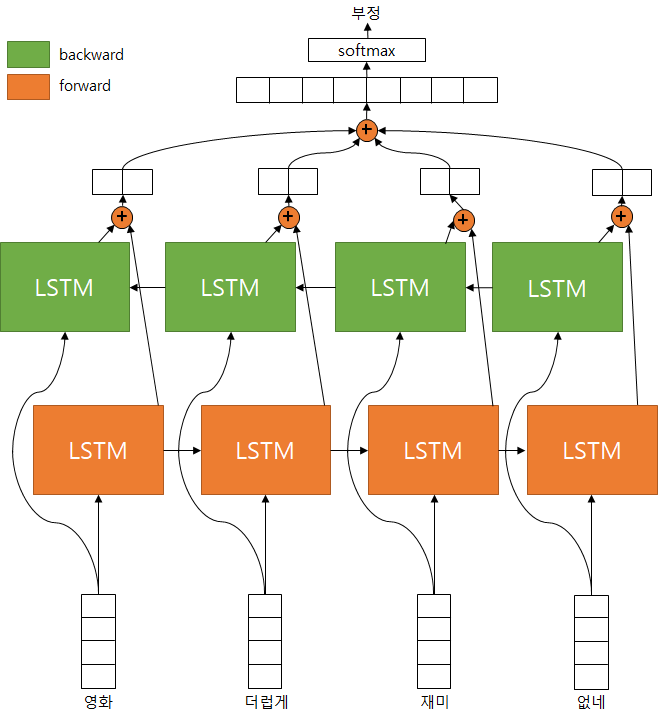

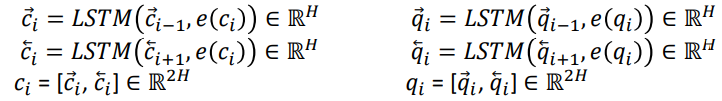

0. Bi-LSTM

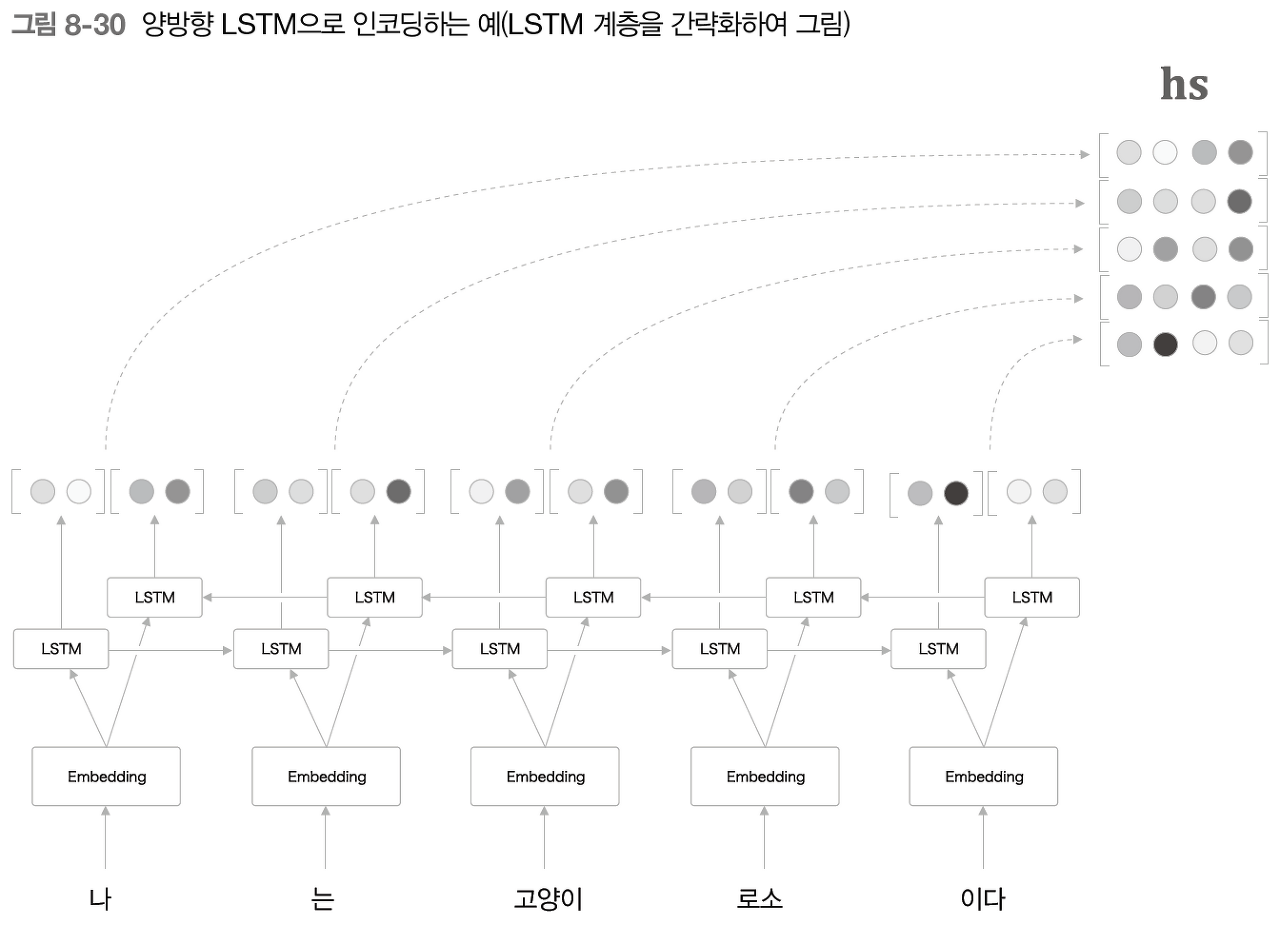

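👀 To capture the context around each word in a balanced way, the sequence is read in both directions. A bidirectional LSTM adds a second LSTM layer that processes the sequence in reverse alongside the usual forward layer.

◽ Orange blocks : forward LSTM, green blocks : backward LSTM

◽ Model input : a sequence that has already been tokenized and embedded 👉 it is fed to both the forward and the backward LSTM.

◽ The hidden states of the forward and backward LSTM cells are concatenated at each timestep, and the final output is computed from all the concatenated states.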
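The per-timestep concatenation can be sketched without any deep learning library. To keep the example runnable in plain Python, a toy running-sum cell stands in for the LSTM cell; `run_direction`, `bidirectional_encode`, and `toy_cell` are hypothetical names, and only the forward/backward bookkeeping is meant to carry over to a real Bi-LSTM.

```python
def run_direction(inputs, cell, h0):
    """Run a recurrent cell over the inputs and return the state at every step."""
    states, h = [], h0
    for x in inputs:
        h = cell(x, h)
        states.append(h)
    return states


def bidirectional_encode(inputs, fwd_cell, bwd_cell, h0):
    """Concatenate the forward and backward states at each timestep."""
    fwd = run_direction(inputs, fwd_cell, h0)
    # Run the backward cell on the reversed sequence, then flip its states
    # back so that bwd[t] lines up with position t of the input.
    bwd = run_direction(inputs[::-1], bwd_cell, h0)[::-1]
    return [f + b for f, b in zip(fwd, bwd)]


def toy_cell(x, h):
    """Toy stand-in for an LSTM cell (assumption): a running sum."""
    return [h[0] + x]


states = bidirectional_encode([1, 2, 3], toy_cell, toy_cell, [0])
print(states)  # [[1, 6], [3, 5], [6, 3]]
```

Each output vector holds what the forward pass has seen up to that position followed by what the backward pass has seen from the end down to it.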

1. Stanford attentive reader +

✔ Overview

◽ simplest neural question answering system

◽ Uses a Bi-LSTM and concatenates the final hidden states of the two directions to form the question vector.

✔ Model structure : 3 parts

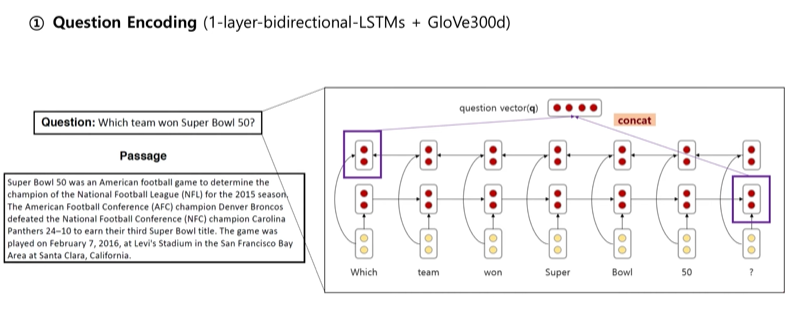

1️⃣ Building the question vector

(1) Use pre-trained GloVe embeddings

(2) Use a one-layer Bi-LSTM

(3) Concatenate the last hidden states of both directions

(4) Obtain the question vector

- Each word of the question is looked up in pre-trained 300-dimensional GloVe word embeddings and fed to a 1-layer bidirectional LSTM. The last hidden states of the two directions are concatenated to give a fixed-size question vector.

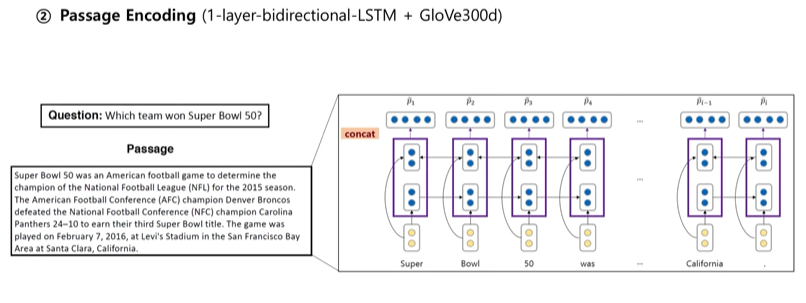

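2️⃣ Building the passage vectors

(1) Use pre-trained GloVe embeddings

(2) Use a one-layer Bi-LSTM

(3) Concatenate the hidden states of both directions at each position → one vector per passage word!

(4) Obtain the passage vectors

- The passage word vectors go through a Bi-LSTM in the same way, and the two directional hidden states at each word position are concatenated to form that word's passage vector.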

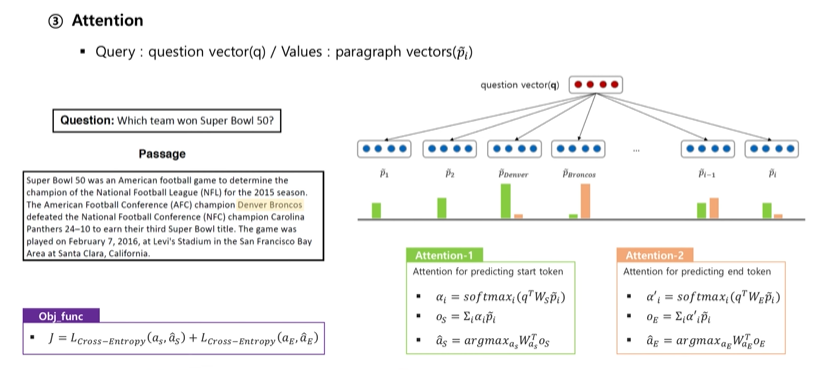

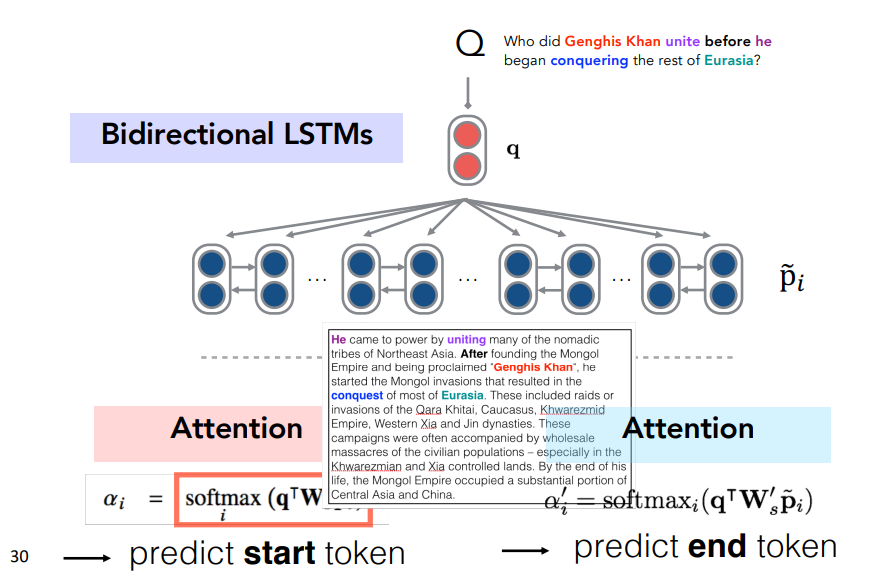

3️⃣ Applying attention

⭐ Predict where the answer starts and ends in the passage ⭐

(1) αi : apply attention between each passage vector pi and the single question vector q, then take a softmax over i

(2) Os : multiply each pi by αi and sum them all

(3) αs : apply a linear transform to Os (used to predict the start token)

💨 Attention : focus more on the parts of the input that are relevant to what is being predicted at that step

- Attention between the 1 question vector and the passage vectors pi is used to learn where the answer starts and ends in the passage.

- Multiplying the softmax weights αi by the passage vectors pi and summing gives the output vector Os; a linear transform of Os produces the prediction for the start token. The end token is predicted in the same way.

- The final objective is the sum of the start-token and end-token losses, and the model is trained to minimize it.
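The attention step can be sketched in plain Python with a bilinear score, αi = softmax_i(qᵀ W pi). Two points are assumptions of this sketch rather than something the notes state: the start and end positions are read directly from two attention distributions with separate weight matrices (the formulation used in the DrQA paper), and the extra linear transform on Os is omitted. The weight values are made up.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def bilinear_attention(q, W, passage):
    """alpha_i = softmax_i(q^T W p_i) over all passage vectors p_i."""
    # (q^T W)_k = sum_j q[j] * W[j][k]
    qW = [sum(q[j] * W[j][k] for j in range(len(q))) for k in range(len(W[0]))]
    scores = [sum(a * b for a, b in zip(qW, p)) for p in passage]
    return softmax(scores)


def weighted_sum(alpha, passage):
    """o = sum_i alpha_i * p_i (the output vector Os in the notes)."""
    dim = len(passage[0])
    return [sum(a * p[d] for a, p in zip(alpha, passage)) for d in range(dim)]


q = [1.0, 0.0]                                   # question vector
passage = [[0.0, 1.0], [2.0, 0.0], [1.0, 1.0]]   # one vector per passage word
W_start = [[1.0, 0.0], [0.0, 1.0]]               # hypothetical learned weights
W_end = [[0.5, 1.0], [0.0, 0.0]]

alpha_start = bilinear_attention(q, W_start, passage)
alpha_end = bilinear_attention(q, W_end, passage)
o_start = weighted_sum(alpha_start, passage)

start = max(range(len(passage)), key=lambda i: alpha_start[i])
end = max(range(len(passage)), key=lambda i: alpha_end[i])
print(start, end)  # 1 2
```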

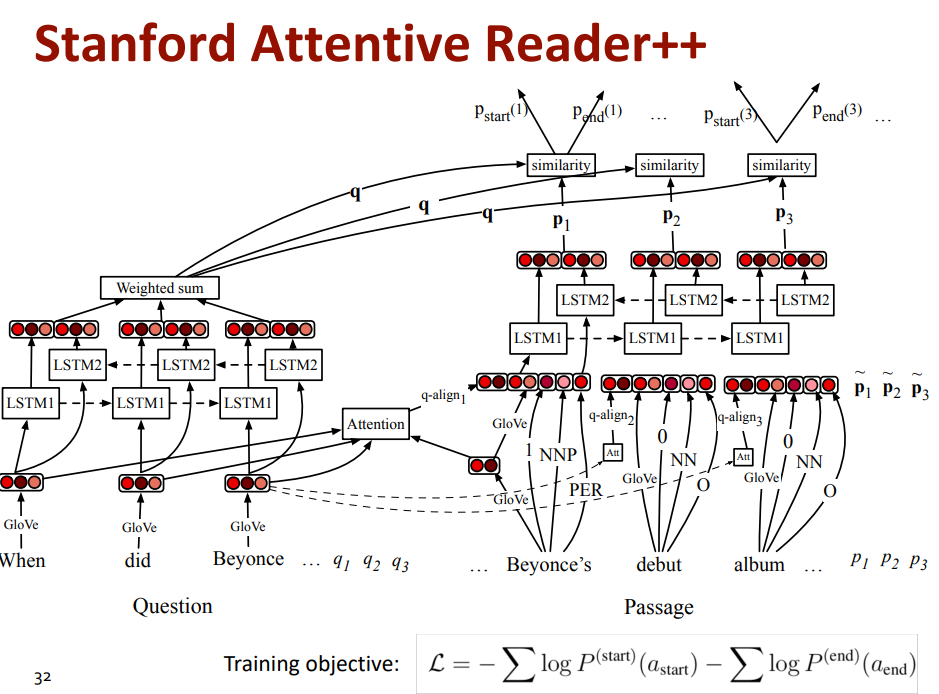

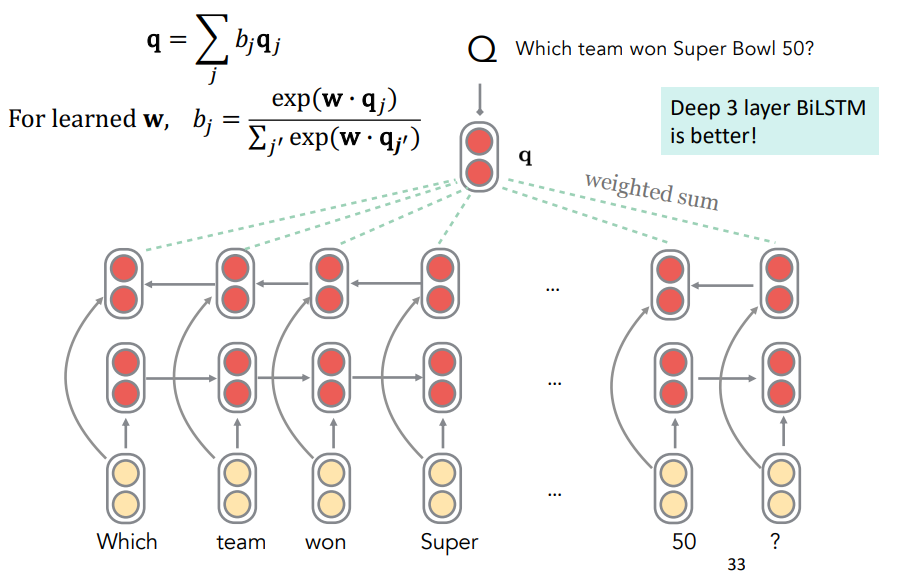

2. Stanford attentive reader ++ (DrQA)

✔ 모델 구조

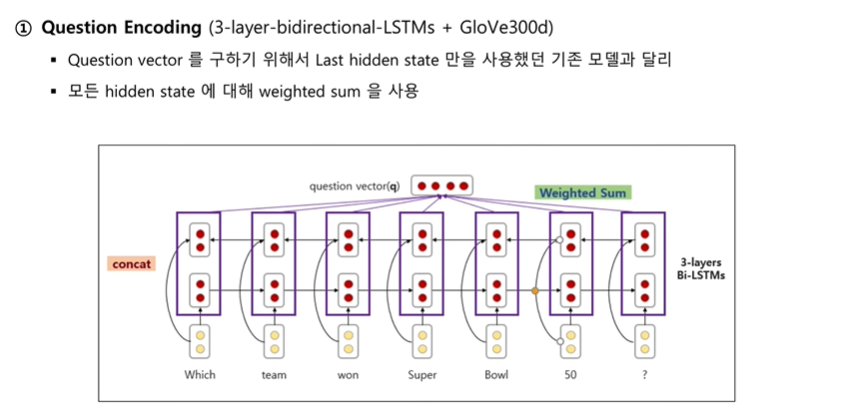

💨 3 layer Bi-LSTM

⭐ 이전에는 최종 hidden state 만 가져왔던 것을 지금은 question의 모든 단어 시점 hidden state 의 attention 을 구해서 그 가중합을 question vector 로 사용
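The weighted-sum question vector can be sketched as below, assuming the attention score of each hidden state is its dot product with a learned vector `w`. The function name and all numbers are hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def question_vector(hidden_states, w):
    """q = sum_j b_j * h_j with b_j = softmax_j(w . h_j)."""
    scores = [sum(wi * hi for wi, hi in zip(w, h)) for h in hidden_states]
    b = softmax(scores)
    dim = len(hidden_states[0])
    return [sum(bj * h[d] for bj, h in zip(b, hidden_states)) for d in range(dim)]


hidden_states = [[1.0, 0.0], [0.0, 1.0]]   # one Bi-LSTM state per question word
uniform = question_vector(hidden_states, [0.0, 0.0])
skewed = question_vector(hidden_states, [5.0, 0.0])
print(uniform)  # [0.5, 0.5]
```

With `w = [0, 0]` every word gets the same weight, so the result is the plain average; a non-zero `w` shifts the weight toward the words it scores highly.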

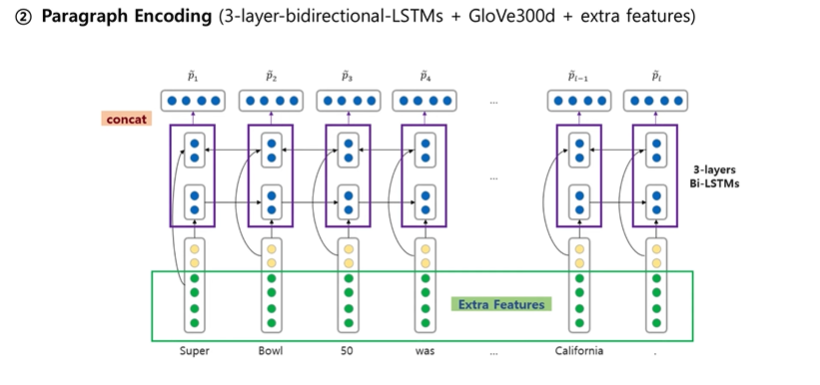

✔ Paragraph Encoding

◾ 3-layer Bi-LSTM

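◾ The input embedding is not just the GloVe vector; extra per-word features are appended 👉 one-hot vectors for linguistic features, namely the part-of-speech (POS) and NER tags

◾ Unigram probability added 👉 reflects term frequency

◾ EM (exact match) : features indicating whether the passage word appears in the question

◽ 3 binary features : exact (the original form matches), uncased (matches when case is ignored), lemma (matches after lemmatization)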

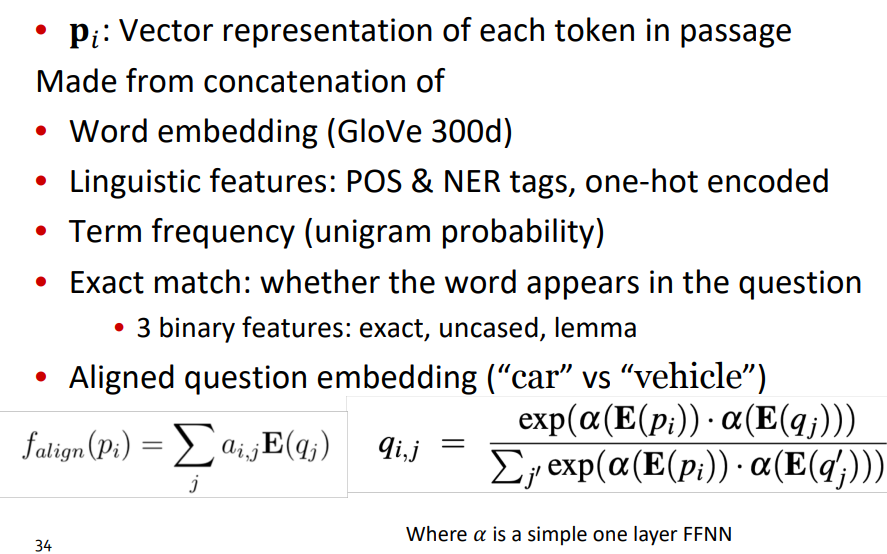

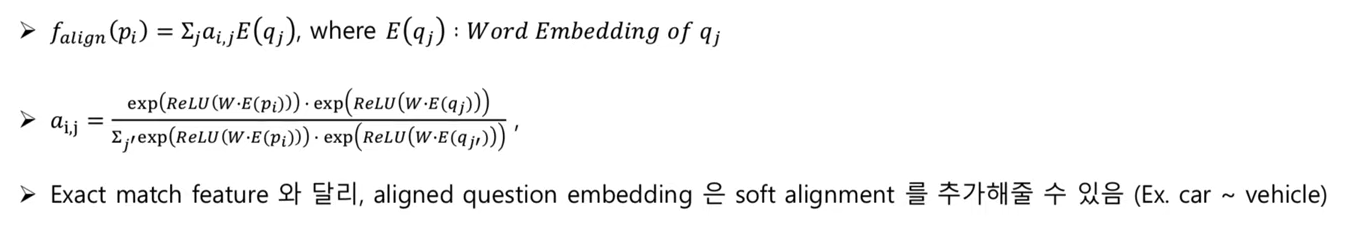
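The three exact-match features can be sketched as follows. `toy_lemmatize` is a hypothetical stand-in that only strips a trailing "s"; a real system would call a proper lemmatizer instead.

```python
def toy_lemmatize(token):
    """Hypothetical lemmatizer for illustration: lowercase, strip a plural 's'."""
    token = token.lower()
    return token[:-1] if token.endswith("s") and len(token) > 3 else token


def exact_match_features(passage_token, question_tokens, lemmatize=toy_lemmatize):
    """Return (exact, uncased, lemma) binary features for one passage word."""
    q_exact = set(question_tokens)
    q_lower = {t.lower() for t in question_tokens}
    q_lemma = {lemmatize(t) for t in question_tokens}
    return (
        int(passage_token in q_exact),
        int(passage_token.lower() in q_lower),
        int(lemmatize(passage_token) in q_lemma),
    )


question = ["which", "team", "won"]
print(exact_match_features("team", question))   # (1, 1, 1)
print(exact_match_features("Won", question))    # (0, 1, 1)
print(exact_match_features("Teams", question))  # (0, 0, 1)
```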

◾ Aligned question embedding

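◽ Captures the similarity between question words qj and paragraph words pi

◽ soft alignment : covers words that do not match exactly, even after lemmatization, but have similar meanings

◽ This feature adds soft alignment on top of the exact match features.

👉 The attention step that follows is the same as in SAR+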
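A minimal sketch of the aligned question embedding: each passage word receives a softmax-weighted average of the question word embeddings, weighted by embedding similarity. The `project` argument stands for the small learned projection applied before the dot product; here it defaults to the identity, which is an assumption made to keep the example self-contained.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def aligned_question_embedding(p_emb, question_embs, project=lambda v: v):
    """f_align(p_i) = sum_j a_ij * E(q_j), a_ij = softmax_j(proj(p_i) . proj(q_j))."""
    proj_p = project(p_emb)
    scores = [sum(x * y for x, y in zip(proj_p, project(q))) for q in question_embs]
    a = softmax(scores)
    dim = len(question_embs[0])
    return [sum(aj * q[d] for aj, q in zip(a, question_embs)) for d in range(dim)]


question_embs = [[1.0, 0.0], [0.0, 1.0]]   # made-up 2-d word embeddings
aligned = aligned_question_embedding([1.0, 0.0], question_embs)
print([round(x, 3) for x in aligned])  # [0.731, 0.269]
```

The passage word is closest to the first question word, so the aligned embedding leans toward it while still keeping some mass on the other word; this is what makes the alignment "soft".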

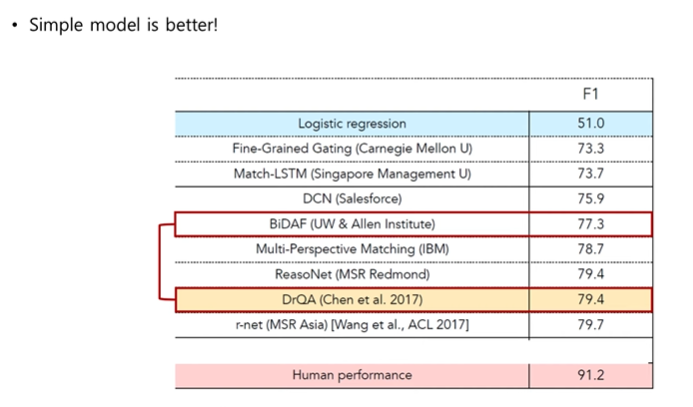

👀 A model that achieved high performance without a complex architecture such as BiDAF

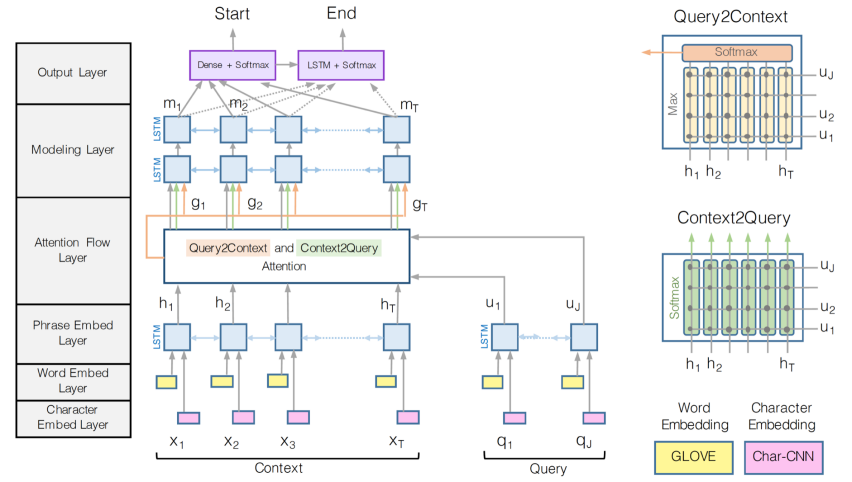

3. BiDAF

✔ Overview

👀 Bidirectional Attention Flow → one of the best-known reading comprehension models before BERT appeared

⭐ Attention flows in both directions (paragraph <-> question)

- The key idea is that the attention flow layer between the query (question) and the context (passage) operates bidirectionally

- Based on the similarity between each question word and each passage word

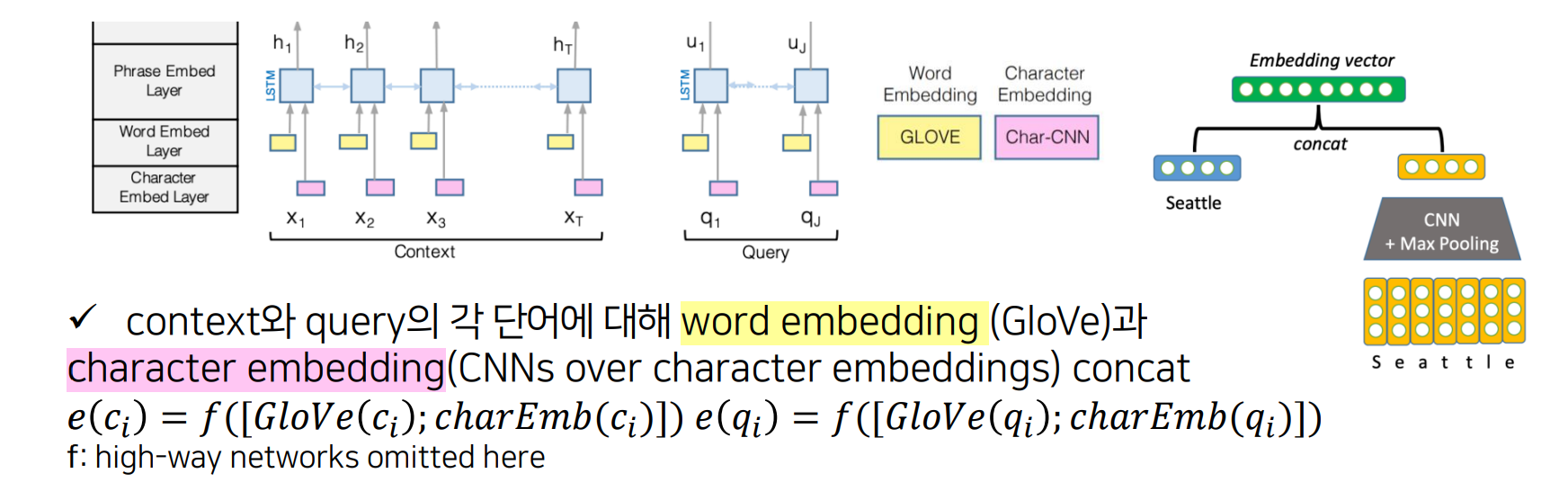

✔ model architecture

① Embedding layer

- Embed each word with GloVe to get a word vector + run a character-level CNN to get a fixed-size character vector 💨 Concatenate

- Pass the concatenated vector through a two-layer Highway Network (f), then feed it to a bidirectional LSTM.

- Concatenate the hidden states of both directions from the Bi-LSTM 💨 this gives the contextual embedding → the input to the attention layer

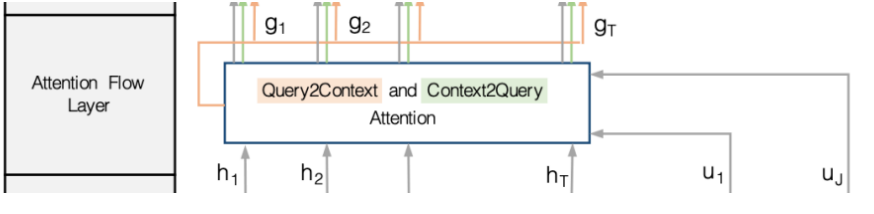

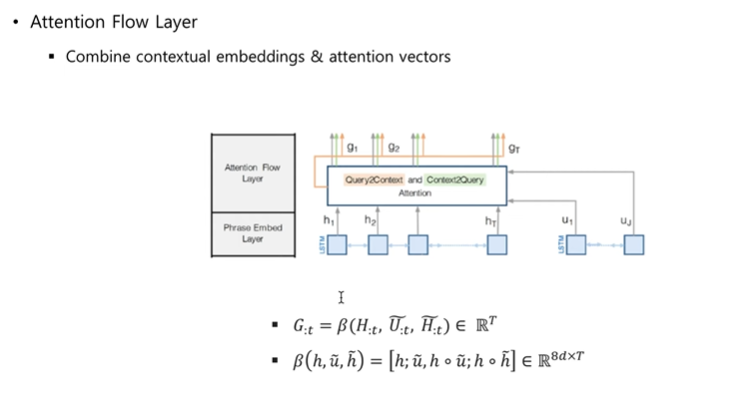

② Attention Flow layer

💨 input : contextual vector representations of the query and the context (ci, qj)

💨 output : query-aware vector representations of the context words (gi), together with the contextual embeddings from the previous layer

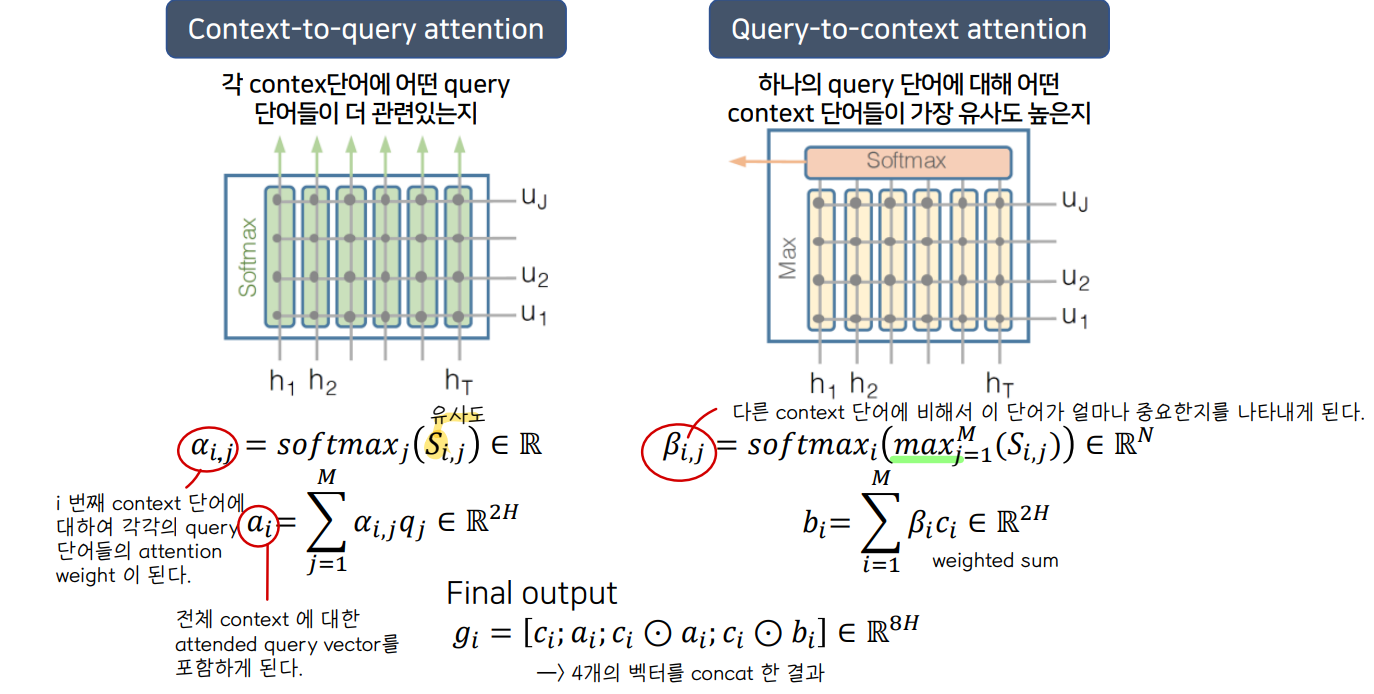

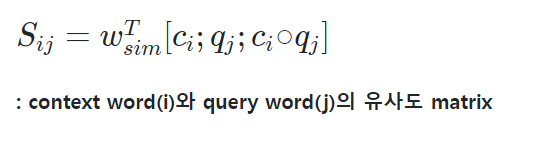

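- Compute a similarity score for every pair (ci, qj).

- A shared similarity matrix S is used to make attention in both directions possible.

- S is computed by a function α : Sij = α(ci, qj).

- α : concatenate three vectors (h, u, and the element-wise product of h and u), then apply a linear transform. Here h is a context vector ci and u is a query vector qj.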
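The similarity function can be sketched in plain Python as Sij = wᵀ[ci ; qj ; ci ∘ qj]. The weight vector `w` is learned in the real model; the all-ones values and the function name here are assumptions for illustration.

```python
def similarity_matrix(context, query, w):
    """S[i][j] = w . [c_i ; q_j ; c_i * q_j] for every context/query pair."""
    S = []
    for c in context:
        row = []
        for q in query:
            # Concatenate c_i, q_j and their element-wise product.
            features = c + q + [ci * qi for ci, qi in zip(c, q)]
            row.append(sum(wk * fk for wk, fk in zip(w, features)))
        S.append(row)
    return S


context = [[1, 2], [0, 1]]   # N = 2 context vectors of dimension 2
query = [[3, 4]]             # M = 1 query vector
w = [1, 1, 1, 1, 1, 1]       # hypothetical weights, length 3 * dimension
S = similarity_matrix(context, query, w)
print(S)  # [[21], [12]]
```

The result has one row per context word and one column per query word, and the same matrix is reused for both attention directions.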

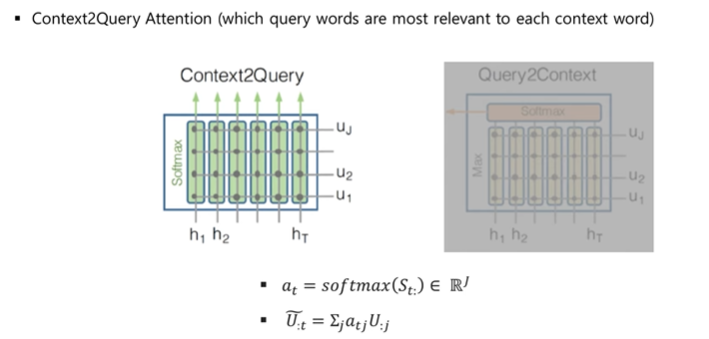

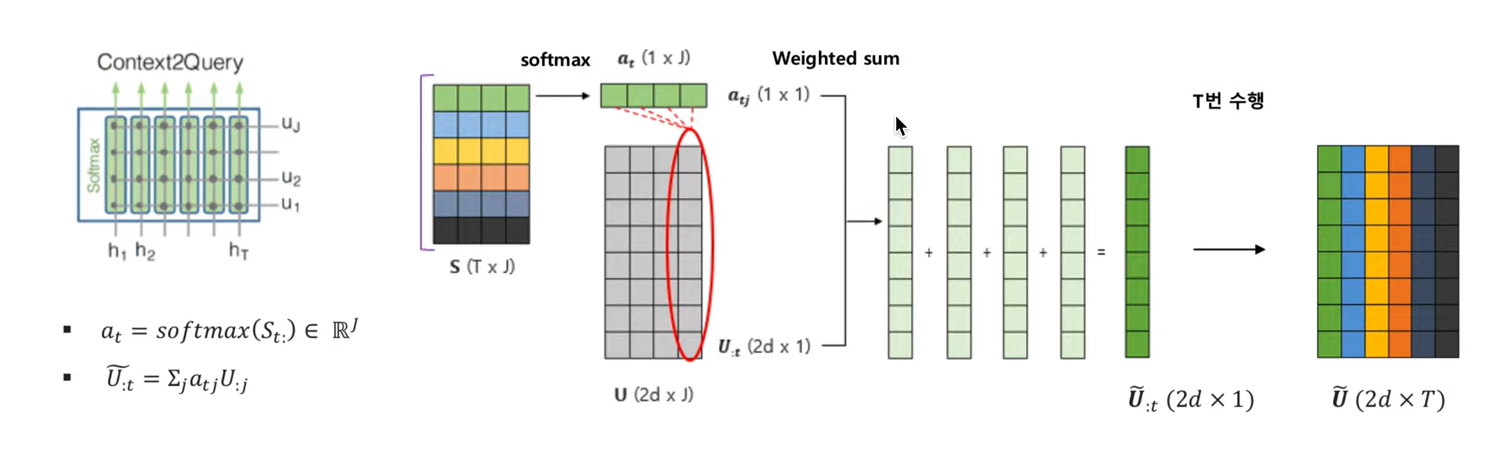

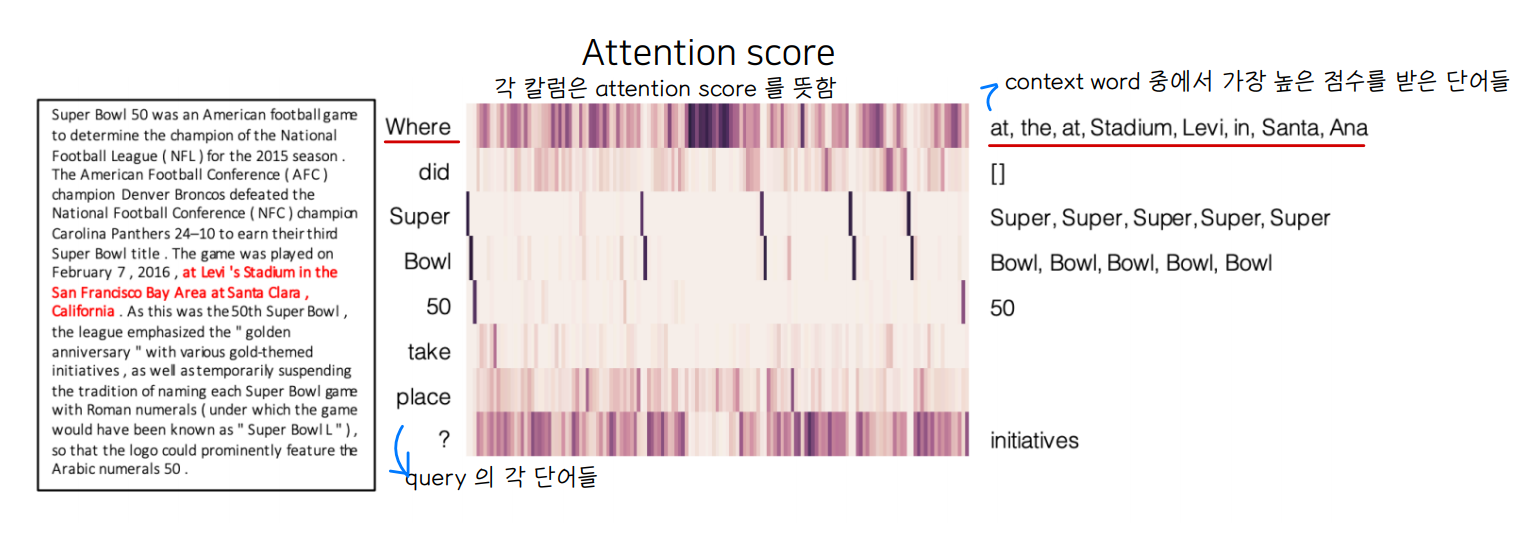

2-(1). Context2Query Attention

◾ For each context word, find the most relevant query words (which question words are related to ci?)

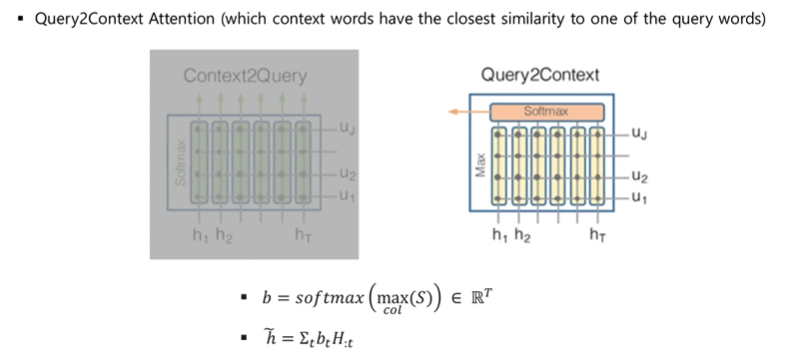

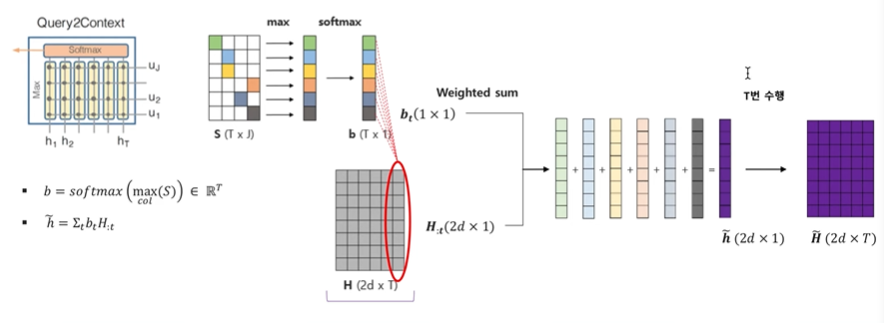

2-(2). Query2Context Attention

◾ Select the context words that are most relevant to one of the query words (which passage words are related to the question words?)

2-(3). Combine contextual embedding , attention vectors
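The three sub-steps 2-(1) to 2-(3) can be sketched together, following the formulation in the BiDAF paper: Context2Query takes a softmax over each row of S, Query2Context takes a softmax over the row-wise maxima of S, and the combined vector is gi = [ci ; ai ; ci ∘ ai ; ci ∘ b]. The function name and the toy inputs are assumptions.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def bidaf_attention(context, query, S):
    """Return the query-aware vectors g_i given the similarity matrix S (N x M)."""
    d = len(context[0])
    # Context2Query: each context word attends over the query words.
    attended_query = []
    for row in S:
        alpha = softmax(row)
        attended_query.append(
            [sum(a * q[k] for a, q in zip(alpha, query)) for k in range(d)]
        )
    # Query2Context: weight context words by their best match with any query word.
    beta = softmax([max(row) for row in S])
    b = [sum(w * c[k] for w, c in zip(beta, context)) for k in range(d)]
    # Combine: g_i = [c_i ; a_i ; c_i * a_i ; c_i * b]
    G = []
    for c, a in zip(context, attended_query):
        G.append(c + a + [x * y for x, y in zip(c, a)] + [x * y for x, y in zip(c, b)])
    return G


context = [[1.0, 0.0], [0.0, 1.0]]
query = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
S = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]   # all-equal scores give uniform attention
G = bidaf_attention(context, query, S)
print(len(G), len(G[0]))  # 2 8
```

Each gi is four times the size of the contextual embedding, which is what the modeling layer receives next.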

③ Predicting the start token and end token

💨 Modeling layer

◾ gi is passed to 2 more layers of bidirectional LSTM

◾ Models the interaction among the context words

💨 Output layer

◾ Classifiers that predict the start and end positions

◾ Training : the loss is the sum of the negative log likelihood of the start point and that of the end point
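The training objective can be sketched as below, assuming the output layer produces one logit per context position for the start and another for the end. The function name and the logit values are hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def span_nll(start_logits, end_logits, start_idx, end_idx):
    """Loss = -log p_start(start_idx) - log p_end(end_idx)."""
    p_start = softmax(start_logits)
    p_end = softmax(end_logits)
    return -math.log(p_start[start_idx]) - math.log(p_end[end_idx])


# Uniform logits over two positions: each term contributes log(2).
uniform_loss = span_nll([0.0, 0.0], [0.0, 0.0], 0, 1)
# Confident and correct logits give a loss close to zero.
confident_loss = span_nll([9.0, 0.0], [0.0, 9.0], 0, 1)
print(round(uniform_loss, 4))  # 1.3863
```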

④ Attention visualization

4. ELMo, BERT 👉 covered in the 2021 version of the lecture
