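👀 Regression analysis

- A statistical technique that grew out of the observation that data values tend to regress toward a fixed value such as the mean.

- A general term for techniques that model the relationship between several independent variables and one dependent variable.

- The dependent variable is numeric (continuous).

- The core of regression prediction in machine learning is finding the 'optimal regression coefficients'!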

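| Number of independent variables | Combination of regression coefficients |
|---|---|
| One: simple regression | Linear: linear regression |
| Several: multiple regression | Nonlinear: nonlinear regression |

- Objective of regression analysis: find the regression coefficients (w) that minimize the RSS (residual sum of squares)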
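To make the objective concrete, here is a minimal sketch that evaluates the RSS of a one-feature line for two candidate coefficient pairs. The helper name `rss` and the toy data are made up for illustration and are not part of the original notes.

```python
import numpy as np

def rss(w0, w1, x, y):
    """Residual sum of squares for the line y_hat = w0 + w1 * x."""
    residuals = y - (w0 + w1 * x)
    return float(np.sum(residuals ** 2))

# Toy data lying exactly on y = 1 + 2x (assumed values, for illustration only)
x = np.array([0.0, 1.0, 2.0, 3.0])
y = 1.0 + 2.0 * x

rss_true = rss(1.0, 2.0, x, y)     # the generating coefficients leave no residual
rss_shifted = rss(0.0, 2.0, x, y)  # a wrong intercept leaves a residual of 1 per point
print(rss_true, rss_shifted)
```

Regression then amounts to searching for the (w0, w1) pair that makes this number as small as possible.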

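03. Gradient descent

📌 Overview

💡 Gradient descent

- The key technique that made it possible for an algorithm to learn on its own from data

- It updates the W parameters step by step through repeated calculation until it reaches the W parameters that minimize the error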
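As a sketch of where the update rule comes from, assume a single feature, the prediction $\hat{y}_i = w_0 + w_1 x_i$, and mean squared error as the cost. The symbol $\eta$ for the learning rate is introduced here for illustration.

```latex
\begin{aligned}
\text{Cost}(w_0, w_1) &= \frac{1}{N}\sum_{i=1}^{N}\bigl(y_i - (w_0 + w_1 x_i)\bigr)^2 \\
\frac{\partial\,\text{Cost}}{\partial w_1} &= -\frac{2}{N}\sum_{i=1}^{N} x_i\,\bigl(y_i - \hat{y}_i\bigr) \\
\frac{\partial\,\text{Cost}}{\partial w_0} &= -\frac{2}{N}\sum_{i=1}^{N} \bigl(y_i - \hat{y}_i\bigr) \\
w_j &\leftarrow w_j - \eta\,\frac{\partial\,\text{Cost}}{\partial w_j}, \qquad j \in \{0, 1\}
\end{aligned}
```

Each step moves the weights a small distance against the gradient, so the cost decreases as long as the learning rate is small enough.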

import numpy as np

np.random.seed(0)

# Approximate y = 4X + 6 (w1=4, w0=6); the random term adds noise
X = 2 * np.random.rand(100, 1)
y = 6 + 4 * X + np.random.randn(100, 1)

# Cost function: mean squared error
def get_cost(y, y_pred):
    N = len(y)
    cost = np.sum(np.square(y - y_pred)) / N
    return cost

# Compute the weight updates for one step
def get_weight_updates(w1, w0, X, y, learning_rate=0.01):
    N = len(y)
    # Prediction and residual for the current weights
    y_pred = np.dot(X, w1.T) + w0
    diff = y - y_pred
    # Column of ones so the w0 update can also be computed with a dot product
    w0_factors = np.ones((N, 1))
    # Updates: learning_rate times the gradient of the MSE
    w1_update = -(2 / N) * learning_rate * (np.dot(X.T, diff))
    w0_update = -(2 / N) * learning_rate * (np.dot(w0_factors.T, diff))
    return w1_update, w0_update

# Apply the w1, w0 updates repeatedly, for the number of iterations given by iters
def gradient_descent_steps(X, y, iters=1000):
    # Initialize both weights to zero
    w0 = np.zeros((1, 1))
    w1 = np.zeros((1, 1))
    for ind in range(iters):
        w1_update, w0_update = get_weight_updates(w1, w0, X, y, learning_rate=0.01)
        w1 = w1 - w1_update
        w0 = w0 - w0_update
    return w1, w0

w1, w0 = gradient_descent_steps(X, y, iters=1000)
y_pred = w1[0, 0] * X + w0

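💡 Stochastic Gradient Descent (SGD)

- Computes the update to w from only part of the data rather than the whole data set.

- Fast

- For large data sets, stochastic gradient descent or mini-batch stochastic gradient descent is used to minimize the cost function.

- The only difference from the GD code is the added batch_size variable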
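The batch_size idea can be sketched as follows. This is a minimal illustration, not the original author's code: the function name `sgd_steps`, the use of `np.random.default_rng`, and the default values are assumptions chosen for the example.

```python
import numpy as np

def sgd_steps(X, y, batch_size=10, iters=1000, learning_rate=0.01, seed=0):
    """Mini-batch SGD for y ~ w0 + w1 * x, with X and y of shape (N, 1)."""
    rng = np.random.default_rng(seed)
    w0, w1 = 0.0, 0.0
    for _ in range(iters):
        # Draw a random batch instead of using all N rows
        idx = rng.permutation(len(y))[:batch_size]
        X_b, y_b = X[idx], y[idx]
        diff = y_b - (w0 + w1 * X_b)
        # Same MSE gradients as full-batch GD, averaged over the batch only
        w1 += learning_rate * (2 / batch_size) * float(np.sum(X_b * diff))
        w0 += learning_rate * (2 / batch_size) * float(np.sum(diff))
    return w1, w0

# Synthetic data around y = 4x + 6, generated the same way as in the GD example
rng = np.random.default_rng(0)
X = 2 * rng.random((100, 1))
y = 6 + 4 * X + rng.standard_normal((100, 1))

w1, w0 = sgd_steps(X, y)
print(f"w1={w1:.3f}, w0={w0:.3f}")
```

Each iteration touches only batch_size rows, which is why the cost per step stays constant as the data set grows; the price is a noisier path toward the minimum.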

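04. Linear regression practice: Boston housing price prediction

📌 Overview

💡 LinearRegression

🔸 Parameters

- fit_intercept : whether to fit an intercept (True/False). If set to False, no intercept is fitted and it is fixed at 0.

- normalize : True/False, default False. If True, the input data set is normalized before the regression is run. (This parameter was deprecated in scikit-learn 1.0 and removed in 1.2; with newer versions, scale the data beforehand, for example with StandardScaler.)

🔸 Attributes

- coef_ : the regression coefficients. Shape is (number of targets, number of features)

- intercept_ : the intercept

🔸 Cautions

- Multicollinearity : when input variables are strongly correlated with each other, the variance of the coefficient estimates becomes very large and the model becomes very sensitive to errors 👉 keep only the important, independent features and drop the rest, or apply regularization

- If too many features suffer from multicollinearity, dimensionality reduction with PCA is also worth considering.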

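🔸 Evaluation metrics

- MAE : the mean of the absolute differences between actual and predicted values

- MSE : the mean of the squared differences between actual and predicted values

- RMSE : the square root of MSE

- R^2 : evaluates prediction performance based on variance. The closer to 1, the more accurate the predictions.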

| Metric | scikit-learn function | Value for the scoring parameter |
|---|---|---|
| MAE | metrics.mean_absolute_error | neg_mean_absolute_error |
| MSE | metrics.mean_squared_error | neg_mean_squared_error |
| R^2 | metrics.r2_score | r2 |

✔ The scoring value is what is passed to the scoring parameter when evaluating with cross_val_score or GridSearchCV.

✔ The scoring names carry neg, meaning they return negative values, because scikit-learn's scoring treats a larger score as a better result. For regression metrics based on the error between actual and predicted values, a larger value means a worse model, so the value is negated. That way a smaller error is seen as a larger number.
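The metric definitions above can be checked by hand on a tiny example. This is a sketch with made-up numbers; the variable names are illustrative only.

```python
import numpy as np

y_true = np.array([3.0, 5.0, 7.0, 9.0])   # made-up actual values
y_pred = np.array([2.0, 5.0, 8.0, 11.0])  # made-up predictions

errors = y_true - y_pred
mae = np.mean(np.abs(errors))             # mean absolute error
mse = np.mean(errors ** 2)                # mean squared error
rmse = np.sqrt(mse)                       # root of the MSE
# R^2 = 1 - (residual sum of squares) / (total sum of squares around the mean)
r2 = 1 - np.sum(errors ** 2) / np.sum((y_true - y_true.mean()) ** 2)
# What a 'neg_mean_squared_error' scorer reports: the same error with its sign flipped
neg_mse = -mse

print(mae, mse, rmse, r2, neg_mse)
```

Because the scorer value is simply the negated error, taking `np.sqrt(-1 * score)` recovers the RMSE, which is the pattern used in the cross-validation code later in these notes.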

👀 Loading the data

import numpy as np
import matplotlib.pyplot as plt
import pandas as pd
import seaborn as sns
from scipy import stats
# Note: load_boston was deprecated in scikit-learn 1.0 and removed in 1.2,
# so this code needs an older scikit-learn version
from sklearn.datasets import load_boston
%matplotlib inline

# Load the Boston data set
boston = load_boston()

# Convert the Boston data set to a DataFrame
bostonDF = pd.DataFrame(boston.data, columns=boston.feature_names)

# The target array of the Boston data set is the house price; add it to the DataFrame as the PRICE column
bostonDF['PRICE'] = boston.target
print('Boston data set shape :', bostonDF.shape)
bostonDF.head()

# Boston data set shape : (506, 14)

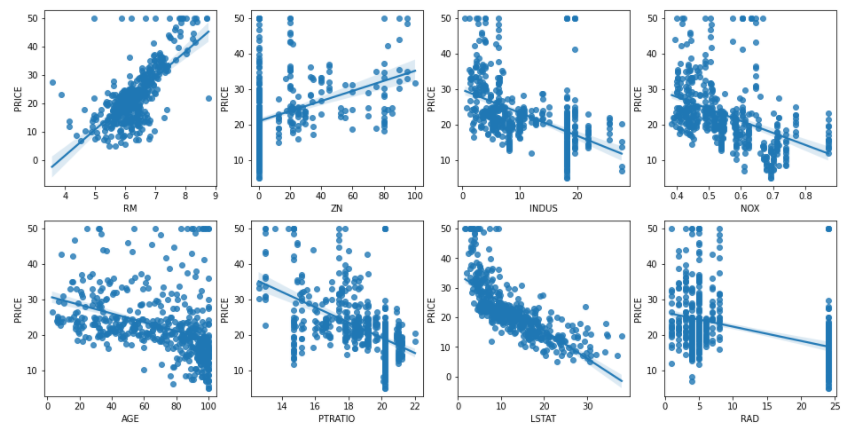

👀 Exploring the relationship between the dependent and explanatory variables: sns.regplot

# Visualize how strongly each explanatory variable relates to PRICE
fig, axs = plt.subplots(figsize=(16, 8), ncols=4, nrows=2)
lm_features = ['RM', 'ZN', 'INDUS', 'NOX', 'AGE', 'PTRATIO', 'LSTAT', 'RAD']
for i, feature in enumerate(lm_features):
    row = i // 4
    col = i % 4
    sns.regplot(x=feature, y='PRICE', data=bostonDF, ax=axs[row][col])

# RM (number of rooms) and LSTAT (share of lower-status population) show the most pronounced relationship with PRICE

👀 Training, predicting with, and evaluating the linear regression model

from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error, r2_score

y_target = bostonDF['PRICE']
X_data = bostonDF.drop(['PRICE'], axis=1, inplace=False)

X_train, X_test, y_train, y_test = train_test_split(X_data, y_target, test_size=0.3, random_state=156)

# Fit, predict, and evaluate with ordinary least squares (OLS) linear regression
lr = LinearRegression()
lr.fit(X_train, y_train)
y_preds = lr.predict(X_test)
mse = mean_squared_error(y_test, y_preds)
rmse = np.sqrt(mse)

print('MSE : {0:.3f} , RMSE : {1:.3f}'.format(mse, rmse))
print('Variance score : {0:.3f}'.format(r2_score(y_test, y_preds)))

# MSE : 17.297 , RMSE : 4.159
# Variance score : 0.757

👀 Checking the intercept and the regression coefficients

print('Intercept : ', lr.intercept_)
print('Regression coefficients : ', np.round(lr.coef_, 1))

# Intercept : 40.995595172164755
# Regression coefficients : [ -0.1 0.1 0. 3. -19.8 3.4 0. -1.7 0.4 -0. -0.9 0. -0.6]

👀 Cross-validation

# Cross-validation
from sklearn.model_selection import cross_val_score

y_target = bostonDF['PRICE']
X_data = bostonDF.drop(['PRICE'], axis=1, inplace=False)
lr = LinearRegression()

# Compute the MSE on 5 folds, then derive the RMSE from it
neg_mse_scores = cross_val_score(lr, X_data, y_target, scoring='neg_mean_squared_error', cv=5)
rmse_scores = np.sqrt(-1 * neg_mse_scores)
avg_rmse = np.mean(rmse_scores)

# Every value returned by cross_val_score(scoring='neg_mean_squared_error') is negative
print('Per-fold negative MSE scores (5 folds) : ', np.round(neg_mse_scores, 2))
print('Per-fold RMSE scores (5 folds) : ', np.round(rmse_scores, 2))
print('Mean RMSE over 5 folds : {0:.3f}'.format(avg_rmse))

# Per-fold negative MSE scores (5 folds) : [-12.46 -26.05 -33.07 -80.76 -33.31]
# Per-fold RMSE scores (5 folds) : [3.53 5.1 5.75 8.99 5.77]
# Mean RMSE over 5 folds : 5.829

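05. Polynomial regression

📌 Overview

💡 Polynomial regression

- Regression expressed with a polynomial of degree 2, 3, or higher, rather than a first-degree expression, is called polynomial regression.

- Note: polynomial regression is easily mistaken for nonlinear regression, but it is linear regression. Whether a model is linear or nonlinear depends on whether it is linear in the regression coefficients, not on whether the independent variables enter linearly.

- Because polynomial regression is linear regression, it is implemented by transforming the features with nonlinear functions and feeding them to a linear model 👉 PolynomialFeatures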
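The point that polynomial regression stays linear in the coefficients can be sketched with plain NumPy: the features are nonlinear in x, yet the coefficients come out of an ordinary least-squares solve. The data and variable names below are made up for illustration.

```python
import numpy as np

x = np.array([-2.0, -1.0, 0.0, 1.0, 2.0])
y = 1 + 2 * x + 3 * x ** 2  # noise-free quadratic, assumed for illustration

# Design matrix with columns [1, x, x^2]: nonlinear in x, linear in the coefficients
A = np.column_stack([np.ones_like(x), x, x ** 2])

# Ordinary least squares on the expanded features
coef, *_ = np.linalg.lstsq(A, y, rcond=None)
print(np.round(coef, 6))
```

PolynomialFeatures automates the construction of the expanded matrix, including cross terms when there are several input features.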

👀 Example of generating polynomial features

from sklearn.preprocessing import PolynomialFeatures
import numpy as np

# Create the first-degree features that will be expanded into polynomial features
X = np.arange(4).reshape(2, 2)
print('First-degree features : \n', X)  # [[0,1],[2,3]]

# Use PolynomialFeatures with degree = 2 to expand them into second-degree polynomial features
poly = PolynomialFeatures(degree=2)  # [1, x1, x2, x1^2, x1x2, x2^2]
poly.fit(X)
poly_ftr = poly.transform(X)
print('Transformed second-degree polynomial features : \n', poly_ftr)

# [[1. 0. 1. 0. 0. 1.],
# [1. 2. 3. 4. 6. 9.]]

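👀 Implementing polynomial regression

# Try implementing polynomial regression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PolynomialFeatures
from sklearn.linear_model import LinearRegression
import numpy as np

def polynomial_func(X):
    y = 1 + 2*X[:,0] + 3*X[:,0]**2 + 4*X[:,1]**3  # y = 1 + 2*x1 + 3*x1^2 + 4*x2^3
    return y

# Chain the polynomial transform and the linear regression in a Pipeline
model = Pipeline([('poly', PolynomialFeatures(degree=3)),
                  ('linear', LinearRegression())])
X = np.arange(4).reshape(2, 2)  # ((x1,x2),(x1,x2))
y = polynomial_func(X)

model = model.fit(X, y)
print('Polynomial regression coefficients\n', np.round(model.named_steps['linear'].coef_, 2))

# With only two samples the fit is underdetermined, so the result differs from the generating coefficients
# [0. 0.18 0.18 0.36 0.54 0.72 0.72 1.08 1.62 2.34]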

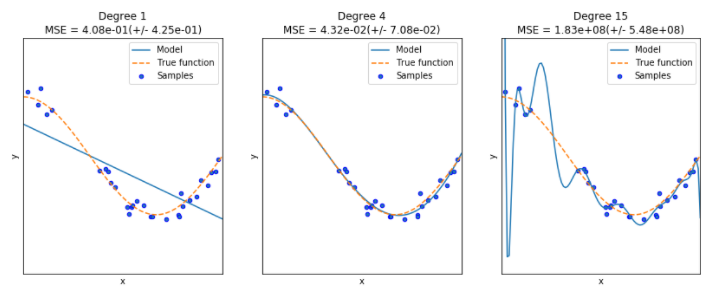

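📌 Understanding underfitting and overfitting

- The best model is a balanced one that captures the pattern in the training data well without being overly complex.

📌 Bias-variance trade-off

- A model like Degree 1 is said to have 'high bias' 👉 the model is skewed too strongly in one direction

- A model like Degree 15 is said to have 'high variance' 👉 it learns even the fine details of the training data and ends up with excessive variability

- When bias is high, variance tends to be low (underfitting); when variance is high, bias tends to be low (overfitting).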
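A rough way to see the trade-off is to fit polynomials of degree 1, 4, and 15 to the same noisy samples and compare training and test error. This sketch uses `np.polyfit` on an assumed noisy cosine curve; the data, the degree choices, and the helper `mse` are illustrative assumptions, not the figure from the original notes.

```python
import numpy as np

rng = np.random.default_rng(0)
# 30 noisy training samples of an assumed true curve cos(1.5 * pi * x)
x_train = np.sort(rng.random(30))
y_train = np.cos(1.5 * np.pi * x_train) + 0.1 * rng.standard_normal(30)
# Noise-free test points on the same curve
x_test = np.linspace(0.05, 0.95, 50)
y_test = np.cos(1.5 * np.pi * x_test)

def mse(coefs, x, y):
    """Mean squared error of the polynomial with the given coefficients."""
    return float(np.mean((np.polyval(coefs, x) - y) ** 2))

results = {}
for degree in (1, 4, 15):
    coefs = np.polyfit(x_train, y_train, degree)  # may warn about conditioning at degree 15
    results[degree] = (mse(coefs, x_train, y_train), mse(coefs, x_test, y_test))
    print(degree, results[degree])
```

The straight line cannot follow the curve (high bias), while the degree-15 fit drives the training error down by chasing the noise; how badly that hurts the test error depends on the particular sample.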

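06. Regularized linear models: Ridge, Lasso, ElasticNet

📌 Overview

💡 Why regularization is needed

- A regression model should fit the data adequately while keeping the regression coefficients from growing explosively.

- Cost function for the optimal model = minimizing the residual error on the training data ➕ controlling the size of the regression coefficients

Cost function objective: $\min_W \left( RSS(W) + \alpha \lVert W \rVert_2^2 \right)$

✨ alpha is a tuning parameter

👉 Find the W vector that minimizes the cost function

- With a large alpha the penalty term dominates the cost, so minimizing the cost pushes the coefficients W toward 0, which mitigates overfitting.

- With a small alpha the penalty stays small even when the coefficients W grow, so the fit to the training data can improve.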

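💡 Regularization

- Adds a penalty weighted by alpha to the cost function to shrink the regression coefficients and mitigate overfitting

- It comes in two forms, L1 and L2.

- L1 regularization penalizes the absolute values of W and is called 'Lasso' regression. With L1 regularization, coefficients with little influence are set to 0.

- L2 regularization penalizes the squares of W and is called 'Ridge' regression.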
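The shrinking effect of the L2 penalty can be sketched with the closed-form ridge solution. This is a minimal illustration under stated assumptions: no intercept, synthetic data, and a hypothetical helper `ridge_coef`; scikit-learn's Ridge handles the intercept and solver details itself.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic data: 3 features with assumed true coefficients [3, -2, 0.5]
X = rng.standard_normal((50, 3))
y = X @ np.array([3.0, -2.0, 0.5]) + 0.1 * rng.standard_normal(50)

def ridge_coef(X, y, alpha):
    """Closed-form minimizer of RSS(W) + alpha * ||W||_2^2 (no intercept)."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)

alphas = (0, 1, 10, 100, 1000)
norms = [float(np.linalg.norm(ridge_coef(X, y, a))) for a in alphas]
for a, n in zip(alphas, norms):
    print(f"alpha={a:>5}: ||W|| = {n:.3f}")
```

The coefficient norm falls as alpha grows, but the individual coefficients only approach 0 and do not reach it exactly.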

📌 Ridge regression

💡 Ridge

- Main parameter : alpha

💡 Practice

👀 Ridge regression with alpha = 10

from sklearn.linear_model import Ridge
from sklearn.model_selection import cross_val_score

# Run ridge regression with alpha = 10
ridge = Ridge(alpha=10)
neg_mse_scores = cross_val_score(ridge, X_data, y_target, scoring='neg_mean_squared_error', cv=5)
rmse_scores = np.sqrt(-1 * neg_mse_scores)
avg_rmse = np.mean(rmse_scores)

print('Per-fold negative MSE scores (5 folds) : ', np.round(neg_mse_scores, 3))
print('Per-fold RMSE scores (5 folds) : ', np.round(rmse_scores, 3))
print('Mean RMSE over 5 folds : {0:.3f}'.format(avg_rmse))

# The unregularized regression scored 5.829, so the regularized model predicts better here
# Per-fold negative MSE scores (5 folds) : [-11.422 -24.294 -28.144 -74.599 -28.517]
# Per-fold RMSE scores (5 folds) : [3.38 4.929 5.305 8.637 5.34 ]
# Mean RMSE over 5 folds : 5.518

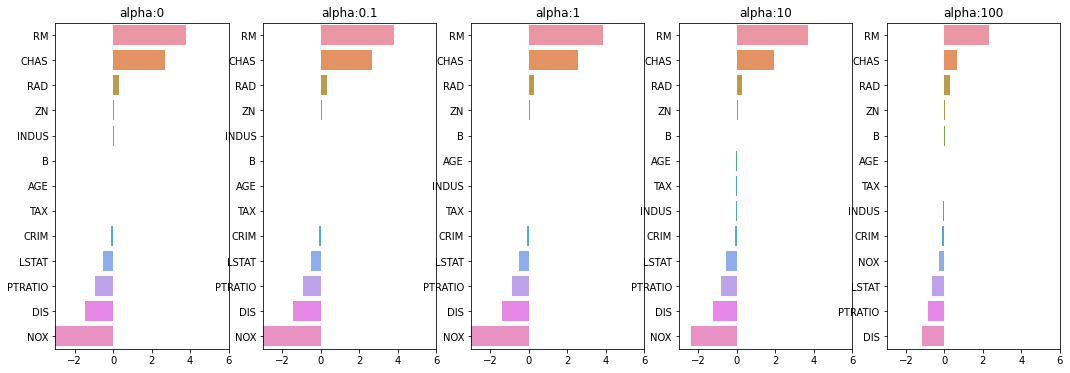

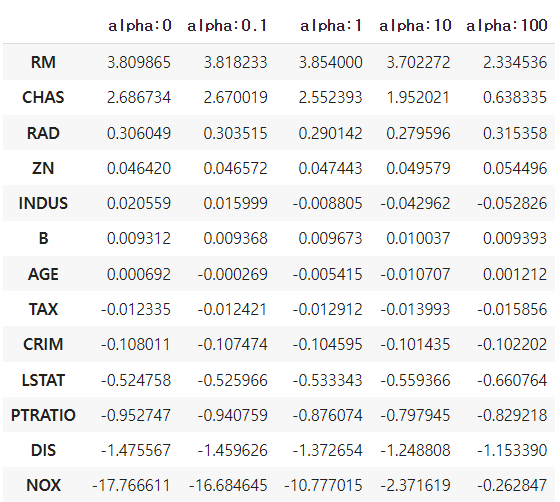

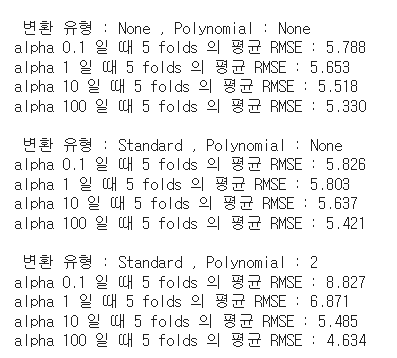

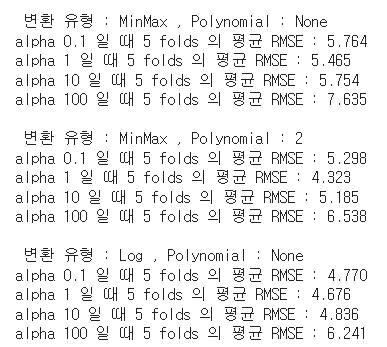

👀 Measuring RMSE for several alpha values

# Measure while varying alpha
alphas = [0, 0.1, 1, 10, 100]

# Compute the mean RMSE for each alpha
for alpha in alphas:
    ridge = Ridge(alpha=alpha)
    # Use cross_val_score to compute the mean over 5 folds
    neg_mse_scores = cross_val_score(ridge, X_data, y_target, scoring='neg_mean_squared_error', cv=5)
    avg_rmse = np.mean(np.sqrt(-1 * neg_mse_scores))
    print('Mean RMSE over 5 folds for alpha {0} : {1:.3f}'.format(alpha, avg_rmse))

# Best result at alpha = 100
# Mean RMSE over 5 folds for alpha 0 : 5.829
# Mean RMSE over 5 folds for alpha 0.1 : 5.788
# Mean RMSE over 5 folds for alpha 1 : 5.653
# Mean RMSE over 5 folds for alpha 10 : 5.518
# Mean RMSE over 5 folds for alpha 100 : 5.330

👀 Looking at how the coefficient sizes change with alpha

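📌 Lasso regression

💡 Lasso

- Main parameter : alpha

- Whereas L2 regularization shrinks the size of the coefficients, L1 regularization shrinks unnecessary coefficients sharply, all the way to 0, and so removes them 👉 it has a feature-selection property: only the relevant features stay in the regression.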
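Why L1 produces exact zeros while L2 does not can be sketched with the two shrinkage rules that arise in the simplified case of orthonormal features. That simplification, the helper names, and the threshold `t` (standing in for a quantity proportional to alpha) are assumptions made for illustration; they are not how scikit-learn's Lasso is implemented.

```python
import numpy as np

def soft_threshold(w, t):
    """L1-style shrinkage: move each value toward 0 by t and clip at exactly 0."""
    return np.sign(w) * np.maximum(np.abs(w) - t, 0.0)

def l2_shrink(w, t):
    """L2-style shrinkage under the same simplification: rescale, never exactly 0."""
    return w / (1.0 + t)

# Made-up unregularized coefficients: two large, two small
w_ols = np.array([3.0, -0.4, 0.2, -1.5])

w_l1 = soft_threshold(w_ols, 0.5)
w_l2 = l2_shrink(w_ols, 0.5)
print(w_l1)
print(w_l2)
```

The two small coefficients fall below the threshold and are removed by the L1 rule, while the L2 rule keeps all four at a reduced size.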

💡 Practice

👀 Building a helper that applies the models

from sklearn.linear_model import Ridge, Lasso, ElasticNet

# Print the mean cross-validated RMSE for each alpha and return the coefficients as a DataFrame
def get_linear_reg_eval(model_name, params=None, X_data_n=None, y_target_n=None, verbose=True):
    coeff_df = pd.DataFrame()
    if verbose: print('#####', model_name, '#####')
    for param in params:
        if model_name == 'Ridge': model = Ridge(alpha=param)
        elif model_name == 'Lasso': model = Lasso(alpha=param)
        elif model_name == 'ElasticNet': model = ElasticNet(alpha=param, l1_ratio=0.7)
        neg_mse_scores = cross_val_score(model, X_data_n, y_target_n, scoring='neg_mean_squared_error', cv=5)
        avg_rmse = np.mean(np.sqrt(-1 * neg_mse_scores))
        print('Mean RMSE over 5 folds for alpha {0} : {1:.3f}'.format(param, avg_rmse))
        # cross_val_score only returns the evaluation metric, so refit the model to extract the coefficients
        # (fit on the data passed in, not on the global X_data / y_target)
        model.fit(X_data_n, y_target_n)
        # Turn the per-feature coefficients for this alpha into a Series and add it as a DataFrame column
        # (transformed inputs may be plain arrays without column names)
        coeff = pd.Series(data=model.coef_, index=getattr(X_data_n, 'columns', None))
        colname = 'alpha:' + str(param)
        coeff_df[colname] = coeff
    return coeff_df

👀 Results for several alpha values

lasso_alphas = [0.07, 0.1, 0.5, 1, 3]
coeff_lasso_df = get_linear_reg_eval('Lasso', params=lasso_alphas, X_data_n=X_data, y_target_n=y_target)

##### Lasso #####
# Mean RMSE over 5 folds for alpha 0.07 : 5.612
# Mean RMSE over 5 folds for alpha 0.1 : 5.615
# Mean RMSE over 5 folds for alpha 0.5 : 5.669
# Mean RMSE over 5 folds for alpha 1 : 5.776
# Mean RMSE over 5 folds for alpha 3 : 6.189

👀 Printing the regression coefficients

# Print the regression coefficients
sort_column = 'alpha:' + str(lasso_alphas[0])
coeff_lasso_df.sort_values(by=sort_column, ascending=False)

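📌 ElasticNet regression

💡 Elastic Net

- Regression that combines L2 and L1 regularization

- Among strongly correlated features, Lasso (L1) tends to keep only the important one and set the coefficients of the others to 0, so its coefficients can change abruptly as alpha changes. ElasticNet adds L2 regularization to Lasso to soften this.

- Drawback : it takes a long time to run

💡 Parameters

- alpha 👉 equals (a + b) in the penalty a*L1 + b*L2, where a is the alpha of the L1 penalty and b is the alpha of the L2 penalty.

- l1_ratio 👉 a/(a+b)

- If l1_ratio = 0 then a = 0, so it is the same as L2 regularization. If it is 1 then b = 0, so it is the same as L1 regularization.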
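The relationship between alpha, l1_ratio, a, and b can be written out directly. The sketch below follows the definition given in the notes above; the function name is hypothetical, and scikit-learn's documented objective scales the terms slightly differently (for example, it puts a factor of 0.5 on the L2 term).

```python
def split_elastic_net_penalty(alpha, l1_ratio):
    """Recover (a, b) from alpha = a + b and l1_ratio = a / (a + b)."""
    a = alpha * l1_ratio        # weight on the L1 penalty
    b = alpha * (1 - l1_ratio)  # weight on the L2 penalty
    return a, b

# The helper function in these notes uses l1_ratio = 0.7
a, b = split_elastic_net_penalty(alpha=0.5, l1_ratio=0.7)
print(a, b)

# The two extremes reduce to pure L2 and pure L1 regularization
pure_l2 = split_elastic_net_penalty(alpha=0.5, l1_ratio=0.0)
pure_l1 = split_elastic_net_penalty(alpha=0.5, l1_ratio=1.0)
print(pure_l2, pure_l1)
```

With l1_ratio = 0.7, most of the penalty weight goes to the L1 term, so the model keeps much of Lasso's feature-selection behavior.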

💡 Practice

👀 Training the model

elastic_alphas = [0.07, 0.1, 0.5, 1, 3]
coeff_elastic_df = get_linear_reg_eval('ElasticNet', params=elastic_alphas, X_data_n=X_data, y_target_n=y_target)

##### ElasticNet #####
# Mean RMSE over 5 folds for alpha 0.07 : 5.542
# Mean RMSE over 5 folds for alpha 0.1 : 5.526
# Mean RMSE over 5 folds for alpha 0.5 : 5.467
# Mean RMSE over 5 folds for alpha 1 : 5.597
# Mean RMSE over 5 folds for alpha 3 : 6.068

👀 Looking at the regression coefficients

sort_column = 'alpha:' + str(elastic_alphas[0])
coeff_elastic_df.sort_values(by=sort_column, ascending=False)

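📌 Data transformation for linear regression models

💡 In linear regression, normalizing the data distribution and choosing the encoding method matter as much as finding the optimal parameters.

- A linear regression model assumes a 'linear' relationship between the features and the target

- It strongly prefers feature and target values that follow a 'normal distribution' 👉 a heavily skewed distribution is likely to hurt prediction performance, so 'scaling/normalization' is applied before fitting the model.

💡 Scaling/normalizing the feature variables

- StandardScaler : rescales the data set to mean 0 and variance 1 (this standardizes the scale; it does not by itself make the distribution normal)

- MinMaxScaler : normalizes the values to a minimum of 0 and a maximum of 1

- If applying a scaler does not improve prediction performance, 'polynomial features' are sometimes applied on top of the scaled/normalized data set. When using polynomial features, watch out for overfitting.

- Log transform : applying the log function to the original values usually gives a distribution closer to normal. ⭐⭐

💡 Scaling/normalizing the target variable

- A log transform is generally applied (after normalization it is hard to convert the values back to the original target scale)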
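The reason the log transform is easy to undo can be sketched with `np.log1p` and its inverse `np.expm1`. The target values below are made up for illustration.

```python
import numpy as np

# Made-up, strongly right-skewed target values
y = np.array([0.0, 9.0, 99.0, 999.0])

y_log = np.log1p(y)       # log(1 + y): defined at 0 and compresses large values
y_back = np.expm1(y_log)  # exp(y_log) - 1: exact inverse of log1p

print(y_log)
print(y_back)
```

A model trained on `y_log` produces predictions on the log scale, and applying `np.expm1` to those predictions brings them back to the original units.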

💡 Practice

👀 Building the data transformation pipeline

# Choose among Standard, MinMax, and Log
# p_degree is the degree of the polynomial features to add; do not use a value above 2
from sklearn.preprocessing import StandardScaler
from sklearn.preprocessing import MinMaxScaler
from sklearn.preprocessing import PolynomialFeatures

def get_scaled_data(method=None, p_degree=None, input_data=None):
    if method == 'Standard':
        scaled_data = StandardScaler().fit_transform(input_data)
    elif method == 'MinMax':
        scaled_data = MinMaxScaler().fit_transform(input_data)
    elif method == 'Log':
        scaled_data = np.log1p(input_data)
    else:
        scaled_data = input_data
    if p_degree is not None:
        scaled_data = PolynomialFeatures(degree=p_degree, include_bias=False).fit_transform(scaled_data)
    return scaled_data

👀 Training the ridge regression model

# Ridge regression
alphas = [0.1, 1, 10, 100]
scale_methods = [(None, None), ('Standard', None), ('Standard', 2), ('MinMax', None), ('MinMax', 2), ('Log', None)]
for scale_method in scale_methods:
    X_data_scaled = get_scaled_data(method=scale_method[0], p_degree=scale_method[1], input_data=X_data)
    print('\n Transform type : {0} , Polynomial : {1}'.format(scale_method[0], scale_method[1]))
    get_linear_reg_eval('Ridge', params=alphas, X_data_n=X_data_scaled, y_target_n=y_target, verbose=False)

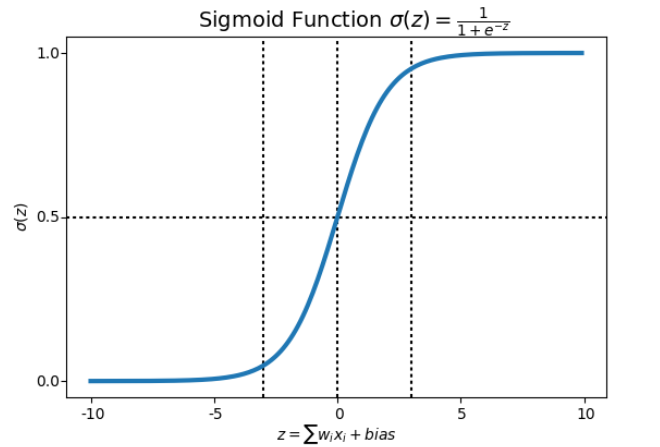

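07. Logistic regression

📌 Overview

💡 Logistic regression

- An algorithm that applies the linear regression approach to classification; it belongs to the linear regression family.

- As a member of the linear regression family, logistic regression can be affected by how the data is distributed, so it is a good idea to apply standard scaling to the data first.

- It is light and fast, so it is widely used as a baseline model for binary classification, and it also performs well on sparse data sets, which is why it is often used for text classification.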

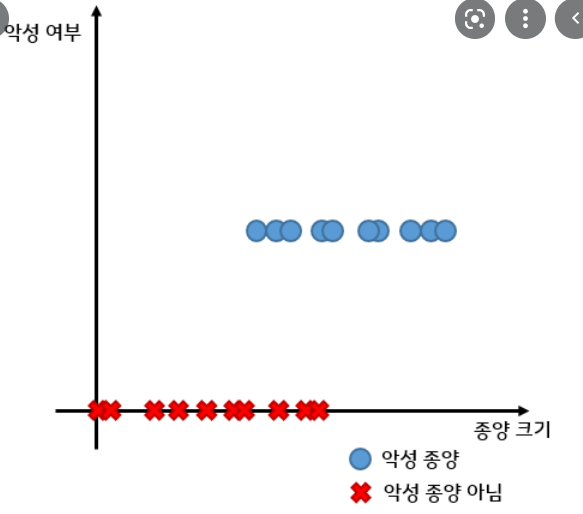

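🧐 How it differs from linear regression

- Instead of finding the best-fit line of a linear function, training finds the best-fit sigmoid function, treats the sigmoid's output as a probability, and decides the class from that probability.

- Example: classifying malignant tumors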
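The step from a linear score to a class label can be sketched as follows. The scores and the 0.5 cut-off are assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    """Map any real-valued score to the interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

# Made-up linear scores w0 + w1*x1 + ... for three samples
z = np.array([-4.0, 0.0, 4.0])

proba = sigmoid(z)                    # interpreted as the probability of class 1
labels = (proba >= 0.5).astype(int)   # assumed decision threshold of 0.5

print(np.round(proba, 3))
print(labels)
```

A score of 0 sits exactly on the decision boundary, and the further the score moves from 0, the closer the probability gets to 0 or 1.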

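💡 Main parameters

- penalty : sets the type of regularization. l1 applies L1 regularization, l2 applies L2 regularization

- C : the inverse of alpha, which controls the regularization strength. The smaller the value, the stronger the regularization.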

📌 Practice

👀 Wisconsin breast cancer data example

import pandas as pd
import matplotlib.pyplot as plt
%matplotlib inline

from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression

cancer = load_breast_cancer()

👀 Scaling the variables

from sklearn.preprocessing import StandardScaler
from sklearn.model_selection import train_test_split

scaler = StandardScaler()
data_scaled = scaler.fit_transform(cancer.data)
X_train, X_test, y_train, y_test = train_test_split(data_scaled, cancer.target, test_size=0.3, random_state=0)

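👀 Training the model and evaluating performance

# Train the model and evaluate performance
from sklearn.metrics import accuracy_score, roc_auc_score

# Train and predict with logistic regression
lr_clf = LogisticRegression()
lr_clf.fit(X_train, y_train)
lr_pred = lr_clf.predict(X_test)

# Measure accuracy and ROC AUC
# Note: roc_auc_score is given hard class labels here; passing
# lr_clf.predict_proba(X_test)[:, 1] would score the predicted probabilities instead
print('accuracy : {:0.3f}'.format(accuracy_score(y_test, lr_pred)))
print('roc_auc : {:0.3f}'.format(roc_auc_score(y_test, lr_pred)))

# accuracy : 0.977
# roc_auc : 0.972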

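👀 Hyperparameter tuning

from sklearn.model_selection import GridSearchCV

# Note: since scikit-learn 0.22 the default solver is lbfgs, which does not support the 'l1' penalty,
# so those candidates fail to fit unless a solver such as 'liblinear' or 'saga' is set on the estimator
params = {'penalty': ['l1', 'l2'], 'C': [0.01, 0.1, 1, 5, 10]}
grid_clf = GridSearchCV(lr_clf, param_grid=params, scoring='accuracy', cv=3)
grid_clf.fit(data_scaled, cancer.target)
print('Best hyperparameters:{0}, best mean accuracy {1:.3f}'.format(grid_clf.best_params_, grid_clf.best_score_))

# Best hyperparameters:{'C': 1, 'penalty': 'l2'}, best mean accuracy 0.975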

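08. Regression trees

📌 Overview

💡 Regression based on a 'regression function' VS regression based on a 'tree'

- Linear regression assumes the regression coefficients enter linearly: it finds a regression function that combines the coefficients linearly and feeds in the independent variables to predict the outcome. Nonlinear regression combines the coefficients nonlinearly and builds a nonlinear regression function to predict the outcome.

➕ On nonlinear regression functions and modeling curves : https://blog.minitab.com/ko/adventures-in-statistics-2/what-is-the-difference-between-linear-and-nonlinear-equations-in-regression-analysis

- This part introduces tree-based regression, such as decision trees, which is not built on a regression function.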

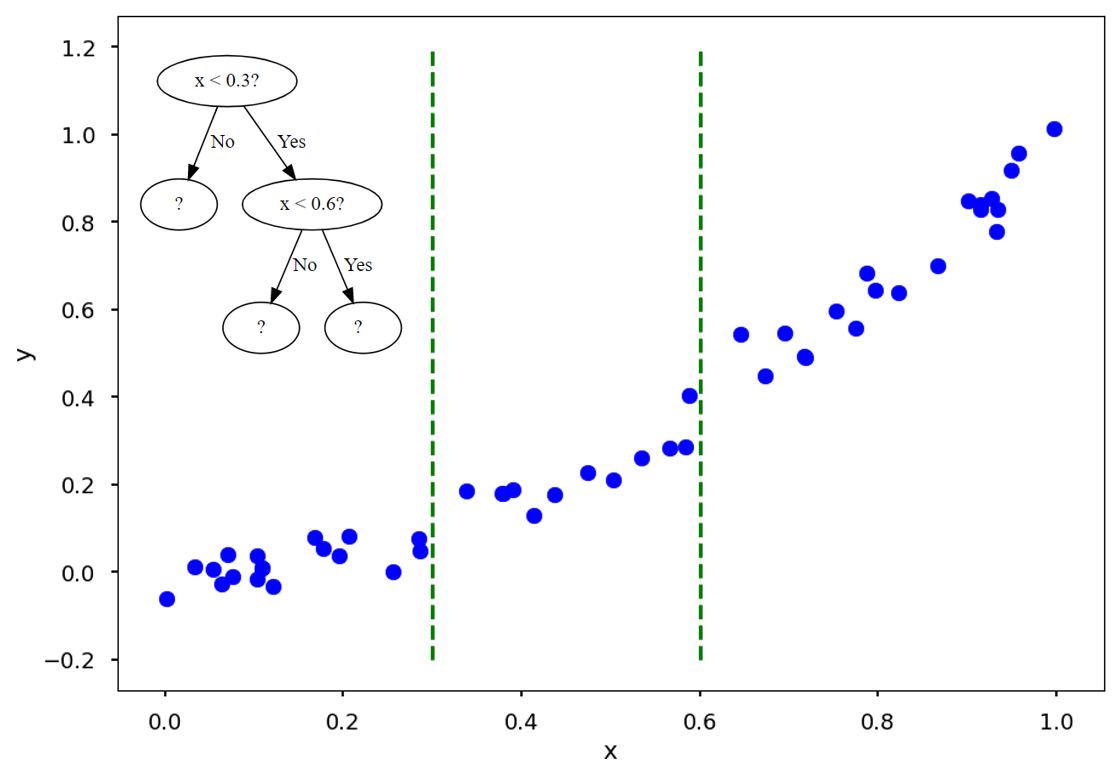

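💡 Regression tree

- The regression prediction is the mean of the target values of the data that fall in a leaf node.

- The hyperparameters are the same as those of the classification tree (Classifier).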
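How a regression tree turns leaf means into predictions can be sketched with a single split (a depth-1 tree). The split criterion used here, the summed squared error around each leaf mean, and the helper names are assumptions for illustration; library implementations add many refinements.

```python
import numpy as np

def best_split(x, y):
    """Return the threshold on x that minimizes the squared error around the two leaf means."""
    best_t, best_sse = None, np.inf
    xs = np.unique(x)                      # sorted unique feature values
    for t in (xs[:-1] + xs[1:]) / 2:       # candidate thresholds: midpoints between neighbors
        left, right = y[x <= t], y[x > t]
        sse = np.sum((left - left.mean()) ** 2) + np.sum((right - right.mean()) ** 2)
        if sse < best_sse:
            best_t, best_sse = float(t), float(sse)
    return best_t

# Made-up data with two clearly separated groups
x = np.array([1.0, 2.0, 3.0, 10.0, 11.0, 12.0])
y = np.array([5.0, 6.0, 7.0, 20.0, 21.0, 22.0])

t = best_split(x, y)

def predict(x_new):
    """Predict with the mean target value of the leaf that x_new falls into."""
    return float(y[x <= t].mean()) if x_new <= t else float(y[x > t].mean())

print(t, predict(2.0), predict(11.5))
```

Every input that lands in the same leaf gets the same prediction, which is why the fitted curve of a regression tree looks like a staircase.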

💡 CART (Classification And Regression Trees)

- Tree-based algorithms (decision tree, random forest, GBM, XGBoost, LightGBM, and so on) can do regression as well as classification, because their tree construction is based on the CART algorithm.

| Algorithm | Regression estimator | Classification estimator |
|---|---|---|
| Decision Tree | DecisionTreeRegressor | DecisionTreeClassifier |
| Gradient Boosting | GradientBoostingRegressor | GradientBoostingClassifier |
| XGBoost | XGBRegressor | XGBClassifier |
| RandomForest | RandomForestRegressor | RandomForestClassifier |
| LightGBM | LGBMRegressor | LGBMClassifier |

💡 Practice

👀 Boston housing price prediction example

# Note: load_boston was removed in scikit-learn 1.2, so this code needs an older version
from sklearn.datasets import load_boston
from sklearn.model_selection import cross_val_score
from sklearn.ensemble import RandomForestRegressor
import pandas as pd
import numpy as np

# Load the Boston data set
boston = load_boston()

# Convert the Boston data set to a DataFrame
bostonDF = pd.DataFrame(boston.data, columns=boston.feature_names)

# The target array of the Boston data set is the house price; add it to the DataFrame as the PRICE column
bostonDF['PRICE'] = boston.target

y_target = bostonDF['PRICE']
X_data = bostonDF.drop(['PRICE'], axis=1, inplace=False)

👀 Training and evaluating a random forest regression model

rf = RandomForestRegressor(random_state=0, n_estimators=1000)
neg_mse_scores = cross_val_score(rf, X_data, y_target, scoring='neg_mean_squared_error', cv=5)
rmse_scores = np.sqrt(-1 * neg_mse_scores)
avg_rmse = np.mean(rmse_scores)

print(np.round(neg_mse_scores, 2))  # [ -7.88 -13.14 -20.57 -46.23 -18.88]
print(np.round(rmse_scores, 2))  # [2.81 3.63 4.54 6.8 4.34]
print(avg_rmse)  # 4.422538982804892

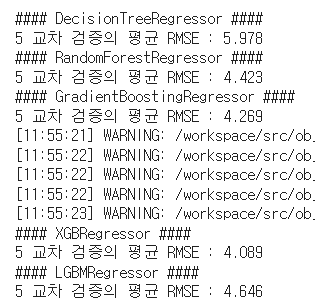

👀 Predicting with the random forest, decision tree, GBM, XGBoost, and LightGBM regressors

# Define the helper function
def get_model_cv_prediction(model, X_data, y_target):
    neg_mse_scores = cross_val_score(model, X_data, y_target, scoring='neg_mean_squared_error', cv=5)
    rmse_scores = np.sqrt(-1 * neg_mse_scores)
    avg_rmse = np.mean(rmse_scores)
    print('####', model.__class__.__name__, '####')
    print('Mean RMSE over 5-fold cross-validation : {0:.3f}'.format(avg_rmse))

# Apply it
from sklearn.tree import DecisionTreeRegressor
from sklearn.ensemble import GradientBoostingRegressor
from xgboost import XGBRegressor
from lightgbm import LGBMRegressor

dt_reg = DecisionTreeRegressor(random_state=0, max_depth=4)
rf_reg = RandomForestRegressor(random_state=0, n_estimators=1000)
gb_reg = GradientBoostingRegressor(random_state=0, n_estimators=1000)
xgb_reg = XGBRegressor(n_estimators=1000)
lgb_reg = LGBMRegressor(n_estimators=1000)

# Loop over the tree-based regression models and evaluate each one
models = [dt_reg, rf_reg, gb_reg, xgb_reg, lgb_reg]
for model in models:
    get_model_cv_prediction(model, X_data, y_target)

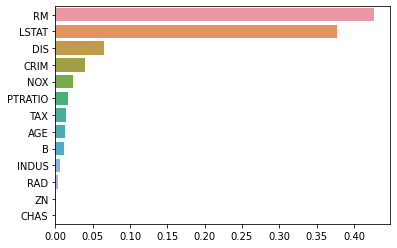

💡 Feature importance in regression trees

- The Regressor classes for regression trees work differently from linear regression, so they have no coef_ attribute with regression coefficients. Instead, feature_importances_ gives the importance of each feature.

import seaborn as sns
%matplotlib inline

rf_reg = RandomForestRegressor(n_estimators=1000)
rf_reg.fit(X_data, y_target)

feature_series = pd.Series(data=rf_reg.feature_importances_, index=X_data.columns)
feature_series = feature_series.sort_values(ascending=False)
sns.barplot(x=feature_series, y=feature_series.index)

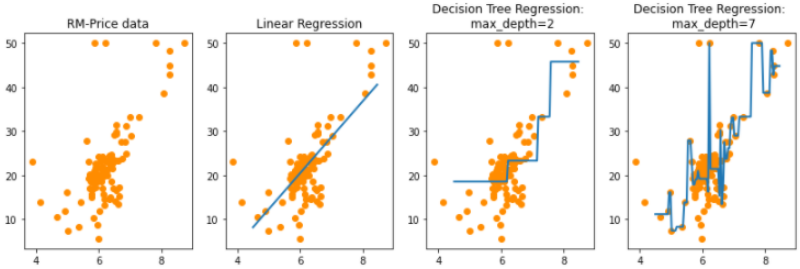

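💡 Comparing linear regression and regression trees

- Linear regression draws the predicted regression line as a straight line

- A regression tree creates branches at the points where the data is split, so its regression line has a staircase shape. In the graph for max_depth = 2, each step returns the mean y value of its interval as the prediction.

- With the maximum tree depth set to 7, the tree also learns the outliers in the training data set, and the overfitting is visible.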
