https://colab.research.google.com/drive/1uB-7ckV-Mrh0Zfugv9OIm7QuM_j2OLg5?usp=sharing

[딥러닝 파이토치 교과서 (Deep Learning PyTorch Textbook)] chapter 05 Convolutional Neural Networks.ipynb


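1️⃣ Convolutional Neural Networks

🔹 Why convolutional layers are needed

🌠 Less computation

• Instead of processing the whole image at once, a CNN computes over small local regions. This saves time and resources and lets the network analyze fine details of the image.

🌠 A structure suited to image/video processing

• Convolutional layers exist to preserve the spatial structure of image data (e.g., a 3x3 neighborhood) instead of flattening the image into a 1D vector and multiplying it by weights.

• Designed to process multidimensional arrays 👉 specialized for multidimensional data such as color images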

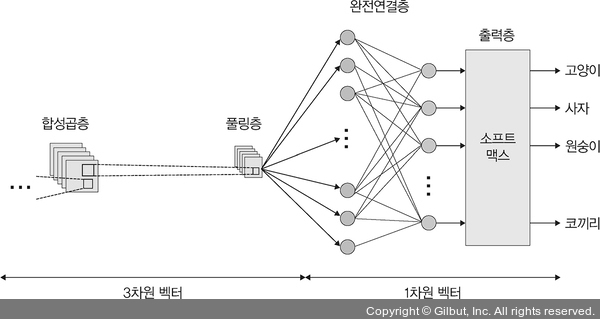

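🔹 CNN architecture

1. Convolutional and pooling layers extract the main features (feature maps) of the input image.

2. The extracted feature maps are flattened into a 1D vector and passed through the fully connected layers.

3. The output layer applies the softmax activation function to produce the final result.

① Input layer

• The first layer the input image passes through

• 3D data: height, width, channels

• The number of channels is 1 for a grayscale image and 3 for an RGB color image.

→ For example, a 4x4 RGB image can be represented with shape (4, 4, 3). PyTorch itself stores it channel-first, as (3, 4, 4).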

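② Convolutional layer

• Extracts features from the input data.

• It extracts image features using a filter (= kernel). The result is called a feature map.

• The kernel sweeps over every region of the image to extract features. Kernels of size 3x3 and 5x5 are typical.

• The kernel moves step by step, by a fixed interval called the stride.

(See the textbook figure for the sliding process.)

• Sliding by the stride, the kernel performs a convolution at every input position to build a new feature map. Without padding, this map is smaller than the original image.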

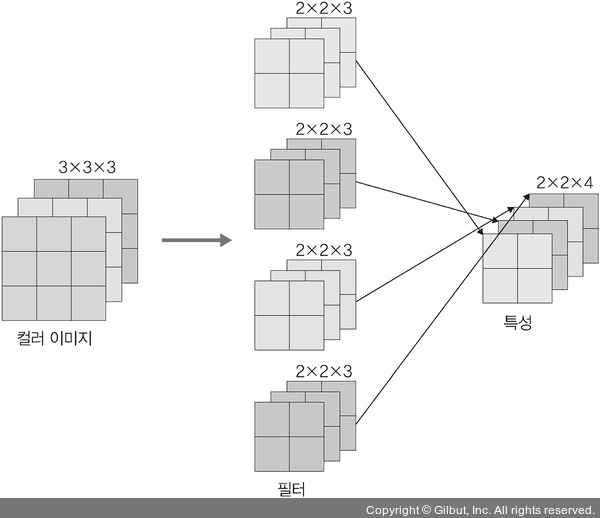
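The following sketch makes the sliding-window idea concrete. It slides a 3x3 kernel over a 5x5 input by hand and compares the result with PyTorch's `F.conv2d`. The input values and kernel are arbitrary examples. Like most deep learning libraries, PyTorch's "convolution" is actually cross-correlation, meaning the kernel is not flipped, and that is what the loop computes.

```python
import torch
import torch.nn.functional as F

def conv2d_naive(x, k, stride=1):
    """Slide kernel k over 2D input x (no padding) and sum the elementwise products."""
    H, W = x.shape
    kh, kw = k.shape
    out_h = (H - kh) // stride + 1
    out_w = (W - kw) // stride + 1
    out = torch.zeros(out_h, out_w)
    for i in range(out_h):
        for j in range(out_w):
            patch = x[i * stride:i * stride + kh, j * stride:j * stride + kw]
            out[i, j] = (patch * k).sum()
    return out

x = torch.arange(25, dtype=torch.float32).reshape(5, 5)   # example 5x5 "image"
k = torch.tensor([[1., 0., -1.],
                  [1., 0., -1.],
                  [1., 0., -1.]])                          # example 3x3 kernel

naive = conv2d_naive(x, k, stride=1)
# F.conv2d expects input (N, C, H, W) and weights (out_C, in_C, kH, kW)
ref = F.conv2d(x[None, None], k[None, None], stride=1)[0, 0]

print(naive.shape)                             # torch.Size([3, 3]): smaller than 5x5
print(torch.allclose(naive, ref))              # True
print(conv2d_naive(x, k, stride=2).shape)      # torch.Size([2, 2]): larger stride, smaller output
```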

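• Convolution on a color image

→ The filter has 3 channels.

→ ⭐⭐ Key point: each of the R, G and B channels is convolved with its own set of weights, and the results are summed into a single output.

→ Because the filter has 3 channels, it is easy to mistake it for 3 filters, but it is a single filter!! (Think of one image represented by three RGB channels.)

• With two or more filters

→ Each filter produces one channel of the output feature map. (4 filters 👉 a 2x2x4 feature map)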
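This sketch checks both claims with `nn.Conv2d` on a random 4x4 RGB input. The values are random; only the shapes matter. It assumes PyTorch's (N, C, H, W) layout, in which the (4, 4, 3) image above becomes (1, 3, 4, 4).

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(1, 3, 4, 4)  # one 4x4 RGB image in (N, C, H, W) layout

# A single filter spanning all 3 input channels -> a single output channel
one_filter = nn.Conv2d(in_channels=3, out_channels=1, kernel_size=3, bias=False)
print(one_filter.weight.shape)  # torch.Size([1, 3, 3, 3]): 1 filter, 3 channels, 3x3

with torch.no_grad():
    out_one = one_filter(x)                  # shape (1, 1, 2, 2)
    w = one_filter.weight[0]                 # (3, 3, 3): one 3x3 slice per input channel
    manual = torch.zeros(2, 2)
    for i in range(2):
        for j in range(2):
            # multiply each channel by its own slice, then sum over all channels
            manual[i, j] = (x[0, :, i:i + 3, j:j + 3] * w).sum()
    print(torch.allclose(manual, out_one[0, 0], atol=1e-5))  # True

    # Four filters -> four output channels: a 2x2x4 feature map
    four_filters = nn.Conv2d(in_channels=3, out_channels=4, kernel_size=3)
    out_four = four_filters(x)
    print(out_four.shape)  # torch.Size([1, 4, 2, 2])
```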

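③ Pooling layer

• Like convolution, pooling downsamples the feature map (reduces its spatial size). This lowers the computation and keeps the main features, so that learning is more effective.

(1) Max pooling: returns the maximum value in the target region

(2) Average pooling: returns the average of the target region

→ Most CNNs use max pooling.

→ The reason is that average pooling averages every value in the window, so strong activations (the important features) can be diluted.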
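This small sketch compares the two pooling types on a hand-written 4x4 feature map (values chosen arbitrarily), using a 2x2 window and stride 2. The strong activation 9 survives max pooling unchanged, while average pooling dilutes it to 6.

```python
import torch
import torch.nn as nn

fmap = torch.tensor([[[[1., 3., 2., 4.],
                       [5., 6., 1., 0.],
                       [7., 2., 9., 8.],
                       [0., 1., 3., 4.]]]])  # shape (1, 1, 4, 4)

max_out = nn.MaxPool2d(kernel_size=2, stride=2)(fmap)
avg_out = nn.AvgPool2d(kernel_size=2, stride=2)(fmap)
print(max_out[0, 0])  # values [[6, 4], [7, 9]]
print(avg_out[0, 0])  # values [[3.75, 1.75], [2.5, 6.0]]
```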

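④ Fully connected layer

• The convolutional and pooling layers reduce the dimensions of the feature map. The feature map is then flattened from a 3D tensor into a 1D vector and passed to the fully connected layer.

⑤ Output layer

• The softmax activation function maps the outputs to values between 0 and 1 that sum to 1. These are the probabilities that the image belongs to each label, and the label with the highest probability is chosen as the final prediction.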
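Here is a minimal sketch of this step, using made-up scores for three of the FashionMNIST classes. In PyTorch training code, the model usually returns raw scores (logits), and `nn.CrossEntropyLoss` applies log-softmax internally. Softmax is therefore mainly needed when you want explicit probabilities.

```python
import torch

class_names = ['T-Shirt', 'Trouser', 'Pullover']   # hypothetical 3-class subset
logits = torch.tensor([2.0, 1.0, 0.1])             # made-up raw scores from a model

probs = torch.softmax(logits, dim=0)    # each value in (0, 1), summing to 1
predicted = class_names[probs.argmax().item()]
print(probs, probs.sum().item())
print(predicted)                        # T-Shirt
```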

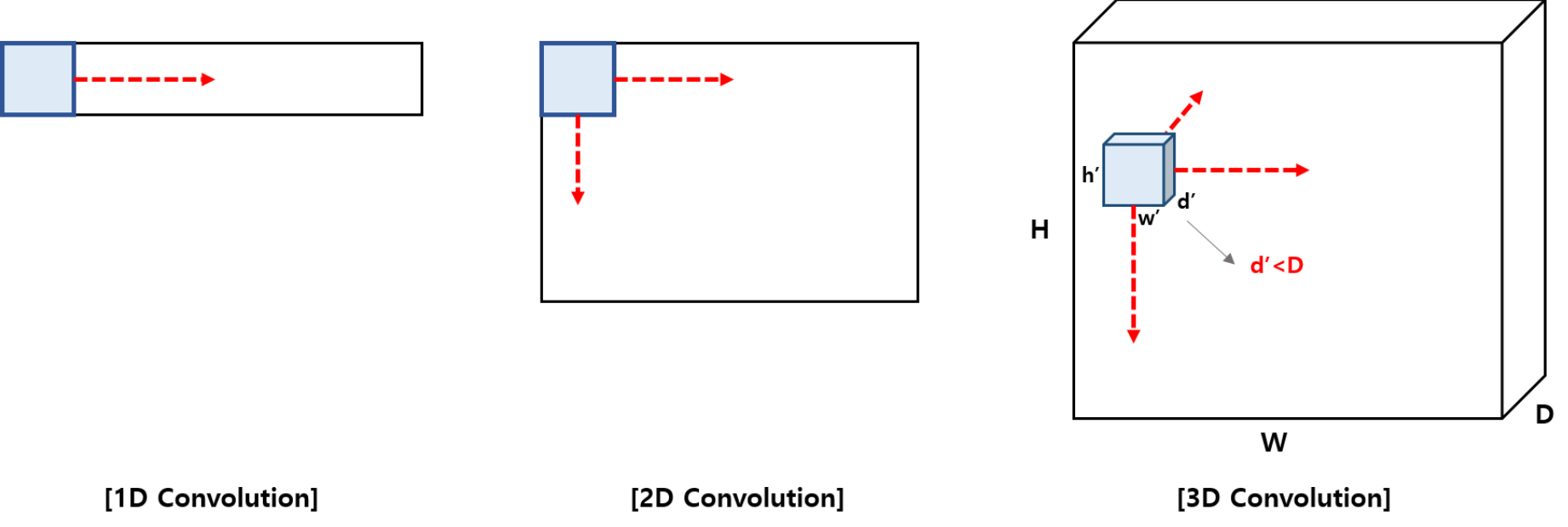

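🔹 1D, 2D and 3D convolution

Convolutions can be classified as 1D, 2D or 3D according to the number of directions the filter moves in and the shape of the output.

The distinction depends on the number of directions the filter slides in, not on the dimensionality of the input data!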

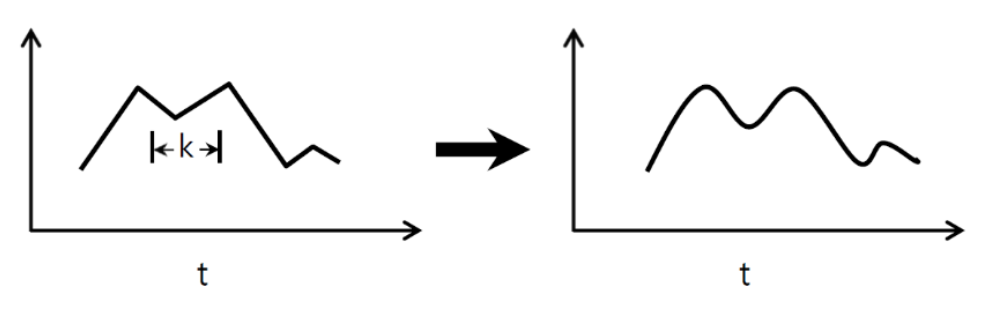

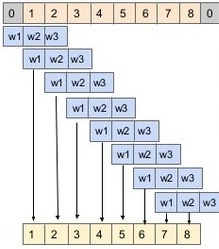

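① 1D convolution

• The filter moves only left and right, along the time axis.

• Mainly used on sequence data, e.g. in NLP.

• Input: [1,1,1,1,1], filter: [0.25, 0.5, 0.25]

→ With stride = 1 and no padding, the filter slides to the right one step at a time and produces the output [1,1,1]. The output is a 1D array. This kind of filter is often used to smooth a curve in a graph.

• Input: W, filter: k, output: W (with padding that preserves the length; without padding the output has W - k + 1 elements, as in the example above)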

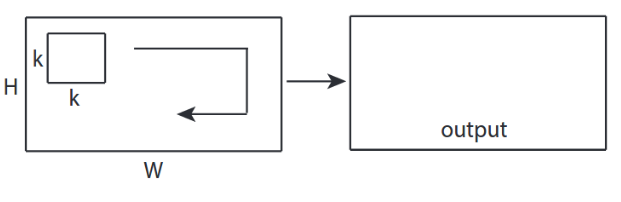
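The example above can be reproduced with `F.conv1d`, which expects (batch, channels, length) tensors. The second call adds one zero of padding on each side, which keeps the output length equal to W.

```python
import torch
import torch.nn.functional as F

signal = torch.tensor([[[1., 1., 1., 1., 1.]]])   # (N=1, C=1, W=5)
kernel = torch.tensor([[[0.25, 0.5, 0.25]]])      # (out_C=1, in_C=1, k=3)

valid = F.conv1d(signal, kernel, stride=1)             # no padding: length W - k + 1 = 3
same = F.conv1d(signal, kernel, stride=1, padding=1)   # padding=1 keeps length W = 5
print(valid)  # values [1, 1, 1]
print(same)   # values [0.75, 1, 1, 1, 0.75]: the zero padding lowers the edges
```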

② 2D convolution

• The form of convolution usually applied to image data.

• The filter moves in two directions: height and width.

• Input: (W, H), filter: (k, k), output: (W, H)

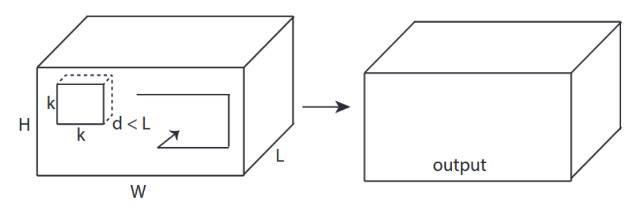

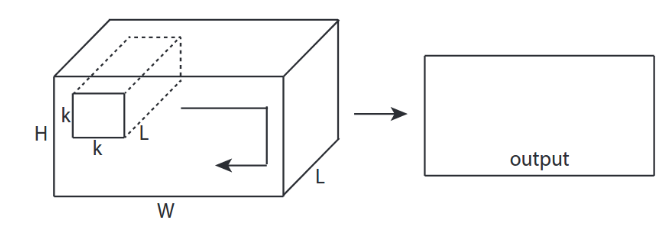

③ 3D convolution

• The filter can move in three directions: height, width and depth (x, y, z).

• Input: (W, H, L), filter: (k, k, d), output: (W, H, L), with d < L

• Effective for 3D medical images and for event detection in videos.

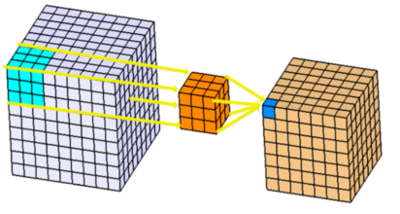

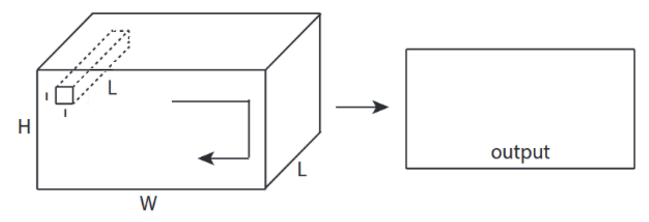

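④ 2D convolution with a 3D input

• The input is 3D, but the output is a 2D matrix.

• Input: (W, H, L), filter: (k, k, L), output: (W, H)

• Because the filter depth equals the input depth L, the filter can only slide along the height and width.

• Typical examples: LeNet-5, VGG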
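The three cases can be told apart by tensor shapes in PyTorch. This sketch uses random inputs with arbitrary sizes (32x32 images, 16 frames or channels). It also uses padding=1 so the spatial size is preserved, matching the (W, H) and (W, H, L) outputs listed above.

```python
import torch
import torch.nn as nn

# 2D convolution: the kernel slides along height and width
img = torch.randn(1, 1, 32, 32)                     # (N, C, H, W)
conv2d = nn.Conv2d(1, 1, kernel_size=3, padding=1)
out2d = conv2d(img)
print(out2d.shape)                                  # torch.Size([1, 1, 32, 32])

# 3D convolution: the kernel also slides along depth (e.g. video frames, CT slices)
volume = torch.randn(1, 1, 16, 32, 32)              # (N, C, D, H, W)
conv3d = nn.Conv3d(1, 1, kernel_size=(3, 3, 3), padding=1)
out3d = conv3d(volume)
print(out3d.shape)                                  # torch.Size([1, 1, 16, 32, 32])

# 2D convolution on a 3D input (L = 16 channels): the kernel spans all channels,
# so it only slides over H and W and each filter yields a 2D map
stacked = torch.randn(1, 16, 32, 32)
conv2d_deep = nn.Conv2d(16, 1, kernel_size=3, padding=1)
out_deep = conv2d_deep(stacked)
print(conv2d_deep.weight.shape, out_deep.shape)     # (1, 16, 3, 3) and (1, 1, 32, 32)
```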

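⑤ 1x1 convolution

• Applies a (1x1xL) filter to a 3D input to produce a 2D output.

• Input: (W, H, L), filter: (1, 1, L), output: (W, H)

• Using several 1x1 filters adjusts (usually reduces) the number of channels, which reduces the computation of the following layers. GoogLeNet is a representative network that uses it.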
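This sketch shows why the 1x1 convolution saves computation. It compares a direct 3x3 convolution from 256 to 64 channels with a version that first squeezes the channels to 32 using a 1x1 convolution. The channel counts are arbitrary assumptions. GoogLeNet's Inception modules use the same idea with their own sizes.

```python
import torch
import torch.nn as nn

def n_params(module):
    """Count the learnable parameters of a module."""
    return sum(p.numel() for p in module.parameters())

x = torch.randn(1, 256, 28, 28)   # hypothetical 256-channel feature map

# Direct 3x3 convolution: 256 -> 64 channels
direct = nn.Conv2d(256, 64, kernel_size=3, padding=1)

# Bottleneck: a 1x1 conv reduces 256 -> 32 channels, then a 3x3 conv maps 32 -> 64
bottleneck = nn.Sequential(
    nn.Conv2d(256, 32, kernel_size=1),
    nn.Conv2d(32, 64, kernel_size=3, padding=1),
)

out_direct, out_bottleneck = direct(x), bottleneck(x)
print(out_direct.shape, out_bottleneck.shape)   # both torch.Size([1, 64, 28, 28])
print(n_params(direct), n_params(bottleneck))   # 147520 26720
```

Both produce the same output shape, but the bottleneck version needs roughly 5.5x fewer weights, and therefore fewer multiply-adds per position.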

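2️⃣ A first taste of CNNs

🔹 Data setup

✔ fashion_mnist dataset: an example dataset provided through torchvision. It is a collection of small images of items such as sneakers, shirts and sandals. It has 10 classes and consists of 70,000 grayscale images of 28x28 pixels (60,000 for training and 10,000 for testing).

- train_images: 28x28 arrays of pixel values between 0 and 255

- train_labels: integers from 0 to 9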

① Importing libraries

```python
import numpy as np
import matplotlib.pyplot as plt
import torch
import torch.nn as nn
from torch.autograd import Variable
import torch.nn.functional as F
import torchvision
import torchvision.transforms as transforms  # library used for data preprocessing
from torch.utils.data import Dataset, DataLoader

# Use the GPU if available, otherwise the CPU
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
```

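• Using GPUs

(1) Code for a single GPU

```python
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
model = Net()  # Net: placeholder for your own nn.Module subclass
model.to(device)
```

(2) Code for multiple GPUs: nn.DataParallel

```python
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = Net()  # Net: placeholder for your own nn.Module subclass
if torch.cuda.device_count() > 1:
    model = nn.DataParallel(model)  # split each input batch across all visible GPUs
model.to(device)
```

→ With nn.DataParallel, each input batch is automatically split across the GPUs. To keep the per-GPU batch size the same, increase the batch size in proportion to the number of GPUs.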

② Downloading the dataset

```python
train_dataset = torchvision.datasets.FashionMNIST('../sample', download=True,
                                                  transform=transforms.Compose([transforms.ToTensor()]))
test_dataset = torchvision.datasets.FashionMNIST('../sample', download=True, train=False,
                                                 transform=transforms.Compose([transforms.ToTensor()]))
```

• First argument: the directory where FashionMNIST is downloaded

• With download=True, the dataset is downloaded only if it is not already present in that directory.

• transform: converts each image into a tensor with values scaled to 0~1.

③ Passing the data to a DataLoader

```python
train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=100)
test_loader = torch.utils.data.DataLoader(test_dataset, batch_size=100)
```

• DataLoader loads the data in batches of the desired size and can also shuffle the order randomly (shuffle=True).

• batch_size = 100: the data is loaded in groups of 100 samples.
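This sketch shows how batching works, using a `TensorDataset` of 1,000 fake 1x28x28 images as a stand-in for FashionMNIST. The data is synthetic. Each batch has the (100, 1, 28, 28) shape that the training loop later expects.

```python
import torch
from torch.utils.data import TensorDataset, DataLoader

images = torch.rand(1000, 1, 28, 28)        # fake images with values in [0, 1)
labels = torch.randint(0, 10, (1000,))      # fake labels 0..9
dataset = TensorDataset(images, labels)

loader = DataLoader(dataset, batch_size=100, shuffle=True)  # reshuffled every epoch
batch_images, batch_labels = next(iter(loader))
print(batch_images.shape, batch_labels.shape)  # torch.Size([100, 1, 28, 28]) torch.Size([100])
print(len(loader))                             # 10 batches per epoch
```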

④ Defining the classes used for classification

```python
labels_map = {0: 'T-Shirt', 1: 'Trouser', 2: 'Pullover', 3: 'Dress', 4: 'Coat', 5: 'Sandal', 6: 'Shirt',
              7: 'Sneaker', 8: 'Bag', 9: 'Ankle Boot'}

fig = plt.figure(figsize=(8, 8))  # figure width and height, in inches
columns = 4
rows = 5
for i in range(1, columns * rows + 1):
    # random index in [0, len(train_dataset) - 1]
    img_xy = np.random.randint(len(train_dataset))
    # each sample is a (1, 28, 28) tensor; [0, :, :] takes its single channel -> a 2D (28, 28) array
    img = train_dataset[img_xy][0][0, :, :]
    # draw the image
    fig.add_subplot(rows, columns, i)
    plt.title(labels_map[train_dataset[img_xy][1]])
    plt.axis('off')
    plt.imshow(img, cmap='gray')
plt.show()
```

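🌠 Comparing rand, randint and randn

| np.random.randint( )

- np.random.randint(10): a random integer from 0 to 9 (the upper bound 10 is excluded)

- np.random.randint(1, 10): a random integer from 1 to 9

| np.random.rand( )

- np.random.rand(8): 8 random numbers drawn uniformly from [0, 1), returned as an array of shape (8,)

- np.random.rand(4,2): uniform random numbers in [0, 1), returned as a (4x2) array

| np.random.randn( )

- np.random.randn(8): 8 random numbers from the standard normal (Gaussian) distribution, with mean 0 and standard deviation 1, returned as an array of shape (8,)

- np.random.randn(4,2): standard normal random numbers, returned as a (4x2) array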
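A quick sketch that checks these ranges and shapes empirically. The seed and sample sizes are arbitrary.

```python
import numpy as np

np.random.seed(0)
a = np.random.randint(10, size=1000)     # integers 0..9; the upper bound 10 is excluded
b = np.random.randint(1, 10, size=1000)  # integers 1..9
u8 = np.random.rand(8)                   # uniform floats in [0, 1), shape (8,)
u = np.random.rand(4, 2)                 # uniform floats in [0, 1), shape (4, 2)
g = np.random.randn(100_000)             # standard normal samples

print(a.min() >= 0, a.max() <= 9)        # True True
print(b.min() >= 1, b.max() <= 9)        # True True
print(u8.shape, u.shape)                 # (8,) (4, 2)
print(g.mean(), g.std())                 # close to 0 and 1
```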

🔹 Building the models

① Building a deep neural network model (a network without convolution)

```python
class FashionMNIST(torch.nn.Module):
    # A model defined as a class always inherits from torch.nn.Module.
    def __init__(self):  # initializes the object's attributes; called automatically on creation
        super(FashionMNIST, self).__init__()  # run the parent class (nn.Module) initializer
        # nn.Linear: fully connected layer computing y = xW^T + b
        # in_features: size of each input sample, out_features: size of each output sample
        self.fc1 = nn.Linear(in_features=784, out_features=256)
        # during training, each element is zeroed with probability 0.25 and
        # the remaining elements are multiplied by 1/(1-0.25)
        self.drop = nn.Dropout(0.25)
        self.fc2 = nn.Linear(in_features=256, out_features=128)
        self.fc3 = nn.Linear(in_features=128, out_features=10)

    def forward(self, input_data):  # forward pass: compute the prediction y from the input x
        # must be named forward; it runs automatically when the model is called with data
        # view works like reshape: a 2D tensor with 784 columns, row count inferred (-1)
        out = input_data.view(-1, 784)
        out = F.relu(self.fc1(out))
        out = self.drop(out)
        out = F.relu(self.fc2(out))
        out = self.fc3(out)
        return out
```

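• A model defined as a class always inherits from torch.nn.Module.

• __init__() initializes the object's attributes and is called automatically when the object is created.

• nn: the package that collects the modules needed to build deep learning models

• nn.Dropout(p): during training, each element of the tensor becomes 0 with probability p, and the remaining elements are multiplied by 1/(1-p). In evaluation mode (model.eval()) it leaves the input unchanged.

• forward(): runs automatically when the object is called with data, and performs the forward pass. It must be named forward.

• data.view(): changes the shape of a tensor, like reshape, in PyTorch.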
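This sketch demonstrates the Dropout scaling rule and the `view` reshape on dummy tensors. The sizes and seed are arbitrary.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
drop = nn.Dropout(p=0.25)
x = torch.ones(10000)

drop.train()                           # dropout is only active in training mode
y = drop(x)
zero_frac = (y == 0).float().mean().item()
kept = y[y != 0]
print(zero_frac)                       # roughly 0.25 of the values are zeroed
print(kept.unique())                   # survivors scaled by 1 / (1 - 0.25), about 1.3333

drop.eval()                            # in evaluation mode dropout is the identity
eval_out = drop(x)
print(torch.equal(eval_out, x))        # True

# view: reshape (100, 1, 28, 28) into (100, 784); -1 lets PyTorch infer that dimension
batch = torch.randn(100, 1, 28, 28)
flat = batch.view(-1, 784)
print(flat.shape)                      # torch.Size([100, 784])
```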

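🌠 Two ways to specify activation functions (and other layers)

1. Defined in forward() → F.relu() = nn.functional.relu()

```python
import torch
import torch.nn.functional as F

inputs = torch.randn(64, 3, 224, 224)
weights = torch.randn(64, 3, 3, 3)
bias = torch.randn(64)
outputs = F.conv2d(inputs, weights, bias, padding=1)
```

∘ The inputs and the weights must be passed in directly, so you have to define (and manage) the weight tensors yourself every time they are passed.

2. Defined in __init__() → nn.ReLU()

```python
import torch
import torch.nn as nn

inputs = torch.randn(64, 3, 224, 224)
conv = nn.Conv2d(in_channels=3, out_channels=64, kernel_size=3, padding=1)
outputs = conv(inputs)
layer = nn.Conv2d(1, 1, 3)  # 1 input channel, 1 output channel, 3x3 kernel
```

∘ The layer object creates and holds its own weights, so only the input needs to be passed.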
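For an activation such as ReLU, which has no learnable parameters, the two styles compute the same thing. This sketch builds two tiny hypothetical models, one using `nn.ReLU` defined in `__init__()` and one calling `F.relu` in `forward()`. It copies the same linear weights into both and confirms identical outputs. The module form is mostly a matter of style: it appears in `print(model)` and can be placed inside `nn.Sequential`.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class WithModule(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(4, 3)
        self.relu = nn.ReLU()          # activation defined as a layer in __init__()

    def forward(self, x):
        return self.relu(self.fc(x))

class WithFunctional(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(4, 3)

    def forward(self, x):
        return F.relu(self.fc(x))      # activation applied as a function in forward()

torch.manual_seed(0)
a, b = WithModule(), WithFunctional()
b.fc.load_state_dict(a.fc.state_dict())   # give both models the same weights
x = torch.randn(2, 4)
out_a, out_b = a(x), b(x)
print(torch.equal(out_a, out_b))          # True
```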

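② Defining parameters

• Before training, define the loss function, learning rate and optimizer.

```python
learning_rate = 0.001  # learning rate
model = FashionMNIST()  # the DNN class defined above
model.to(device)
criterion = nn.CrossEntropyLoss()  # loss function for classification
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)  # optimizer
print(model)
```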

🔹 Training the models

① Training the deep neural network (DNN) model

• Defining parameters

```python
learning_rate = 0.001  # learning rate
model = FashionMNIST()
model.to(device)
criterion = nn.CrossEntropyLoss()  # loss function for classification
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)  # optimizer
print(model)
```

• 모델 학습

num_epochs = 5

count = 0

loss_list = [] # 오차

iteration_list = [] # 반복횟수

accuracy_list = [] # 정확도

predictions_list = [] # 예측값

labels_list = [] # 실제값

for epoch in range(num_epochs) : # 전체 데이터를 5번 훈련

for images, labels in train_loader :

images, labels = images.to(device), labels.to(device) #🚩

train = Variable(images.view(100,1,28,28)) #🚩 자동미분

labels = Variable(labels)

outputs = model(train)

loss = criterion(outputs, labels)

optimizer.zero_grad()

loss.backward()

optimizer.step()

count += 1

if not (count%50) : # 50번 반복마다 예측

total = 0

correct = 0

for images, labels in test_loader :

images, labels = images.to(device) , labels.to(device)

labels_list.append(labels)

test = Variable(images.view(100,1,28,28))

outputs = model(test)

predictions = torch.max(outputs, 1)[1].to(device)

predictions_list.append(predictions)

correct += (predictions == labels).sum()

total += len(labels)

accuracy = correct*100/total #🚩

loss_list.append(loss.data)

iteration_list.append(count)

accuracy_list.append(accuracy)

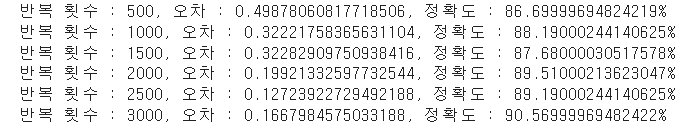

if not (count % 500) : # 500 반복 마다

print('반복 횟수 : {}, 오차 : {}, 정확도 : {}%'.format(count, loss.data, accuracy))

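↪ The model and the data must be on the same device. If model.to(device) moved the model to the GPU, images.to(device) and labels.to(device) must move the data to the GPU as well.

↪ Variable from the torch.autograd package wraps a tensor so that the gradients for backpropagation are computed automatically. Older PyTorch versions required this wrapping. Since PyTorch 0.4, Variable is deprecated: tensors support automatic differentiation directly, and Variable(x) simply returns a tensor.

↪ Accuracy is the ratio of correct predictions to all predictions.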
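This sketch breaks down the accuracy computation used in the loop, with made-up logits for 4 samples and 3 classes. `torch.max(outputs, 1)` returns a (values, indices) pair, and the indices are the predicted classes.

```python
import torch

outputs = torch.tensor([[0.1, 2.0, -1.0],
                        [3.0, 0.5, 0.2],
                        [0.2, 0.3, 1.5],
                        [1.0, 0.9, 0.8]])   # hypothetical logits: 4 samples, 3 classes
labels = torch.tensor([1, 0, 1, 0])

values, predictions = torch.max(outputs, 1)  # max over the class dimension
correct = (predictions == labels).sum().item()
accuracy = correct * 100 / len(labels)
print(predictions.tolist())   # [1, 0, 2, 0]
print(accuracy)               # 75.0
```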

② Building and training the convolutional network

```python
class FashionCNN(nn.Module):
    def __init__(self):
        super(FashionCNN, self).__init__()
        self.layer1 = nn.Sequential(  # 🚩
            nn.Conv2d(in_channels=1, out_channels=32, kernel_size=3, padding=1),  # 🚩
            nn.BatchNorm2d(32),  # 🚩
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2)  # 🚩
        )
        self.layer2 = nn.Sequential(
            nn.Conv2d(in_channels=32, out_channels=64, kernel_size=3),
            nn.BatchNorm2d(64),
            nn.ReLU(),
            nn.MaxPool2d(2)
        )
        self.fc1 = nn.Linear(in_features=64*6*6, out_features=600)
        # plain Dropout: fc1 outputs a 2D (batch, features) tensor, while Dropout2d expects feature maps
        self.drop = nn.Dropout(0.25)
        self.fc2 = nn.Linear(in_features=600, out_features=120)
        self.fc3 = nn.Linear(in_features=120, out_features=10)

    def forward(self, x):
        out = self.layer1(x)
        out = self.layer2(out)
        out = out.view(out.size(0), -1)  # flatten to (batch, 64*6*6)
        out = self.fc1(out)
        out = self.drop(out)
        out = self.fc2(out)
        out = self.fc3(out)
        return out
```

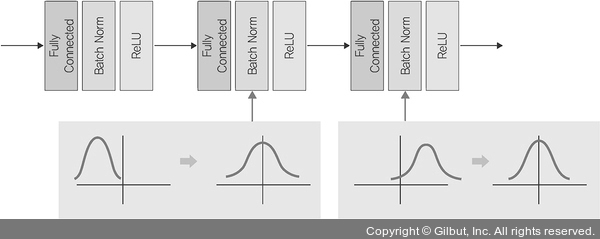

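• nn.Sequential: lets you define the network in __init__ and write the forward pass as a readable stack of layers. It is a good fit when the data passes through the layers one after another, in order.

• Convolutional layer: extracts image features through the convolution operation

nn.Conv2d(in_channels=1, out_channels=32, kernel_size=3, padding=1)

👉 in_channels: the number of input channels, usually 1 for grayscale and 3 for color images.

※ If you picture the data in 3D, the channels correspond to its 'depth'.

👉 out_channels: the number of output channels (i.e. the number of filters)

👉 kernel_size: the kernel holds the shared parameters used to detect image features, and these kernel parameters are exactly what a CNN learns. Setting it to 3 gives a square (3x3) kernel.

👉 padding: fills the border of the input with zeros to control the output size. The larger the padding, the larger the output.

• BatchNorm2d: normalizes each channel using the mean and variance computed over the mini-batch, so that the data is rescaled to mean 0 and variance 1 even when batches have different distributions. Learnable scale and shift parameters are then applied.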
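This sketch checks the normalization on a hypothetical batch whose channels have mean about 3 and standard deviation about 5. At initialization the learnable scale is 1 and the shift is 0. In training mode, each output channel should therefore have mean about 0 and variance about 1, computed over the batch, height and width dimensions.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(8, 32, 14, 14) * 5 + 3   # hypothetical batch: per-channel mean ~3, std ~5
bn = nn.BatchNorm2d(32)                  # learnable gamma=1, beta=0 at initialization
bn.train()                               # training mode: normalize with this batch's statistics

with torch.no_grad():
    y = bn(x)

# Statistics per channel, over the batch, height and width dimensions
mean = y.mean(dim=(0, 2, 3))
var = y.var(dim=(0, 2, 3), unbiased=False)
print(mean.abs().max().item())           # close to 0
print((var - 1).abs().max().item())      # close to 0, i.e. variance close to 1
```

In evaluation mode (`bn.eval()`), the layer uses running averages collected during training instead of the current batch's statistics.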

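• MaxPool2d: used to reduce the image size. The larger the stride, the smaller the output.

nn.MaxPool2d(kernel_size=2, stride=2)

👉 kernel_size: the size (m x n) of the pooling window. Unlike a convolution kernel, it has no weights.

👉 stride: the step by which the window moves over the input. A larger stride gives a smaller output.

• nn.Linear: converts the image-shaped data into a 1D array for classification. The output size of the Conv2d and pooling layers depends on their padding and stride, so in_features must be calculated and entered by hand. (See the textbook for the formula.)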
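The standard output-size rule for convolution and pooling layers (with dilation 1) is floor((size + 2*padding - kernel) / stride) + 1. This sketch traces a 28x28 input through FashionCNN's layers with that rule and cross-checks the result with a dummy forward pass. BatchNorm and ReLU are omitted because they do not change the shape.

```python
import torch
import torch.nn as nn

def conv_out(size, kernel, stride=1, padding=0):
    """Output size of a conv/pool layer: floor((size + 2*padding - kernel) / stride) + 1."""
    return (size + 2 * padding - kernel) // stride + 1

s = conv_out(28, 3, padding=1)   # layer1 Conv2d(k=3, p=1) -> 28
s = conv_out(s, 2, stride=2)     # layer1 MaxPool2d(2, 2)  -> 14
s = conv_out(s, 3)               # layer2 Conv2d(k=3, p=0) -> 12
s = conv_out(s, 2, stride=2)     # layer2 MaxPool2d(2)     -> 6 (stride defaults to kernel_size)
print(s, 64 * s * s)             # 6 2304 -> in_features of fc1

# Cross-check with a dummy forward pass through the same conv/pool stack
features = nn.Sequential(
    nn.Conv2d(1, 32, 3, padding=1), nn.MaxPool2d(2, 2),
    nn.Conv2d(32, 64, 3), nn.MaxPool2d(2),
)
flat = features(torch.zeros(1, 1, 28, 28)).flatten(1)
print(flat.shape)                # torch.Size([1, 2304])
```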

• Defining parameters

```python
learning_rate = 0.001
model = FashionCNN()
model.to(device)
criterion = nn.CrossEntropyLoss()  # loss function
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)  # optimizer
print(model)
```

• Training results (the training code is the same as for the DNN)

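3️⃣ Transfer learning

💡 A method introduced to get around the practical difficulty of collecting a large dataset

Transfer learning means taking the weights of a model trained on a very large dataset such as ImageNet (a pre-trained model) and adjusting them to fit the task you want to solve.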

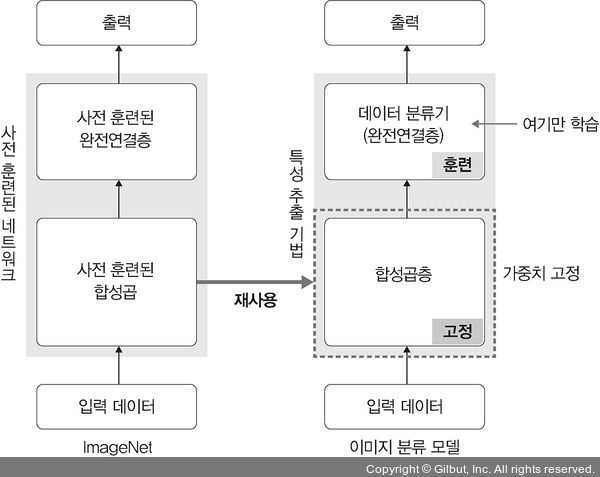

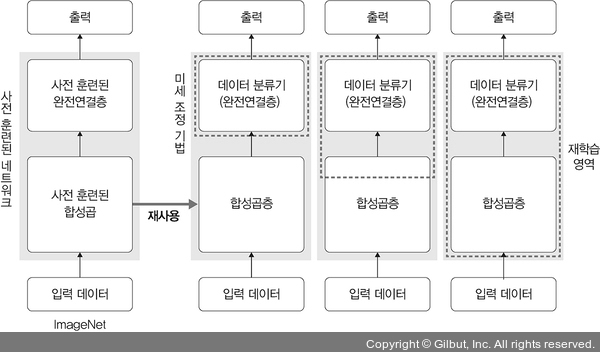

⭐ Transfer learning methods

(1) Feature extractor

(2) Fine-tuning

🔹 Preprocessing image data

```python
import torch
import torchvision
import torchvision.transforms as transforms

data_path = 'catanddog/train/'

transform = transforms.Compose(
    [
        transforms.Resize([256, 256]),
        transforms.RandomResizedCrop(224),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor()
    ]
)

train_dataset = torchvision.datasets.ImageFolder(
    data_path, transform=transform
)

train_loader = torch.utils.data.DataLoader(
    train_dataset,
    batch_size=32,
    num_workers=8,
    shuffle=True
)

print(len(train_dataset))  # 385
```

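• torchvision.transforms: transforms image data so that it can be used as model input

↪ Resize: resizes the image

↪ RandomResizedCrop: crops the image at a random size and aspect ratio, then resizes the crop to the given size (for data augmentation)

↪ RandomHorizontalFlip: randomly flips the image horizontally

↪ ToTensor: converts the image data into a tensor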

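🔹 Feature extraction

🌠 Feature extractor

• Take a model pre-trained on ImageNet and build only the final fully connected layer anew.

• New data is passed through the pre-trained network's convolutional layers (with frozen weights), and their output is used to train the data classifier (the fully connected layer).

• Available classification models

| Model |
|---|
| Xception |
| Inception V3 |
| ResNet50 |
| VGG16 |
| VGG19 |
| MobileNet |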

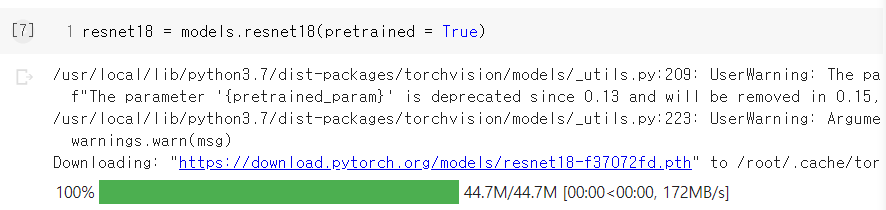

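🌠 Using a pretrained ResNet18 model

• Downloading the pretrained model

```python
from torchvision import models

# torchvision >= 0.13 prefers weights=models.ResNet18_Weights.DEFAULT over pretrained=True
resnet18 = models.resnet18(pretrained=True)
```

• Specifying whether the pretrained model's parameters are trained

param.requires_grad = False

👉 Indicates that gradients for these parameters do not need to be computed during backpropagation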

```python
# Specify whether the pretrained model's parameters are trained
def set_parameter_requires_grad(model, feature_extracting=True):
    if feature_extracting:
        for param in model.parameters():
            param.requires_grad = False  # 📌 no gradients needed for these parameters during backprop

set_parameter_requires_grad(resnet18)
```

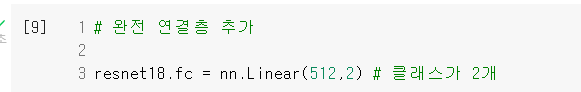

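• Adding a fully connected layer to ResNet18

```python
import torch.nn as nn

resnet18.fc = nn.Linear(512, 2)  # 512 features from the ResNet18 backbone; 2 = number of classes
```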

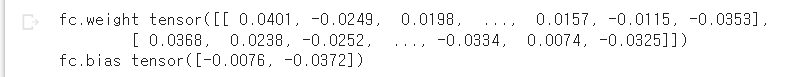

• Checking the model parameters

```python
# Print the parameters that will be trained
for name, param in resnet18.named_parameters():
    if param.requires_grad:
        print(name, param.data)
```

• Creating the model object and defining the loss function

```python
import torch
from torchvision import models

# Model object and loss function
model = models.resnet18(pretrained=True)
for param in model.parameters():
    param.requires_grad = False  # freeze the pretrained layers
model.fc = torch.nn.Linear(512, 2)
for param in model.fc.parameters():
    param.requires_grad = True  # the fully connected layer is trained
optimizer = torch.optim.Adam(model.fc.parameters())
cost = torch.nn.CrossEntropyLoss()  # loss function
print(model)
```

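🔹 Fine-tuning

🌠 Fine-Tuning

• Goes a step further than feature extraction by updating the weights of the pre-trained model's convolutional layers as well as the data classifier during training → retraining the pre-trained model, fully or partly, for your purpose

• Feature extraction works well only if the target features are extracted well, that is, if the features of ImageNet images resemble those of your images (say, product images from an e-commerce site). If the features are not extracted well, fine-tuning uses the new image data to update the network weights and re-extract the features.

• Fine-tuning requires a lot of computation, so be sure to use a GPU!

| Dataset size | Similarity to the pre-trained model's data | Fine-tuning strategy |
|---|---|---|
| Large | Low | Retrain the whole model. |
| Large | High | Train the later convolutional layers (those close to the fully connected layer) together with the data classifier. |
| Small | Low | With little data, fine-tuning some layers may not help. Choose carefully how far into the convolutional layers to retrain. |
| Small | High | Fine-tuning many layers can cause overfitting, so train only the fully connected layer. |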

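4️⃣ Explainable CNNs

🔹 Explainable CNN

• Techniques that present the results of deep learning models in a way humans can understand

• Black box: it is hard to explain how deep learning models work internally 👉 they need to be visualized to understand how they process data

• Visualization methods: (1) visualizing filters (2) visualizing feature maps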

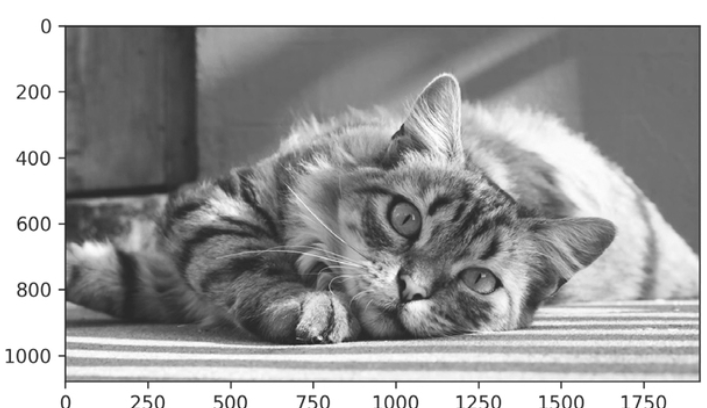

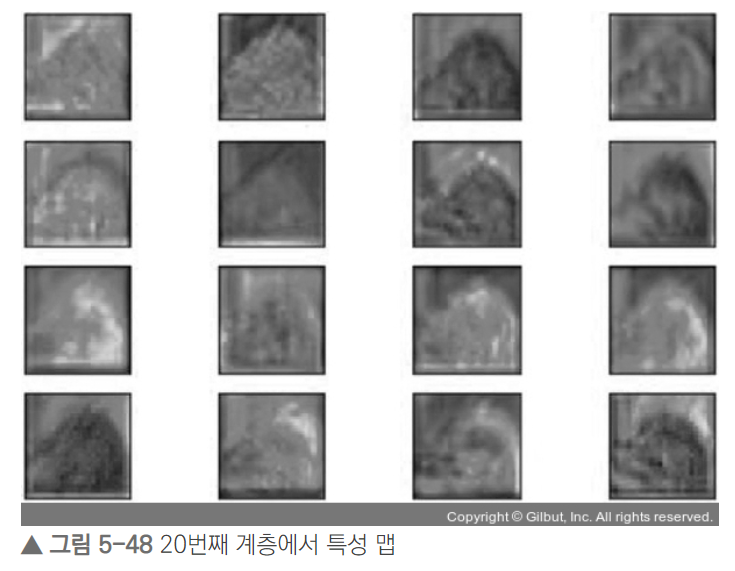

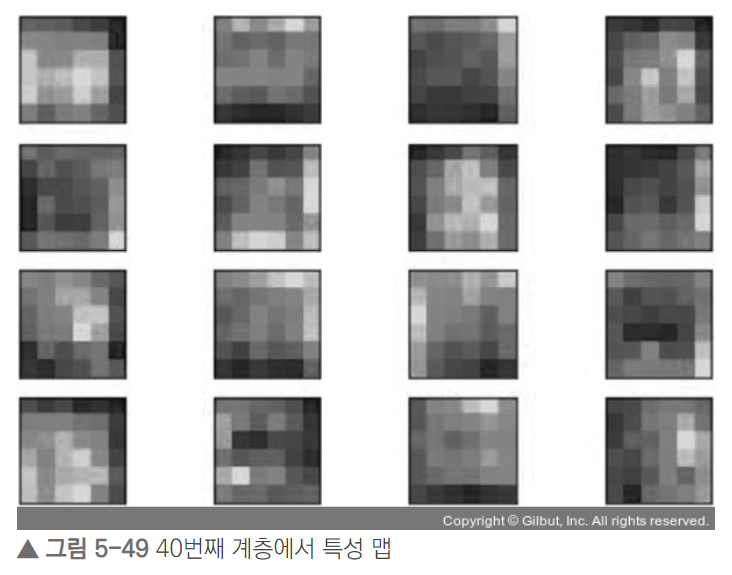

🌠 Feature map visualization

• Feature map: the result of applying a filter to the input

• Visualizing feature maps helps us understand how they detect features in the input.

```python
class LayerActivations:
    features = []

    def __init__(self, model, layer_num):
        # register_forward_hook: captures a module's input/output during the forward pass
        # 💡 hooks let us inspect intermediate results, here for feature map visualization
        # model must be indexable, e.g. an nn.Sequential such as vgg16().features
        self.hook = model[layer_num].register_forward_hook(self.hook_fn)

    def hook_fn(self, module, input, output):
        # detach from the graph and move to the CPU before converting to NumPy
        self.features = output.detach().cpu().numpy()

    def remove(self):
        self.hook.remove()
```

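✔ Results of the hook

👉 In the early layers, the shape of the input image is largely preserved. In the middle layers, the cat's outline gradually fades. Closer to the output layer, the original shape disappears and only the image's features are passed on.

💡 Visualizing a CNN's filters and feature maps helps establish the trustworthiness of its results.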
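The same hook mechanism can also be used without a helper class. This sketch registers a forward hook on one layer of a small stand-in `nn.Sequential` network, runs a random 32x32 RGB input through it, and keeps the captured feature maps in a dictionary. The network, input size and the name `'conv2'` are hypothetical. With a real model you would hook a layer of, e.g., a VGG feature extractor.

```python
import torch
import torch.nn as nn

model = nn.Sequential(                       # small stand-in CNN
    nn.Conv2d(3, 8, kernel_size=3, padding=1), nn.ReLU(),
    nn.Conv2d(8, 16, kernel_size=3, padding=1), nn.ReLU(),
)

feature_maps = {}

def save_output(name):
    # returns a hook that stores the module's output under `name`
    def hook(module, inputs, output):
        feature_maps[name] = output.detach().cpu()
    return hook

handle = model[2].register_forward_hook(save_output('conv2'))  # hook the second Conv2d
with torch.no_grad():
    model(torch.randn(1, 3, 32, 32))
handle.remove()                              # stop capturing once the activations are saved

fmap = feature_maps['conv2']
print(fmap.shape)   # torch.Size([1, 16, 32, 32]): 16 maps, e.g. plt.imshow(fmap[0, i], cmap='gray')
```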

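5️⃣ Graph Convolutional Networks

🔹 Graph Convolutional Network

🌠 Graph

• Node: an element

• Edge: how the elements are connected

→ The graph is constructed from domain expertise or intuition about the problem to be solved.

🌠 Graph neural networks (GNN)

∘ Representing graph data

(1) Adjacency matrix: represents whether each pair of nodes is connected, using 1 or 0

(2) Feature matrix: the adjacency matrix alone makes it hard to capture features, so an identity matrix is applied. The features to use are selected from the input data, and each row holds one node's values for the selected features.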

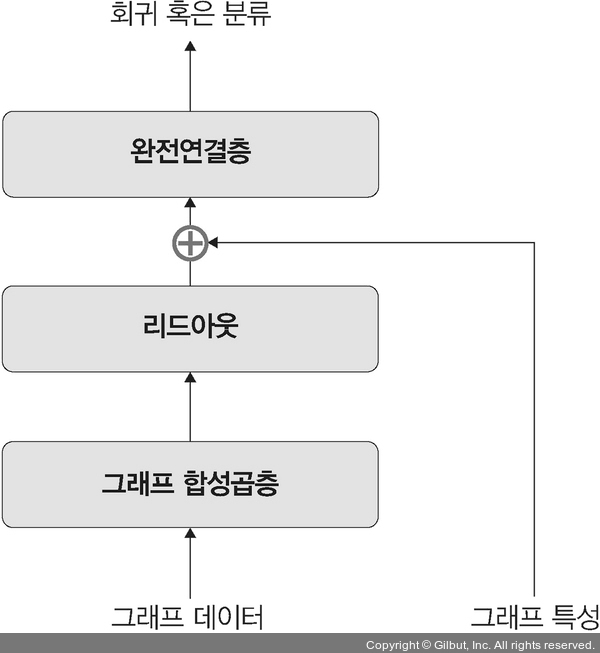

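🌠 Graph convolutional network (GCN)

• An algorithm that extends convolution on images to graph data

👉 Graph convolutional layer: converts graph-structured data into matrix form

👉 Readout: a function that turns the feature matrix into a single vector. It averages the node feature vectors to produce one vector representing the whole graph.

• Applications: social network (SNS) relationship networks, citation networks of academic papers, 3D meshes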
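The notes do not give the layer formula, so this sketch uses the widely used propagation rule of Kipf and Welling: H' = ReLU(D^(-1/2) (A + I) D^(-1/2) X W), where D is the degree matrix of A + I. A mean readout follows. The 4-node graph, the identity feature matrix and the random weight matrix W are all hypothetical.

```python
import torch

# Hypothetical 4-node undirected graph with edges 0-1, 0-2, 2-3
A = torch.tensor([[0., 1., 1., 0.],
                  [1., 0., 0., 0.],
                  [1., 0., 0., 1.],
                  [0., 0., 1., 0.]])
X = torch.eye(4)                        # feature matrix: identity, i.e. one-hot node IDs

A_hat = A + torch.eye(4)                # add self-loops so each node also keeps its own features
deg = A_hat.sum(dim=1)                  # node degrees, including the self-loop
D_inv_sqrt = torch.diag(deg.pow(-0.5))
A_norm = D_inv_sqrt @ A_hat @ D_inv_sqrt   # symmetric normalization

torch.manual_seed(0)
W = torch.randn(4, 2)                   # weight matrix (random here; learned in practice)
H = torch.relu(A_norm @ X @ W)          # one graph convolution: new node features, shape (4, 2)

graph_vector = H.mean(dim=0)            # readout: average node features into one graph vector
print(H.shape, graph_vector.shape)      # torch.Size([4, 2]) torch.Size([2])
```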
