✅ PyTorch Basics

https://colab.research.google.com/drive/1ki4W3rwTExhmZp5E-Ic81ab2NMe8iRHB?usp=sharing

[딥러닝 파이토치 교과서] chapter 02 파이토치 기초.ipynb (Colab notebook for [Deep Learning PyTorch Textbook] chapter 02, PyTorch basics)


1️⃣ PyTorch Overview

🔹 Features and Advantages

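∘ Started as a library for numerical computation → evolved into a deep learning framework

∘ A framework for manipulating tensors on the GPU and building dynamic neural networks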

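✔ GPU: speeds up computation; internally, operations run through APIs such as CUDA and cuDNN.

✔ Tensor: PyTorch's data type, a multidimensional array. Calling .cuda() on a tensor moves it to the GPU so that operations on it run there.

- axis 0: vector, a 1-D axis

- axis 1: matrix, a 2-D axis

- axis 2: tensor, a 3-D axis

✔ Dynamic neural network: a network whose structure can be changed on each training iteration (e.g., adding or removing hidden layers)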

import torch

torch.tensor([[1, -1], [1, -1]])  # the data must be one nested list: this creates a 2x2 tensor
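The axis description above can be checked directly with `ndim` and `shape`. A minimal sketch; the example tensors are arbitrary, and the `.cuda()` call only runs when a GPU is available:

```python
import torch

vector = torch.tensor([1, 2, 3])         # one axis (axis 0)
matrix = torch.tensor([[1, 2], [3, 4]])  # two axes (axis 0, axis 1)
tensor3d = torch.zeros(2, 3, 4)          # three axes (axis 0, 1, 2)

for t in (vector, matrix, tensor3d):
    print(t.ndim, tuple(t.shape))
# 1 (3,)
# 2 (2, 2)
# 3 (2, 3, 4)

if torch.cuda.is_available():
    gpu_matrix = matrix.cuda()  # a copy of the tensor that lives on the GPU
    print(gpu_matrix.device)
```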

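🔹 Architecture

∘ PyTorch API → PyTorch engine → computation layer (from top to bottom)

∘ PyTorch API: the library users work with directly; it sits at the top layer of the architecture.

∘ PyTorch engine: handles multidimensional tensors and automatic differentiation.

∘ Computation layer: performs the actual operations on tensors.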

∘ API

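- torch: a tensor package with GPU support that can perform large amounts of computation quickly.

- torch.autograd: the automatic differentiation package. This is what most distinguishes PyTorch from static-graph frameworks such as TensorFlow (1.x) and Caffe. In those frameworks, even a minor change to the network, such as changing the number of hidden-layer nodes, generally meant rebuilding the network from scratch. PyTorch instead records operations as they run and differentiates them automatically, so network changes are reflected in the computation immediately and users can try out many different networks.

- torch.nn: includes CNN and RNN layers, normalization, and more, making it easy to build neural networks.

- torch.multiprocessing: allows tensor memory to be shared across the processes PyTorch uses, so different processes can access and use the same data (tensor).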
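To make the autograd and dynamic-network points concrete, here is a minimal sketch. The `forward` function is hypothetical: it runs a different number of operations depending on a runtime argument, and autograd differentiates whichever graph was actually built on that call.

```python
import torch

def forward(x, n_steps):
    # The computation graph is recorded while the loop runs,
    # so n_steps may differ from one call to the next.
    for _ in range(n_steps):
        x = x * 2
    return x

grads = []
for n in (1, 3):
    x = torch.tensor(1.5, requires_grad=True)
    y = forward(x, n)   # y = 2**n * x
    y.backward()        # dy/dx = 2**n
    grads.append(x.grad.item())
print(grads)  # [2.0, 8.0]
```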

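∘ Storing tensors in memory

- Storage: whatever its number of dimensions, a tensor is stored in memory as a one-dimensional array. This flattened 1-D array is called the storage.

- Offset: the index in the storage at which the tensor's first element is stored.

- Stride: for each dimension, the number of storage elements (indices) to skip to reach the next element along that dimension; it describes the tensor's layout in memory. Because a contiguous tensor stores its elements in row-major order, the stride of its last dimension is always 1.

→ For a 2-D tensor, stride = (indices to skip to reach the next element down a column, i.e., the next row; indices to skip to reach the next element along a row, i.e., the next column)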
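Offset and stride can be inspected with `storage_offset()` and `stride()`. The sketch below uses an arbitrary 2x3 tensor and shows how row and column views reuse the same storage with different offsets and strides, and how a transpose only swaps the strides:

```python
import torch

t = torch.tensor([[1, 2, 3],
                  [4, 5, 6]])
print(t.stride())          # (3, 1): the next row is 3 elements away, the next column 1
print(t.storage_offset())  # 0: t[0, 0] is the first element of the storage

row = t[1]                 # view of the second row
print(row.storage_offset(), row.stride())  # 3 (1,)

col = t[:, 1]              # view of the second column
print(col.storage_offset(), col.stride())  # 1 (3,)

tt = t.t()                 # transpose: same storage, strides swapped
print(tt.stride(), tt.is_contiguous())     # (1, 3) False

# Element [i, j] sits at offset + i * stride[0] + j * stride[1] in the storage
flat = t.reshape(-1)       # for a contiguous tensor this matches the storage order
i, j = 1, 2
print(flat[t.storage_offset() + i * t.stride(0) + j * t.stride(1)].item(), t[i, j].item())  # 6 6
```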

2️⃣ PyTorch Basic Syntax

🔹 Working with Tensors

A. Creating and Converting Tensors

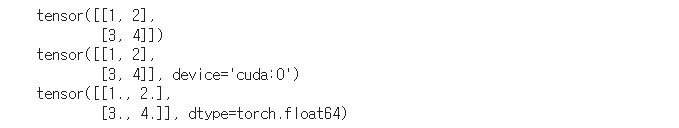

• torch.tensor( )

- device='cuda:0': option to create the tensor on the GPU

- dtype=torch.float64: option to create the tensor with a specific data type

# Creating and converting tensors
import torch

print(torch.tensor([[1, 2], [3, 4]]))  # create a 2-D tensor

if torch.cuda.is_available():
    print(torch.tensor([[1, 2], [3, 4]], device='cuda:0'))  # create the tensor on the GPU

print(torch.tensor([[1, 2], [3, 4]], dtype=torch.float64))  # create the tensor with a given dtype

• .numpy()

- Converts a tensor to an ndarray

- .to('cpu').numpy(): moves a tensor from the GPU to the CPU, then converts it to an ndarray (a GPU tensor can't be converted to NumPy directly)

# Converting a tensor to an ndarray
temp = torch.tensor([[1, 2], [3, 4]])
print(temp.numpy())

if torch.cuda.is_available():
    temp = torch.tensor([[1, 2], [3, 4]], device='cuda:0')
    print(temp.to('cpu').numpy())  # move to the CPU first, then convert

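B. Tensor Indexing

• Like arrays, you can specify an index directly or use slicing.

• torch.FloatTensor: 32-bit floating point (torch.float32)

• torch.DoubleTensor: 64-bit floating point (torch.float64)

• torch.LongTensor: 64-bit signed integer (torch.int64)

• Indexing: retrieve the desired elements with square brackets [ ].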

# Indexing & slicing
temp = torch.FloatTensor([1, 2, 3, 4, 5, 6, 7])
print(temp[0], temp[1], temp[-1])  # access by index
print('------------------------------------------')
print(temp[2:5], temp[4:-1])  # access by slice

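C. Tensor Operations and Reshaping

• Tensors of different types can conflict: older PyTorch versions raised an error for arithmetic between a FloatTensor and a DoubleTensor, and even current versions, which promote types for elementwise arithmetic, require matching dtypes for operations such as matrix multiplication.

• view(): changes the shape of a tensor (similar to reshape)

• stack, cat: combine tensors

• t, transpose: swap dimensions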

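# Operations
temp = torch.tensor([[1, 2], [3, 4]])
print(temp.shape)
print()
print(temp.view(4, 1))  # reshape into a 4x1 matrix
print()
print(temp.view(-1))  # reshape the 2x2 matrix into a 1-D vector
print()
print(temp.view(1, -1))  # reshape into a 1x4 matrix (-1 lets PyTorch infer that size)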
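The combining and transposing functions listed above, plus the dtype caveat, in a short sketch. The tensors are arbitrary examples; the exact `torch.mm` error message differs between versions, so the code only relies on it raising `RuntimeError`.

```python
import torch

a = torch.tensor([[1, 2], [3, 4]])
b = torch.tensor([[5, 6], [7, 8]])

cat_result = torch.cat([a, b], dim=0)      # joins along an existing axis
stack_result = torch.stack([a, b], dim=0)  # joins along a new axis
print(cat_result.shape)    # torch.Size([4, 2])
print(stack_result.shape)  # torch.Size([2, 2, 2])

print(a.t())                     # transpose of a 2-D tensor
print(torch.transpose(a, 0, 1))  # same result; works for any pair of dims

f32 = torch.ones(2, 2, dtype=torch.float32)
f64 = torch.ones(2, 2, dtype=torch.float64)
print((f32 + f64).dtype)  # torch.float64: elementwise arithmetic promotes the type
try:
    torch.mm(f32, f64)    # matrix multiplication needs matching dtypes
except RuntimeError as err:
    print('RuntimeError:', err)
```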

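🔹 Preparing Data

∘ Ways to load data: use pandas, or use the datasets provided by PyTorch

∘ Image data: read the data from distributed files, preprocess it, and process it in batches.

∘ Text data: apply embedding, then split sequences of different lengths into batches for processing.

∘ Custom dataset: instead of loading all the data at once, load it in small pieces as needed

- torch.utils.data.Dataset → subclass it and override its methods (__len__, __getitem__) to create a custom dataset.

- torch.utils.data.DataLoader → holds the whole training dataset and hands it out one batch at a time during training. It does not pre-split the data; internally it uses the indices produced by an iterator to return batch-sized chunks.

- Makes mini-batch training, data shuffling, and parallel loading easy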

import pandas as pd
import torch
from torch.utils.data import Dataset
from torch.utils.data import DataLoader

class CustomDataset(Dataset):
    # Load the dataset from the csv_file parameter
    def __init__(self, csv_file):
        self.label = pd.read_csv(csv_file)

    # Return the size of the whole dataset
    def __len__(self):
        return len(self.label)

    # Fetch the idx-th sample from the full x and y data
    def __getitem__(self, idx):
        sample = torch.tensor(self.label.iloc[idx, 0:3].values).int()  # first three columns as features
        label = torch.tensor(self.label.iloc[idx, 3]).int()  # fourth column as the label
        return sample, label

tensor_dataset = CustomDataset('../covtype.csv')

# Pass the dataset to torch.utils.data.DataLoader
dataset = DataLoader(tensor_dataset, batch_size=4, shuffle=True)
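Since `../covtype.csv` may not be at hand, here is a minimal sketch of the same Dataset/DataLoader pattern with a hypothetical in-memory `ToyDataset` (synthetic features and labels). It shows that the DataLoader returns batch-sized chunks, with the last batch holding whatever samples remain:

```python
import torch
from torch.utils.data import Dataset, DataLoader

class ToyDataset(Dataset):
    """Hypothetical in-memory stand-in for the CSV-backed CustomDataset."""

    def __init__(self, n_samples=10):
        self.x = torch.arange(n_samples * 3, dtype=torch.float32).reshape(n_samples, 3)
        self.y = torch.arange(n_samples) % 2  # fake binary labels

    def __len__(self):
        return len(self.x)

    def __getitem__(self, idx):
        return self.x[idx], self.y[idx]

loader = DataLoader(ToyDataset(10), batch_size=4, shuffle=True)
batch_sizes = []
for xb, yb in loader:
    batch_sizes.append(len(xb))
    print(xb.shape, yb.shape)
print(batch_sizes)  # [4, 4, 2]: the last batch holds the remaining samples
```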

∘ Loading MNIST

- torchvision: a companion package that collects datasets for PyTorch, providing well-known datasets including ImageNet and MNIST.

import torchvision.transforms as transforms

mnist_transform = transforms.Compose([
    transforms.ToTensor(),  # convert a PIL image to a tensor with values in [0, 1]
    transforms.Normalize((0.5,), (1.0,))  # output = (input - 0.5) / 1.0, so values shift to [-0.5, 0.5]
])

from torchvision.datasets import MNIST

download_root = '/content/sample_data/mnist_dataset'

train_dataset = MNIST(download_root, transform=mnist_transform, train=True, download=True)
# MNIST ships only train and test splits, so valid_dataset and test_dataset below are the same data
valid_dataset = MNIST(download_root, transform=mnist_transform, train=False, download=True)
test_dataset = MNIST(download_root, transform=mnist_transform, train=False, download=True)
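`transforms.Normalize(mean, std)` computes `(input - mean) / std` for each channel, so the settings above shift the `[0, 1]` output of `ToTensor()` to `[-0.5, 0.5]` rather than producing a mean of 0.5. The sketch below reproduces that arithmetic with plain tensor operations on made-up pixel values, so it runs without torchvision:

```python
import torch

mean, std = 0.5, 1.0
pixels = torch.tensor([0.0, 0.25, 0.5, 1.0])  # ToTensor() scales pixels into [0, 1]
normalized = (pixels - mean) / std            # the formula Normalize applies per channel
print(normalized)                             # values -0.5, -0.25, 0.0, 0.5
```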

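🔹 Defining the Model

PyTorch implementations make heavy use of classes.

∘ Difference between a model and a module

- Layer: a single layer that makes up a module, such as a convolutional layer or a linear layer.

- Module: a group of one or more layers; modules can themselves be combined into a new module.

- Model: the final network you want; a single module can also be a model.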

A. Defining a simple neural network

- Used to build very simple models without subclassing nn.Module.

- Easy and straightforward to implement.

# Define a simple neural network

import torch.nn as nn

model = nn.Linear(in_features = 1, out_features = 1, bias = True)
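A layer like this can be called directly on a batch. A minimal sketch (arbitrary inputs, fixed seed) confirming that `nn.Linear` computes `x @ weight.T + bias`, i.e., y = wx + b for one feature:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
model = nn.Linear(in_features=1, out_features=1, bias=True)

x = torch.tensor([[2.0], [3.0]])   # batch of 2 samples, 1 feature each
y = model(x)                       # calling the layer runs its forward pass
manual = x @ model.weight.T + model.bias
print(model.weight.shape, model.bias.shape)  # torch.Size([1, 1]) torch.Size([1])
print(torch.allclose(y, manual))             # True
```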

B. Defining a model by subclassing nn.Module()

- __init__(): defines the modules the model uses (nn.Linear, nn.Conv2d), activation functions, etc.

- forward(): defines the computation the model performs

import torch
import torch.nn as nn

class MLP(nn.Module):  # inherit from nn.Module
    def __init__(self, inputs):
        super(MLP, self).__init__()
        self.layer = nn.Linear(inputs, 1)  # define the layer
        self.activation = nn.Sigmoid()  # define the activation function

    def forward(self, X):
        X = self.layer(X)
        X = self.activation(X)
        return X
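A short usage sketch for the class above (redefined so the block runs on its own; the input size of 3 and the random batch are arbitrary). Note that the model is called as `model(X)` rather than `model.forward(X)`: `nn.Module.__call__` runs `forward()` for you.

```python
import torch
import torch.nn as nn

class MLP(nn.Module):
    def __init__(self, inputs):
        super().__init__()
        self.layer = nn.Linear(inputs, 1)
        self.activation = nn.Sigmoid()

    def forward(self, X):
        return self.activation(self.layer(X))

model = MLP(inputs=3)
X = torch.randn(4, 3)  # 4 samples, 3 features
out = model(X)         # invokes forward() through nn.Module.__call__
print(out.shape)       # torch.Size([4, 1]); sigmoid keeps values in (0, 1)
```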

• Example: creating a linear regression model

import torch.nn as nn

class LinearRegressionModel(nn.Module):
    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(1, 1)  # one input feature, one output

    def forward(self, x):
        return self.linear(x)

C. Defining a network with nn.Sequential

- nn.Sequential: lets you define the network's layers in __init__() and write the computation in forward() more readably.

- You can define several layers, and a Sequential object runs the modules it contains in order.

- nn.Sequential pays off more as the model's layers become more complex.

import torch.nn as nn

class MLP(nn.Module):
    def __init__(self):
        super(MLP, self).__init__()
        self.layer1 = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=64, kernel_size=5),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(2)
        )
        self.layer2 = nn.Sequential(
            nn.Conv2d(in_channels=64, out_channels=30, kernel_size=5),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(2)
        )
        # 30*5*5 fits e.g. 3x32x32 inputs: 32 -> conv 28 -> pool 14 -> conv 10 -> pool 5
        self.layer3 = nn.Sequential(
            nn.Linear(in_features=30*5*5, out_features=10, bias=True),
            nn.ReLU(inplace=True)
        )

    def forward(self, x):
        x = self.layer1(x)
        x = self.layer2(x)
        x = x.view(x.shape[0], -1)  # flatten each sample into a 1-D vector
        x = self.layer3(x)
        return x

model = MLP()  # create an instance of the model

print("printing children \n ---------------------")
print(list(model.children()))
print("\n\nprinting Modules\n-------------------------")
print(list(model.modules()))

✔ model.modules(): returns every node in the model's network (the model itself and all submodules, recursively)

✔ model.children(): returns only the child nodes one level down
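The difference is easiest to see by counting. In this small nested model (an arbitrary example), `children()` stops at the first level, while `modules()` also includes the model itself and everything nested inside:

```python
import torch.nn as nn

model = nn.Sequential(
    nn.Sequential(nn.Linear(4, 8), nn.ReLU()),  # nested block
    nn.Linear(8, 1),
)
print(len(list(model.children())))  # 2: the inner Sequential and the last Linear
print(len(list(model.modules())))   # 5: model itself, inner Sequential, Linear, ReLU, Linear
```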

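D. Defining a network with a function

∘ This works the same as using Sequential, but declaring it as a function has the advantage that layers stored in variables can be reused. The downside is that the model can become complicated; for complex models, subclassing nn.Module is more convenient than using a function.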

import torch.nn as nn

def MLP(in_features=1, hidden_features=20, out_features=1):
    hidden = nn.Linear(in_features=in_features, out_features=hidden_features, bias=True)
    activation = nn.ReLU()
    output = nn.Linear(in_features=hidden_features, out_features=out_features, bias=True)
    net = nn.Sequential(hidden, activation, output)
    return net
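One reading of "reusing layers stored in variables" is weight sharing: the same layer object placed twice in a network uses a single set of parameters. A minimal sketch with a hypothetical helper `make_shared_mlp`:

```python
import torch
import torch.nn as nn

def make_shared_mlp(features=8):
    # Hypothetical helper: one Linear object stored in a variable and used twice
    shared = nn.Linear(features, features)
    return nn.Sequential(shared, nn.ReLU(), shared)

net = make_shared_mlp()
n_params = len(list(net.parameters()))
print(n_params)                      # 2: the shared weight and bias are counted once
print(net(torch.randn(3, 8)).shape)  # torch.Size([3, 8])
```

Because both positions point to the same module, a gradient step updates both uses at once.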

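🔹 Defining Model Parameters

💨 Loss function

∘ Measures the error between the output and the true value during training, to gauge how accurate the model is.

∘ BCELoss: used for binary classification

∘ CrossEntropyLoss: used for multi-class classification

∘ MSELoss: used for regression models

👉 The goal of training is to find the weights and biases that minimize the value of the loss function 👈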
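The three losses expect differently shaped inputs, which is a common source of errors. A sketch with made-up numbers: `BCELoss` takes probabilities (e.g., after a sigmoid), while `CrossEntropyLoss` takes raw logits and integer class indices and applies log-softmax internally.

```python
import torch
import torch.nn as nn

# BCELoss: probabilities and float 0/1 targets
bce = nn.BCELoss()(torch.tensor([0.9, 0.2]), torch.tensor([1.0, 0.0]))

# CrossEntropyLoss: raw logits of shape (N, C) and class indices of shape (N,)
logits = torch.tensor([[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]])
ce = nn.CrossEntropyLoss()(logits, torch.tensor([0, 2]))

# MSELoss: mean of squared differences, here (0.5**2 + 0.5**2) / 2 = 0.25
mse = nn.MSELoss()(torch.tensor([2.5, 0.0]), torch.tensor([3.0, -0.5]))

print(bce.item(), ce.item(), mse.item())
```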

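💨 Optimizer

∘ Determines how the model is updated, based on the gradients of the loss function.

∘ The step() method updates the parameters passed to it.

∘ Different settings (e.g., learning rate) can be applied to different parameters.

∘ torch.optim.Optimizer(params, defaults): the base class for optimizers

∘ zero_grad(): resets the parameters' gradients (to zero; since PyTorch 2.0 it sets them to None by default)

∘ torch.optim.lr_scheduler: adjusts the learning rate according to the epoch

∘ Types

- optim.Adadelta, optim.Adagrad, optim.Adam, optim.SparseAdam, optim.Adamax

- optim.ASGD, optim.LBFGS

- optim.RMSprop, optim.Rprop, optim.SGD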
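Applying different learning rates to different parameters is done with parameter groups: pass a list of dicts instead of a single iterable. A sketch with an arbitrary two-layer model:

```python
import torch
import torch.nn as nn

model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 1))
optimizer = torch.optim.SGD(
    [
        {'params': model[0].parameters()},               # uses the default lr below
        {'params': model[2].parameters(), 'lr': 0.001},  # overrides lr for this group
    ],
    lr=0.01,
)
group_lrs = [group['lr'] for group in optimizer.param_groups]
print(group_lrs)  # [0.01, 0.001]
```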

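💨 Learning rate scheduler

∘ Decays the learning rate each time a specified number of epochs has passed.

∘ This lets training move quickly at first and then, near the global minimum, lowers the learning rate so training can settle into the optimum.

- optim.lr_scheduler.LambdaLR: sets the learning rate to the initial learning rate multiplied by the value returned by a lambda function

- optim.lr_scheduler.StepLR: multiplies the learning rate by gamma every step_size epochs

- optim.lr_scheduler.MultiStepLR: like StepLR, but multiplies by gamma only at specified epochs (milestones) instead of at a fixed interval

- optim.lr_scheduler.ExponentialLR: multiplies the previous learning rate by gamma every epoch

- optim.lr_scheduler.CosineAnnealingLR: changes the learning rate along a cosine curve, so it can both decrease and increase

- optim.lr_scheduler.ReduceLROnPlateau: changes the learning rate dynamically depending on whether training is improving (for example, lowering it when a monitored metric stops improving)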
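A quick way to see what a scheduler does is to record the learning rate at each epoch. This sketch uses StepLR with arbitrary settings (`step_size=2`, `gamma=0.5`) on a placeholder model; the real forward/backward pass is omitted:

```python
import torch
import torch.nn as nn

model = nn.Linear(1, 1)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=2, gamma=0.5)

lrs = []
for epoch in range(6):
    lrs.append(optimizer.param_groups[0]['lr'])
    # forward pass and loss.backward() on each batch would go here
    optimizer.step()   # parameters without gradients are simply skipped
    scheduler.step()   # once per epoch, after optimizer.step()
print(lrs)  # [0.1, 0.1, 0.05, 0.05, 0.025, 0.025]
```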

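💨 Metrics

∘ Used to monitor the training and test phases.

💨 Minimum

∘ Global minimum: the point where the error is smallest; this is what we ultimately want to find (the optimum).

∘ Local minimum: a dip encountered on the way to the global minimum; if the optimizer stops learning at a local minimum, it cannot reach the lowest error.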

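# Example code for defining model parameters
import torch
from torch.utils.data import DataLoader, TensorDataset

model = torch.nn.Linear(1, 1)  # placeholder model for illustration
x_data = torch.randn(32, 1)
dataloader = DataLoader(TensorDataset(x_data, 2 * x_data + 1), batch_size=8)  # toy data

criterion = torch.nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01, momentum=0.9)
scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer=optimizer,
                                              lr_lambda=lambda epoch: 0.95 ** epoch)

for epoch in range(1, 100 + 1):  # loop over the data for the given number of epochs
    for x, y in dataloader:  # fetch one batch at a time and train on it
        optimizer.zero_grad()
        criterion(model(x), y).backward()
        optimizer.step()
    scheduler.step()  # update the learning rate once per epoch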

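🔹 Training the Model

💨 Training

∘ Training a model means finding suitable values of w and b in the function y = wx + b.

∘ Start with arbitrary values for w and b, then keep adjusting the parameters as the error decreases, until it reaches the global minimum.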

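| Deep learning training step | PyTorch training step |
|---|---|
| Define the model, loss function, and optimizer | • Define the model, loss function, and optimizer • optimizer.zero_grad(): reset the gradients before the forward pass |
| Forward pass (compute output from input) | output = model(input): compute the output |
| Compute the difference (error) between the output and the label with the loss function | loss = loss_fn(output, target): compute the error |
| Backpropagation (compute gradients) | loss.backward(): backpropagation |
| Update the parameters | optimizer.step(): update the parameters using the gradients |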

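∘ loss.backward(): computes gradients in PyTorch. Newly computed gradients are added to (accumulated onto) the existing ones; this accumulation is needed when implementing models such as RNNs but not in other deep learning architectures. So when accumulation is not needed, call optimizer.zero_grad() before feeding the input to the model, so that the derivatives (gradients are obtained by differentiation) don't pile up.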
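Accumulation is easy to observe on a single scalar parameter. In this sketch the loss `2 * w` has derivative 2, so three `backward()` calls without resetting leave 6 in `w.grad`:

```python
import torch

w = torch.tensor(1.0, requires_grad=True)

for _ in range(3):
    loss = 2 * w      # d(loss)/dw = 2
    loss.backward()   # each call adds 2 to w.grad
accumulated = w.grad.item()
print(accumulated)    # 6.0

w.grad.zero_()        # reset; in a training loop this is optimizer.zero_grad()'s job
loss = 2 * w
loss.backward()
print(w.grad.item())  # 2.0
```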

∘ Model training code

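import torch

x_train = torch.randn(100, 1)  # toy data for illustration: y = 3x + 2
y_train = 3 * x_train + 2
model, criterion = torch.nn.Linear(1, 1), torch.nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

for epoch in range(100):
    yhat = model(x_train)
    loss = criterion(yhat, y_train)
    optimizer.zero_grad()  # reset gradients so they don't accumulate across iterations
    loss.backward()  # backpropagation
    optimizer.step()  # update the parameters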

🔹 Evaluating the Model

💨 Evaluation

∘ Evaluate the model using the given test dataset

∘ Use the torchmetrics library

💨 Evaluating a model with a function

∘ torchmetrics.functional.accuracy( )

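# Install the package: pip install torchmetrics

# Evaluating a model: using a function
import torch
import torchmetrics

preds = torch.randn(10, 5).softmax(dim=-1)  # predictions: softmax() probabilities over 5 classes
target = torch.randint(5, (10,))  # ground truth

# ⭐ evaluate the model; recent torchmetrics versions require task and num_classes
acc = torchmetrics.functional.accuracy(preds, target, task='multiclass', num_classes=5)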

💨 Evaluating a model with a module

∘ torchmetrics.Accuracy()

# Evaluating a model: using a module
metric = torchmetrics.Accuracy(task='multiclass', num_classes=5)  # initialize the metric

n_batches = 10
for i in range(n_batches):
    preds = torch.randn(10, 5).softmax(dim=-1)
    target = torch.randint(5, (10,))
    acc = metric(preds, target)  # ⭐ accuracy on the current batch (also accumulated internally)
    print(f'Accuracy on batch {i}: {acc}')

############################

acc = metric.compute()
print(f'Accuracy over all data: {acc}')  # accuracy accumulated over all batches

∘ You can also use scikit-learn's metrics module → confusion_matrix, accuracy_score, classification_report
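Without any metrics library, plain (micro) accuracy is just the fraction of rows whose argmax matches the label. A sketch with made-up probabilities; note that metric libraries may default to other averaging schemes, so their numbers can differ from this one:

```python
import torch

preds = torch.tensor([[0.1, 0.7, 0.2],
                      [0.8, 0.1, 0.1],
                      [0.3, 0.3, 0.4],
                      [0.2, 0.5, 0.3]])  # made-up class probabilities
target = torch.tensor([1, 0, 1, 1])

predicted = preds.argmax(dim=1)          # tensor([1, 0, 2, 1])
accuracy = (predicted == target).float().mean().item()
print(accuracy)                          # 0.75 (3 of 4 correct)
```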

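🔹 Monitoring the Training Process

💨 Monitoring

∘ Observing how each parameter value changes as training progresses

∘ TensorBoard: makes it easy to visualize how the various values used in training change, and is also used to track and evaluate performance

1. Set up TensorBoard

2. Write to TensorBoard

3. Use TensorBoard to inspect the model structure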

pip install tensorboard

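import torch
from torch.utils.data import DataLoader, TensorDataset
from torch.utils.tensorboard import SummaryWriter  #⭐

# Toy setup for illustration; replace with your own model and data
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
model = torch.nn.Linear(1, 1).to(device)
criterion = torch.nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
x_data = torch.randn(64, 1)
dataloader = DataLoader(TensorDataset(x_data, 2 * x_data), batch_size=16)
num_epochs = 10

writer = SummaryWriter('../chap02/tensorboard')  # 📌 directory where the monitoring values are saved

for epoch in range(num_epochs):
    model.train()  # switch to training mode (enables dropout)
    batch_loss = 0.0
    for i, (x, y) in enumerate(dataloader):
        x, y = x.to(device).float(), y.to(device).float()
        outputs = model(x)
        loss = criterion(outputs, y)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        batch_loss += loss.item()
    writer.add_scalar('Loss', batch_loss / len(dataloader), epoch)  # 📌 record the scalar (mean loss) for this epoch

writer.close()  # call close() once the SummaryWriter is no longer needed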

tensorboard --logdir=../chap02/tensorboard --port=6006

# Running the command above starts TensorBoard; entering http://localhost:6006 in a web browser opens the TensorBoard page.

💨 model.train()

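∘ Used with the training dataset; tells the model that training is about to run

∘ Dropout is enabled (and batch normalization uses per-batch statistics)

∘ Calling model.train() and model.eval() at the right points is needed for correct results, because layers such as dropout and batch normalization behave differently in the two modes.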
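The effect of the two modes can be seen on a single `nn.Dropout` layer (p=0.5, seeded, arbitrary input of ones). In training mode, surviving elements are scaled by 1 / (1 - p) = 2 and the rest are zeroed; in eval mode the layer passes its input through unchanged:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
dropout = nn.Dropout(p=0.5)
x = torch.ones(8)

dropout.train()         # the mode model.train() sets on every submodule
train_out = dropout(x)  # each element becomes 0 or 2, at random
dropout.eval()          # the mode model.eval() sets on every submodule
eval_out = dropout(x)   # dropout is a no-op in eval mode
print(train_out)
print(eval_out)
```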

💨 model.eval()

∘ Means all nodes are used when evaluating the model (dropout is disabled)

∘ Used for the validation and test sets.

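# model.eval()
import torch
import torch.nn.functional as F
from torch.utils.data import DataLoader, TensorDataset

# Toy classifier and validation data for illustration
model = torch.nn.Sequential(torch.nn.Linear(4, 16), torch.nn.Dropout(0.5), torch.nn.Linear(16, 3))
valid_dataloader = DataLoader(TensorDataset(torch.randn(20, 4), torch.randint(0, 3, (20, 1))), batch_size=5)

model.eval()  # switch to evaluation mode (dropout disabled) ⭐
with torch.no_grad():
    valid_loss = 0
    y_hat = []
    for x, y in valid_dataloader:
        outputs = model(x)
        loss = F.cross_entropy(outputs, y.long().squeeze(1))  # labels (N, 1) -> (N,)
        valid_loss += float(loss)
        y_hat += [outputs]
    valid_loss = valid_loss / len(valid_dataloader)  # average loss per batch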

→ Validation doesn't need backpropagation, so with torch.no_grad() is used to stop recording the operations needed for gradients (saving memory and computation time).
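The effect of `torch.no_grad()` shows up in the `requires_grad` flag of the results. A minimal sketch with a random parameter tensor:

```python
import torch

w = torch.randn(3, requires_grad=True)

y = (w * 2).sum()
print(y.requires_grad)  # True: the operation was recorded for backpropagation

with torch.no_grad():
    z = (w * 2).sum()
print(z.requires_grad)  # False: nothing was recorded inside no_grad()
```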

✔ Python's with statement

∘ Mainly used to acquire, use, and release a resource.

∘ A file opened with Python's open() function must be closed with close(); the with statement calls close() automatically, so you don't have to write it.
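A minimal sketch of the file case, using a throwaway file in a temporary directory: after the `with` block ends, the file object reports itself as closed without an explicit `close()` call.

```python
import os
import tempfile

path = os.path.join(tempfile.mkdtemp(), 'example.txt')  # throwaway file for the demo

with open(path, 'w') as f:
    f.write('hello')
print(f.closed)  # True: close() was called automatically when the block ended

with open(path) as f:
    text = f.read()
print(text)      # hello
```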

🔹 Trying Out the Code

💨 Classification problem

∘ car_evaluation dataset: the output is a categorical value describing the car's condition, with 4 categories.

(1) Loading the data

import torch
import torch.nn as nn
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns
%matplotlib inline

# Load the data
dataset = pd.read_csv('/content/car_evaluation.csv')
dataset.head()

→ The values are words, so an embedding step that turns words into vectors is needed.

(2) Visualizing the data distribution

# Visualize the target distribution
fig_size = plt.rcParams["figure.figsize"]
fig_size[0] = 8
fig_size[1] = 6
plt.rcParams["figure.figsize"] = fig_size

dataset.output.value_counts().plot(kind='pie', autopct='%0.05f%%',
                                   colors=['lightblue', 'lightgreen', 'orange', 'pink'],
                                   explode=(0.05, 0.05, 0.05, 0.05))

(3) Data preprocessing

Cast categorical variables with astype('category') → NumPy array → Tensor

# Preprocessing: convert to the category type
categorical_columns = ['price', 'maint', 'doors', 'persons', 'lug_capacity', 'safety']

for category in categorical_columns:
    dataset[category] = dataset[category].astype('category')  # 📌 cast to a categorical type

# 📌 Convert categorical data (words) to numbers (NumPy arrays): cat.codes
price = dataset['price'].cat.codes.values
maint = dataset['maint'].cat.codes.values
doors = dataset['doors'].cat.codes.values
persons = dataset['persons'].cat.codes.values
lug_capacity = dataset['lug_capacity'].cat.codes.values
safety = dataset['safety'].cat.codes.values

print(price)
print(price.shape)

categorical_data = np.stack([price, maint, doors, persons, lug_capacity, safety], axis=1)
# six arrays of shape (1728,) stacked along axis=1 --> (1728, 6)
categorical_data[:10]  # show only the first 10 rows

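✔ Difference between np.concatenate and np.stack

: concatenate joins arrays along an existing axis, whereas stack treats the given axis as an entirely new axis, so the arrays must have exactly the same shape or an error is raised.

※ stack reference: https://everyday-image-processing.tistory.com/87

→ The result gains one dimension, inserted at the position given by axis.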
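The difference shows up directly in the result shapes. A sketch with two arbitrary 1-D arrays:

```python
import numpy as np

a = np.array([1, 2, 3])
b = np.array([4, 5, 6])

joined = np.concatenate([a, b])         # joined along the existing axis 0
stacked0 = np.stack([a, b], axis=0)     # new axis inserted at position 0
stacked1 = np.stack([a, b], axis=1)     # new axis inserted at position 1
print(joined.shape, stacked0.shape, stacked1.shape)  # (6,) (2, 3) (3, 2)
```

The `categorical_data` step above is the `axis=1` case: six arrays of shape (1728,) become one array of shape (1728, 6).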

∘ Converting the array to a tensor: torch.tensor()

# Convert the array to a tensor
categorical_data = torch.tensor(categorical_data, dtype=torch.int64)
categorical_data[:10]

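∘ Convert the target variable to a tensor as well. get_dummies turns it into a one-hot NumPy array of shape (1728, 4); flattening that directly would give 6912 values that no longer line up with the 1728 rows, so take argmax along axis 1 to get one class index per row, which is also the label format nn.CrossEntropyLoss expects.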

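# Convert the target column to a tensor as well
outputs = pd.get_dummies(dataset.output)  # one-hot DataFrame, shape (1728, 4)
outputs = outputs.values.argmax(axis=1)  # class index for each row, shape (1728,)
outputs = torch.tensor(outputs, dtype=torch.int64)  # 1-D tensor of labels

print(categorical_data.shape)
print(outputs.shape)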

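✔ ravel(), reshape(), and flatten() are used to change the shape of an array or tensor.

(4) Word embedding

∘ A representation in which similar words get similar encodings

∘ Higher-dimensional embeddings can capture finer relationships between words.

∘ Turns the NumPy array of single integers into N-dimensional vectors.

∘ There is no strict rule for the embedding size (vector dimension), but a common choice is half the number of unique values (categories) in the column.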

# Define the word-embedding dimensions
## number of categories (unique values) in each column
categorical_column_sizes = [len(dataset[column].cat.categories) for column in categorical_columns]
## embedding size = (number of unique values, embedding dimension), capped at 50
categorical_embedding_sizes = [(col_size, min(50, (col_size + 1) // 2)) for col_size in categorical_column_sizes]
print(categorical_embedding_sizes)
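Each (unique values, dimension) pair becomes one `nn.Embedding` lookup table. A sketch for a hypothetical column with 4 categories, embedded into (4 + 1) // 2 = 2 dimensions per the rule above; identical codes always map to the same (learnable) vector:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
embedding = nn.Embedding(num_embeddings=4, embedding_dim=2)
codes = torch.tensor([0, 3, 1, 3])  # cat.codes values for four rows
vectors = embedding(codes)
print(vectors.shape)                        # torch.Size([4, 2])
print(torch.equal(vectors[1], vectors[3]))  # True: the same code maps to the same vector
```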

(5) Splitting the dataset

# Split the dataset
total_records = 1728
test_records = int(total_records * 0.2)  # use 20% of the data for testing

categorical_train_data = categorical_data[:total_records - test_records]
categorical_test_data = categorical_data[total_records - test_records:total_records]
train_outputs = outputs[:total_records - test_records]
test_outputs = outputs[total_records - test_records:total_records]

(6) Creating the model network

# Create the model network
class Model(nn.Module):  # 1️⃣
    def __init__(self, embedding_size, output_size, layers, p=0.4):  # 2️⃣
        super().__init__()  # 3️⃣
        self.all_embeddings = nn.ModuleList(
            [nn.Embedding(ni, nf) for ni, nf in embedding_size])
        self.embedding_dropout = nn.Dropout(p)

        all_layers = []
        num_categorical_cols = sum((nf for ni, nf in embedding_size))
        input_size = num_categorical_cols  # input size = total width of all embedding vectors concatenated

        for i in layers:  # 4️⃣
            all_layers.append(nn.Linear(input_size, i))
            all_layers.append(nn.ReLU(inplace=True))
            all_layers.append(nn.BatchNorm1d(i))
            all_layers.append(nn.Dropout(p))
            input_size = i

        all_layers.append(nn.Linear(layers[-1], output_size))
        # pass the list of all layers to Sequential so they run in order
        self.layers = nn.Sequential(*all_layers)

    def forward(self, x_categorical):  # 5️⃣
        embeddings = []
        for i, e in enumerate(self.all_embeddings):
            embeddings.append(e(x_categorical[:, i]))  # embed column i
        x = torch.cat(embeddings, 1)  # concatenate the embeddings along the feature axis
        x = self.embedding_dropout(x)
        x = self.layers(x)
        return x

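① A model implemented as a class inherits from nn.Module.

② __init__: initializes the parameters and network the model uses; it is called automatically when an object is created.

- self: the first parameter, referring to the object being created

- embedding_size: embedding sizes of the categorical columns

- output_size: size of the output layer

- layers: list of hidden-layer sizes (number of neurons in each)

- p: dropout rate (default 0.4)

③ super().__init__(): calls the parent class's __init__(); in Python 3 the class and self don't need to be passed to super().

④ A for loop adds each layer to the all_layers list to build the model's network.

⑤ forward(): takes the training data as input and runs the computation.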

(7) Creating the model object

∘ Pass the parameter values when creating the object.

# Create the model object
model = Model(categorical_embedding_sizes, 4, [200, 100, 50], p=0.4)
# (embedding sizes of the categorical columns, output size, neurons in each hidden layer, dropout)
# [200, 100, 50]: feel free to experiment with other sizes

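(8) Defining the loss function and optimizer, and allocating resources

∘ Since this is a classification problem, cross-entropy is used as the loss function and Adam as the optimizer.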

loss_function = nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(model.parameters(), lr = 0.001)

∘ PyTorch is a deep learning framework optimized for GPUs, so allocate a GPU if one is available.

# Use the GPU if available, otherwise the CPU
if torch.cuda.is_available():
    device = torch.device('cuda')
else:
    device = torch.device('cpu')

(9) Training the model

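# Train the model
epochs = 500
aggregated_losses = []
model = model.to(device)  # move the model's parameters to the chosen device
categorical_train_data = categorical_train_data.to(device)
train_outputs = train_outputs.to(device=device, dtype=torch.int64)

for i in range(epochs):  # each iteration, the loss function computes the error
    i += 1
    y_pred = model(categorical_train_data)
    single_loss = loss_function(y_pred, train_outputs)
    aggregated_losses.append(single_loss.item())  # record the loss for each iteration

    if i % 25 == 1:
        print(f'epoch: {i:3} loss: {single_loss.item():10.8f}')  # print the loss every 25 epochs

    optimizer.zero_grad()
    single_loss.backward()  # compute gradients (backpropagation)
    optimizer.step()  # update the weights

print(f'epoch: {i:3} loss: {single_loss.item():10.10f}')  # loss at the final epoch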

(10) Predicting on the test dataset

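# Predict
model.eval()  # evaluation mode: disables dropout, batch norm uses running statistics
categorical_test_data = categorical_test_data.to(device)
test_outputs = test_outputs.to(device=device, dtype=torch.int64)

with torch.no_grad():
    y_val = model(categorical_test_data)
    loss = loss_function(y_val, test_outputs)
print(f'Loss : {loss:.8f}')

print(y_val[:5])  # print the first 5 rows
# Because the network's output_size is 4, each row shows four neuron values for one sample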

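→ The original target has 4 categories, so the network's output_size was set to 4; in each row, the index of the largest value is the predicted category.

※ argmax

- axis=1: compares elements across each row and returns the index (position) of the maximum

- axis=0: compares elements down each column and returns the index (position) of the maximum

- If axis is not given, all elements are treated as one flattened 1-D array and the index of the maximum is returned.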
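The three argmax cases on a small made-up score matrix (2 samples x 4 classes, like the rows of `y_val`):

```python
import numpy as np

scores = np.array([[0.1, 2.0, -1.0, 0.3],
                   [1.5, 0.2,  0.1, 0.0]])

per_row = np.argmax(scores, axis=1)  # [1 0]: predicted class for each sample
per_col = np.argmax(scores, axis=0)  # [1 0 1 0]: row holding each column's max
flat = np.argmax(scores)             # 1: position in the flattened array
print(per_row, per_col, flat)
```

For turning model outputs into class predictions, `axis=1` is the case that matters.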

(11) Evaluating model performance

import warnings
warnings.filterwarnings('ignore')

import numpy as np
from sklearn.metrics import classification_report, confusion_matrix, accuracy_score

y_val = np.argmax(y_val.cpu().numpy(), axis=1)  # logits (N, 4) -> predicted class index per row
test_outputs = test_outputs.cpu().numpy()

print(confusion_matrix(test_outputs, y_val))
print(classification_report(test_outputs, y_val))
print(accuracy_score(test_outputs, y_val))

→ Try changing settings (train/test split, number and size of hidden layers, etc.) to look for better performance.
