💡 Topic: Subword Models

📌 Key points

- Task : Character level models

- BPE, WordPiece model, SentencePiece model, hybrid models

1️⃣ Linguistic Knowledge

1. Overview of linguistic concepts

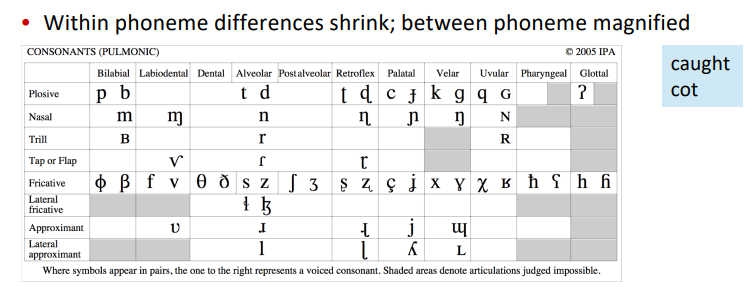

✔ Phonology

◽ The study of the sound system of a language → the human mouth can produce an unlimited range of sounds, but in language that continuous range is perceived as a set of discrete categories.

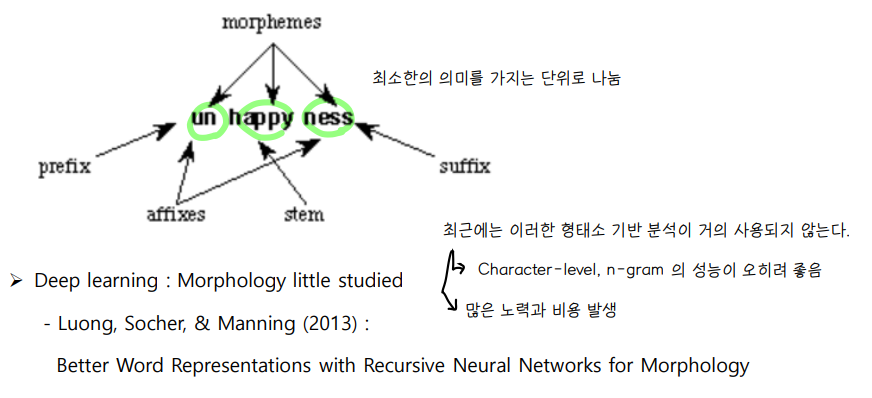

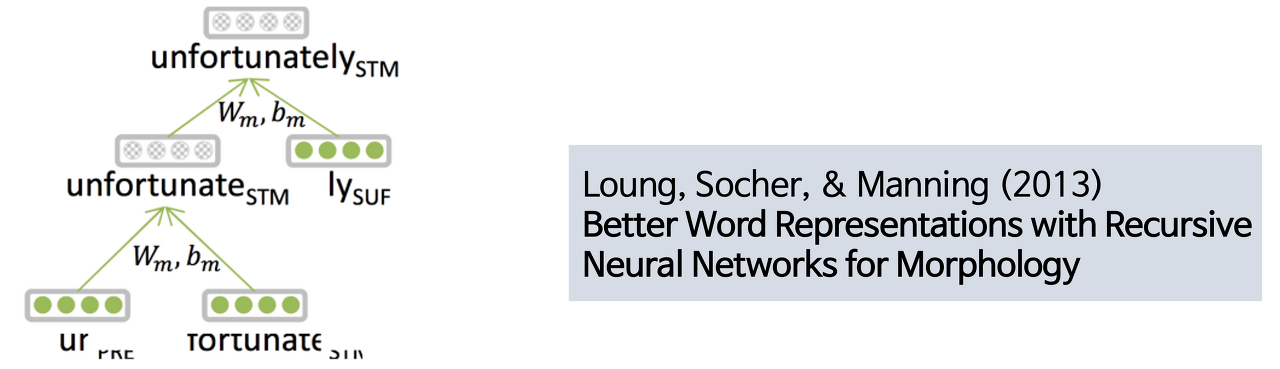

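✔ Morphology

◽ Morphemes: the smallest units that carry meaning

◽ The branch of grammar that studies how word forms change → small units combine to complete the meaning of a word

👉 Morpheme-level units are rarely used in deep learning. Splitting words into morphemes is hard in itself, and even without it, characters and character n-grams capture the important meaning-bearing elements well enough.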

2. Words in writing systems

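✔ Writing systems vary in how they represent words

Human writing systems differ from language to language and are not unified.

◽ No segmentation: some languages, such as Chinese, are written without spaces between words. (Arabic does use spaces, but attaches clitics such as pronouns and conjunctions to the neighboring word.)

◽ Compounds: some compounds are written with spaces yet act as a single noun, while others are written without spaces and must still be recognized as one word.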

◽ Written form

3. Models below the word level

✔ Need to handle large, open vocabulary

A purely word-based model has to cover far too many words; the vocabulary is effectively unbounded, which makes this approach inefficient.

◽ Rich morphology: languages with rich morphology, such as Czech

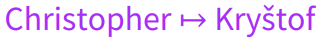

◽ Transliteration: rendering foreign words and names in another script

◽ Informal spelling: abbreviations, non-standard spellings, and newly coined words keep appearing

2️⃣ Pure Character-level models

1. Why work at the character level

✔ (Review) Problems with word-based models

① The characteristics of words differ from language to language.

② Need to handle large, open vocabulary

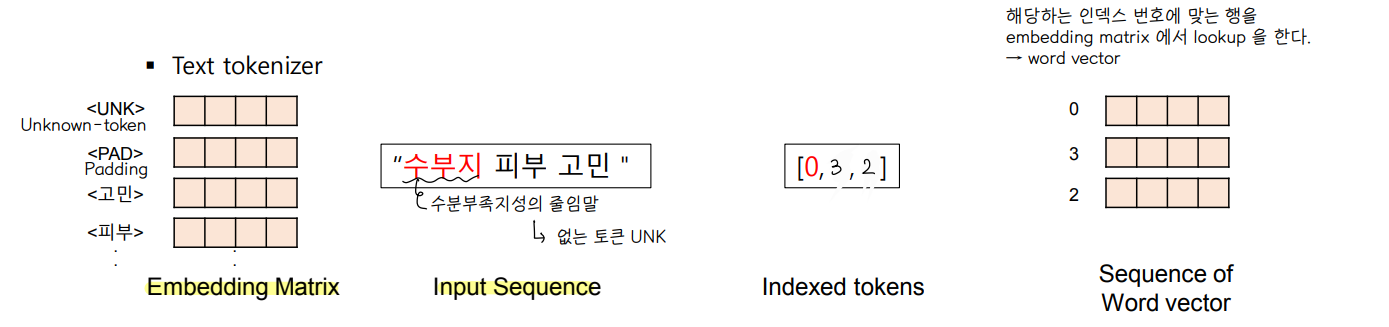

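- With a fixed vocabulary size, any word that is not in the vocabulary is mapped to the UNK token: the OOV (out-of-vocabulary) problem

- In machine translation, items such as numbers and names make the set of words grow without bound: the open-vocabulary problem

- In multi-task learning, the model has to cover even more words for each additional domain.

✔ Advantages of character-based models

💨 Unknown words can be handled: this addresses the OOV problem

💨 Words with similar spellings can get similar embedding vectors

💨 Connected languages, such as those that form long compounds, can also be analyzed

💨 Meaning is extracted from character n-grams: this may not be well motivated grammatically or semantically, but it works well in practice

📌 OOV problem: a problem that comes up frequently in NLP, where an input word cannot be processed because it is missing from the vocabulary (the input side of the embedding table)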
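The contrast between a word-level and a character-level vocabulary can be sketched in a few lines. This is only an illustration: the toy training sentences, the `<unk>` token name, and the helper functions are assumptions, not part of the lecture.

```python
# Toy illustration of the OOV problem (corpus and names are hypothetical).
train_sentences = ["the cat sat", "the dog sat"]

# Word-level vocabulary: every unseen word collapses into <unk>.
word_vocab = {"<unk>": 0}
for sentence in train_sentences:
    for word in sentence.split():
        word_vocab.setdefault(word, len(word_vocab))

# Character-level vocabulary built from the same data.
char_vocab = {"<unk>": 0}
for sentence in train_sentences:
    for ch in sentence:
        char_vocab.setdefault(ch, len(char_vocab))

def encode_words(text):
    return [word_vocab.get(w, word_vocab["<unk>"]) for w in text.split()]

def encode_chars(text):
    return [char_vocab.get(c, char_vocab["<unk>"]) for c in text]

new_text = "the cats sat"
word_ids = encode_words(new_text)  # "cats" was never seen as a word -> id 0
char_ids = encode_chars(new_text)  # every character was seen in training
print(word_ids)
print(0 in char_ids)
```

At the word level "cats" becomes `<unk>`, while at the character level the same input is fully covered because each of its characters occurred in training.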

https://acdongpgm.tistory.com/223

[NLP] How to solve OOV - 1. BPE (Byte Pair Encoding)


2. Pure Character level seq2seq LSTM NMT system

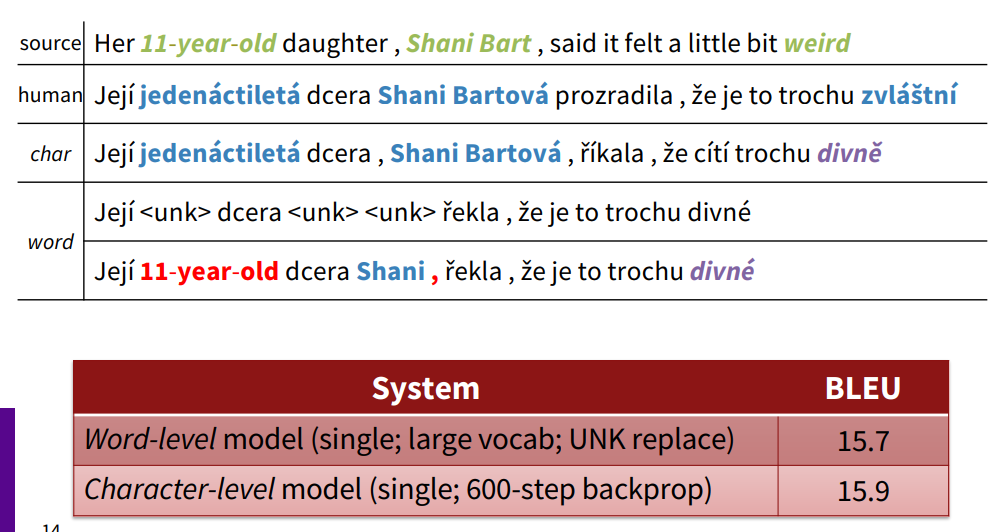

✔ English - Czech WMT (2015)

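◽ Czech is a good language for character-level experiments because of its rich morphology.

◽ Compared with the word-level system, the character-level system handles person names better. The word-level system has the drawback that it simply copies the source text for UNK tokens.

◽ Training the character-level model took three weeks.

✔ Fully Character-Level Neural Machine Translation without Explicit Segmentation (2017)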

Encoder

(1) Embed the whole sentence at the character level.

(2) Apply convolutions with a range of filter sizes to extract features.

(3) Apply max pooling with stride 5. (A word is typically made up of about 3 to 7 characters.)

(4) segmentation embeddings : feature

(5) Highway Network

(6) Bidirectional GRU

The decoder is an ordinary character-level sequence model.
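Step (3) of the encoder is what shortens the character sequence to something closer to word length. A minimal sketch of strided max pooling, assuming per-character feature vectors are given as plain lists and that a shorter final window is simply pooled as-is (both are assumptions made for illustration):

```python
def max_pool_stride(features, width=5, stride=5):
    """Max-pool a sequence of per-character feature vectors.

    features: list of equal-length lists of floats, one per character.
    Returns one pooled vector per window of `width` characters.
    """
    pooled = []
    for start in range(0, len(features), stride):
        window = features[start:start + width]
        # Take the maximum of each feature dimension inside the window.
        pooled.append([max(column) for column in zip(*window)])
    return pooled

# Hypothetical features for a 23-character sentence, 2 dimensions each.
features = [[float(i), float(-i)] for i in range(23)]
segments = max_pool_stride(features)
print(len(features), "->", len(segments))
```

With stride 5, a 23-character input is reduced to 5 segment vectors, which is why the later recurrent layers run over a much shorter sequence.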

📌 Highway network: optimization gets harder as depth increases, so a highway network uses gates to control the flow of information, which keeps a deep model trainable (similar in spirit to ResNet and LSTM)

💨 Transform gate, Carry gate
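The two gates combine as y = T(x) · H(x) + (1 − T(x)) · x, where T is the transform gate and 1 − T acts as the carry gate. The sketch below is a minimal single layer in plain Python; the choice of tanh for H, the helper names, and the toy weights are assumptions for illustration.

```python
import math

def matvec(W, x, b):
    """Compute W @ x + b for small list-based matrices."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def highway(x, W_h, b_h, W_t, b_t):
    # Candidate transformation H(x); tanh is an assumed nonlinearity.
    h = [math.tanh(v) for v in matvec(W_h, x, b_h)]
    # Transform gate T(x) in (0, 1); the carry gate is 1 - T(x).
    t = [1.0 / (1.0 + math.exp(-v)) for v in matvec(W_t, x, b_t)]
    return [ti * hi + (1.0 - ti) * xi for ti, hi, xi in zip(t, h, x)]

x = [0.5, -1.0]
identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [0.0, 0.0]

# Very negative gate bias: the layer carries the input through unchanged.
carried = highway(x, identity, zeros, identity, [-50.0, -50.0])
# Very positive gate bias: the layer outputs the transformed value H(x).
transformed = highway(x, identity, zeros, identity, [50.0, 50.0])
```

When the gate is closed the layer behaves like an identity connection, which is the property that makes it comparable to ResNet skip connections and LSTM gating.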

https://lyusungwon.github.io/studies/2018/06/05/hn/

Highway Network


https://datacrew.tech/resnet-1-2015/

Resnet (1) – 2015 | DataCrew


✔ Character-Based Neural Machine Translation with Capacity and Compression (2018)

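◽ Uses a Bi-LSTM seq2seq model

◽ Comparing the character-level seq2seq model with a BPE-based subword model, the character-based model outperforms BPE on Czech-English translation. However, it requires far more computation.

👀 The character-level model performs somewhat better, but its heavy computation makes it much more expensive in time and cost.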

3️⃣ Sub-word Models

👀 A subword model has the same architecture as a word-level model, but it operates on word pieces, units smaller than a word.

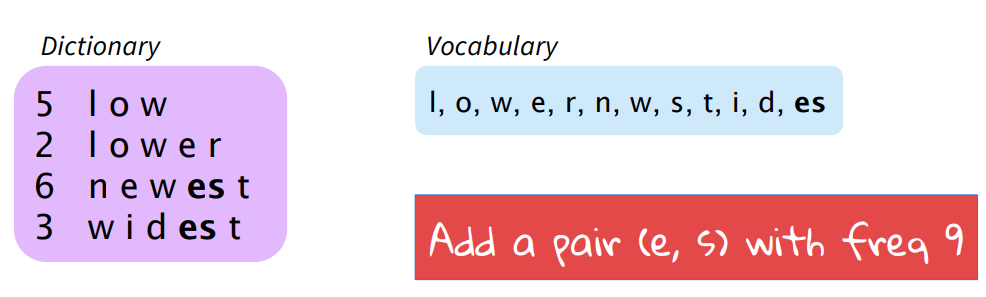

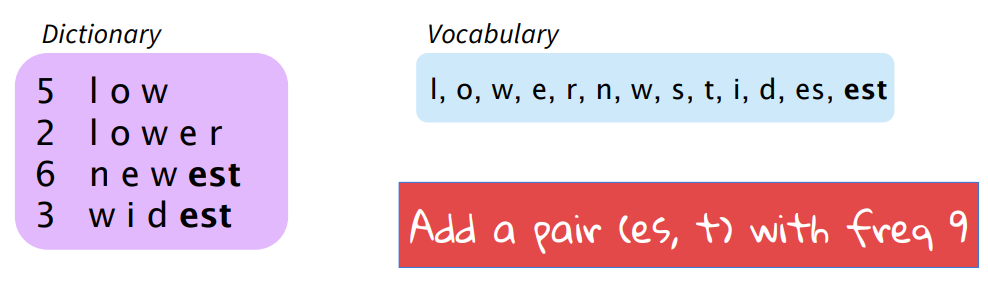

1. BPE

✔ Byte Pair Encoding

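* Paper the idea is based on: https://aclanthology.org/P16-1162.pdf → the most frequent byte pair in a sequence is merged into a new byte, which is added to the symbol set

◽ Similar to a word-level model, but it works on word pieces.

◽ It started out as a compression algorithm and was later adapted into a word segmentation algorithm. The idea itself has nothing to do with deep learning.

◽ Starting from single characters, the most frequent adjacent pair is repeatedly merged into a new unit

◽ Purely data-driven and multi-lingual: it can be applied to any data and is language independent

💨 In the dictionary, the numbers on the left are the occurrence counts of each word

💨 es appears 6 + 3 = 9 times in total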

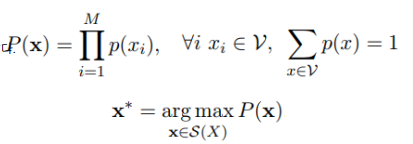
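The merge loop can be sketched as follows. The dictionary below is an assumed toy example (low 5, lower 2, newest 6, widest 3), chosen to be consistent with the count "es = 6 + 3 = 9" above; the end-of-word marker used in the paper is omitted, and ties are broken by first occurrence, which is also an assumption.

```python
from collections import Counter

# Hypothetical toy dictionary: word -> number of occurrences.
word_counts = {"low": 5, "lower": 2, "newest": 6, "widest": 3}

def pair_counts(vocab):
    """Count adjacent symbol pairs, weighted by word frequency."""
    counts = Counter()
    for symbols, freq in vocab.items():
        for a, b in zip(symbols, symbols[1:]):
            counts[(a, b)] += freq
    return counts

def merge_pair(vocab, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in vocab.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] = merged.get(tuple(out), 0) + freq
    return merged

# Start from single characters.
vocab = {tuple(word): freq for word, freq in word_counts.items()}
first_counts = pair_counts(vocab)

merges = []
for _ in range(3):
    counts = pair_counts(vocab)
    best = max(counts, key=counts.get)  # ties: first pair encountered wins
    merges.append(best)
    vocab = merge_pair(vocab, best)

print(first_counts[("e", "s")])
print(merges)
```

On this toy dictionary the first three merges are `e+s`, `es+t`, and `l+o`, so "est" becomes a single unit shared by "newest" and "widest".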

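2. WordPiece model

Tokenization happens inside words

◽ A variant of BPE

◽ Pre-segmentation + BPE: frequent words are added to the vocabulary first, and BPE is then applied to the rest

◽ BPE merges the most frequent pair, whereas the WordPiece model merges the pair that most increases the likelihood of the corpus.

◽ Frequent pieces are kept together as a unit, and infrequent ones are split apart

◽ Subword tokenization of this kind is used in recent deep learning models such as the Transformer, BERT (WordPiece), and GPT-2 (byte-level BPE). ELMo, by contrast, builds its word representations from characters.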
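The difference between the two merge criteria can be made concrete on a toy dictionary. The scoring rule used here, count(ab) / (count(a) · count(b)), is a commonly cited approximation of "the merge that most increases corpus likelihood"; it is an assumption for illustration, not a formula taken from these notes, and the dictionary is hypothetical.

```python
from collections import Counter

# Hypothetical toy dictionary: word -> number of occurrences.
word_counts = {"low": 5, "lower": 2, "newest": 6, "widest": 3}

symbol_freq = Counter()
pair_freq = Counter()
for word, freq in word_counts.items():
    for ch in word:
        symbol_freq[ch] += freq
    for a, b in zip(word, word[1:]):
        pair_freq[(a, b)] += freq

# BPE criterion: the most frequent adjacent pair.
best_bpe = max(pair_freq, key=pair_freq.get)

def likelihood_score(pair):
    """Assumed WordPiece-style score: pair count normalized by part counts."""
    a, b = pair
    return pair_freq[pair] / (symbol_freq[a] * symbol_freq[b])

# WordPiece-style criterion: the pair whose parts rarely occur apart.
best_wordpiece = max(pair_freq, key=likelihood_score)

print(best_bpe, best_wordpiece)
```

BPE picks `("e", "s")` because it is the most frequent pair, while the normalized score prefers `("i", "d")`, whose two symbols never appear without each other in this data.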

3. SentencePiece Model

Works directly on raw text

◽ An unsupervised text tokenizer package released by Google in 2018: https://github.com/google/sentencepiece

GitHub - google/sentencepiece: Unsupervised text tokenizer for Neural Network-based text generation.


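◽ For languages such as Chinese, where it is hard to delimit words, the raw text is split directly, starting from the character level.

◽ It splits sentence-level input into subword tokens without any pre-tokenization step, so it can be used independently of the language.

◽ Unlike the original BPE, it computes a co-occurrence probability for each bigram and adds the one with the highest value.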

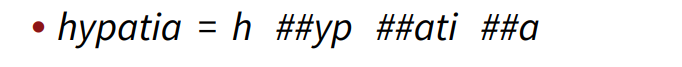
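Working on raw text means whitespace has to be treated as an ordinary symbol. SentencePiece does this by replacing spaces with the meta symbol "▁" (U+2581), so the original text can be restored exactly from the pieces. The sketch below mimics only that idea: a plain character split stands in for the learned model, and the function names are hypothetical, not the library's API.

```python
SPACE_MARK = "\u2581"  # the "▁" meta symbol used to mark whitespace

def to_pieces(text):
    """Stand-in tokenizer: mark spaces, then split into characters."""
    return list(text.replace(" ", SPACE_MARK))

def from_pieces(pieces):
    """Detokenize by concatenating pieces and restoring spaces."""
    return "".join(pieces).replace(SPACE_MARK, " ")

pieces = to_pieces("Hello world")
restored = from_pieces(pieces)
print(pieces)
print(restored)
```

Because the space is kept as a symbol, no language-specific detokenization rules are needed to get the original string back.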

4. BERT

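◽ The vocabulary is large but not enormous, so word pieces are used for part of it.

◽ Relatively frequent words are kept whole, and the rest are built from word pieces

👉 A word that is not in the vocabulary, such as Hypatia, is split into four word pieces.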
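How a word ends up as several pieces can be sketched with greedy longest-match-first tokenization, the strategy commonly associated with WordPiece at inference time. The toy vocabulary below is hypothetical and was chosen so that "hypatia" falls into four pieces; the `##` prefix marks a piece that continues a word.

```python
def wordpiece_tokenize(word, vocab, unk="[UNK]"):
    """Greedy longest-match-first split of one word into vocabulary pieces."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk]  # no piece matches at this position
        pieces.append(piece)
        start = end
    return pieces

# Hypothetical vocabulary, not BERT's real one.
toy_vocab = {"the", "h", "##yp", "##ati", "##a", "##y"}
hypatia_pieces = wordpiece_tokenize("hypatia", toy_vocab)
print(hypatia_pieces)
```

A word that is in the vocabulary, such as "the", comes back as a single piece, which matches the idea that frequent words are kept whole.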

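👀 How are subword representations turned back into word-level representations?

→ Average the embedded piece vectors to form a word vector

→ Or feed them through CNN or RNN layers, which learn higher-level representations on top of the pieces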
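The averaging option is simple enough to show directly. The piece names and the 2-dimensional vectors are made-up values for illustration only.

```python
# Hypothetical piece embeddings (2 dimensions for readability).
piece_vectors = {
    "h": [1.0, 0.0],
    "##yp": [0.0, 1.0],
    "##ati": [1.0, 1.0],
    "##a": [2.0, 2.0],
}

def average_pieces(pieces):
    """Average the piece vectors dimension by dimension."""
    vectors = [piece_vectors[p] for p in pieces]
    return [sum(column) / len(vectors) for column in zip(*vectors)]

word_vec = average_pieces(["h", "##yp", "##ati", "##a"])
print(word_vec)
```

Averaging ignores the order of the pieces, which is one reason a CNN or RNN over the pieces can give a richer word-level representation.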

4️⃣ Hybrid Models

👀 Process text at the word level by default, and handle proper nouns and out-of-vocabulary words at the character level.

1. Character-level POS

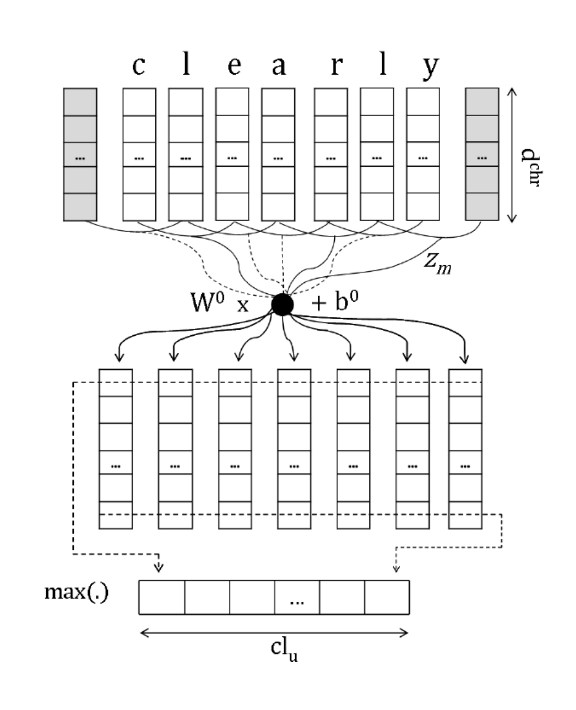

◽ A convolution over characters produces word embeddings, which are then used at a higher level for POS tagging

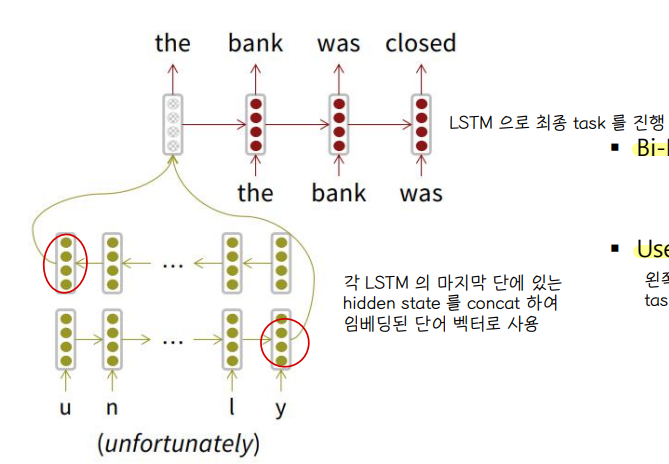

2. Character-based LSTM (2015)

◽ A study that carried out NMT (neural machine translation) at the character level

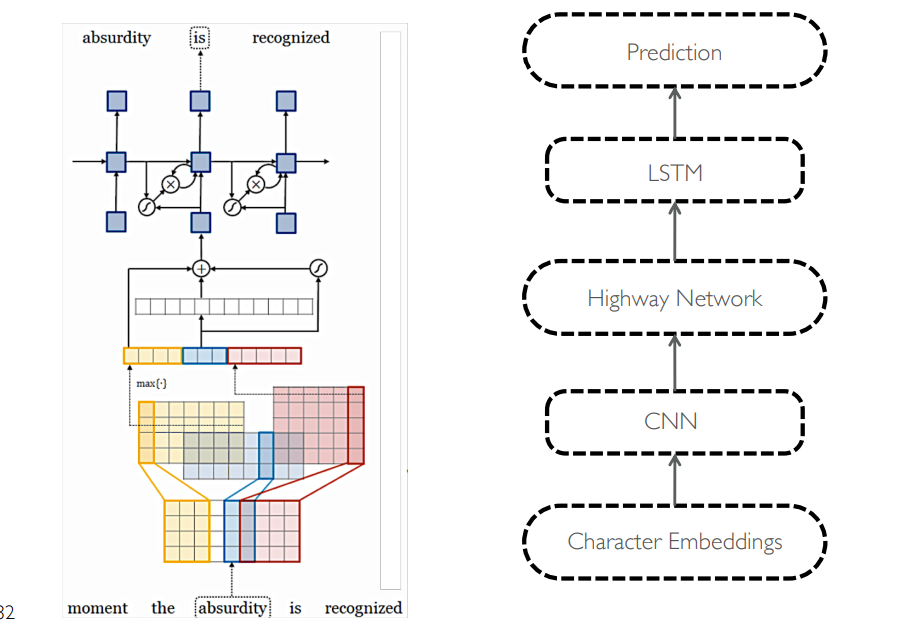

3. Character-Aware Neural Language Models (2015)

◽ Models the relatedness of subwords: useful when words are semantically related through a shared subword, as in eventful ~ eventfully ~ uneventful.

◽ Split into characters → conv layer with various filter sizes (feature representation) → max pooling (picks which n-grams best represent the word) → highway network → word-level LSTM

◽ Achieves comparable performance with far fewer parameters.

◽ Before the highway layers, the nearest neighbors of a person's name are words with similar spelling rather than similar meaning; after the highway network, the nearest neighbors are other people's names 👉 the model learns representations that reflect semantics
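The first two stages of this pipeline (character embeddings → convolution with several filter widths → max-over-time pooling) can be sketched without any deep learning library. Everything numeric here is an assumption: the weights are random rather than learned, there is one filter per width instead of many, and the highway and LSTM stages are left out.

```python
import math
import random

random.seed(0)
EMB_DIM = 4
# Random (untrained) character embeddings, for illustration only.
char_emb = {c: [random.uniform(-1, 1) for _ in range(EMB_DIM)]
            for c in "abcdefghijklmnopqrstuvwxyz"}
# One random filter for each width 2, 3 and 4.
filters = [[[random.uniform(-1, 1) for _ in range(EMB_DIM)] for _ in range(width)]
           for width in (2, 3, 4)]

def conv_max(word, filt):
    """Slide one filter over the characters and keep the strongest response."""
    width = len(filt)
    embs = [char_emb[c] for c in word]
    while len(embs) < width:  # zero-pad words shorter than the filter
        embs.append([0.0] * EMB_DIM)
    responses = []
    for start in range(len(embs) - width + 1):
        window = embs[start:start + width]
        total = sum(w * x for f_row, e_row in zip(filt, window)
                    for w, x in zip(f_row, e_row))
        responses.append(math.tanh(total))
    return max(responses)  # max-over-time pooling

def word_vector(word):
    """One feature per filter: the best-matching n-gram inside the word."""
    return [conv_max(word, f) for f in filters]

v_short = word_vector("eventful")
v_long = word_vector("uneventful")
print(v_short)
print(v_long)
```

Because every character n-gram of "eventful" also occurs in "uneventful", each pooled feature of the longer word is at least as large as that of the shorter one, which illustrates how shared subwords tie related words together.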

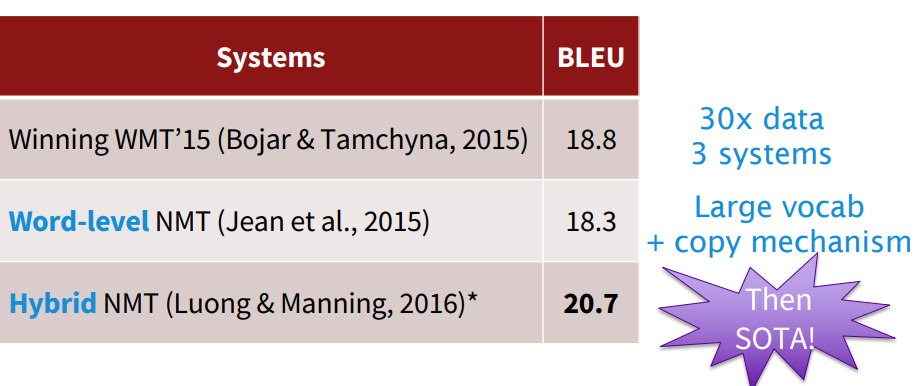

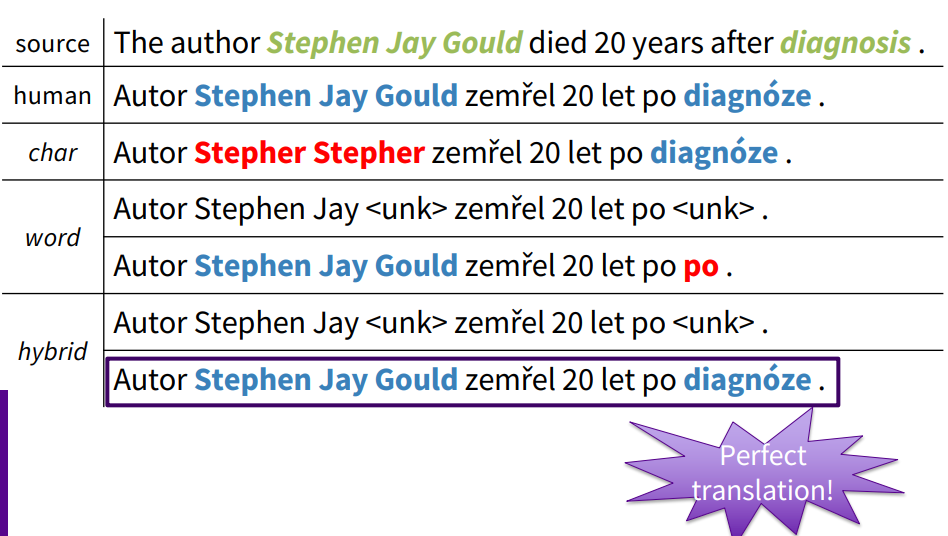

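3. Hybrid NMT

◽ Works mostly at the word level and drops to the character level only when needed.

◽ The backbone is a seq2seq architecture.

◽ It also achieved the best performance.

◽ The char-based translation mistranslated a name

◽ The word-based translation lost the word diagnosis, so it repeated the word that appeared just before po, and it simply copied UNK tokens from the source

◽ The hybrid output was also the best translation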
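The "word level first, character level only when needed" routing can be sketched on the input side. This only shows the dispatch decision, not the neural model; the vocabulary, the tags, and the function name are hypothetical.

```python
# Hypothetical word vocabulary for the word-level model.
word_vocab = {"<unk>": 0, "the": 1, "doctor": 2, "made": 3, "a": 4}

def hybrid_encode(tokens):
    """Route known words to word ids and unknown words to their characters."""
    encoded = []
    for tok in tokens:
        if tok in word_vocab:
            encoded.append(("word", word_vocab[tok]))
        else:
            # A character-level model would build the representation here.
            encoded.append(("chars", list(tok)))
    return encoded

routed = hybrid_encode(["the", "doctor", "hypatia"])
print(routed)
```

Only the rare token pays the cost of character-level processing, which is why the hybrid approach stays close to word-level speed.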

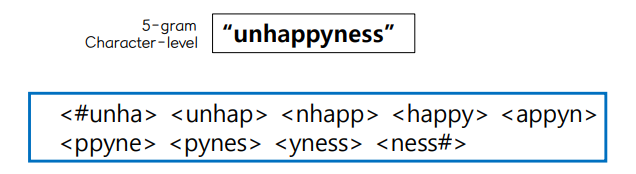

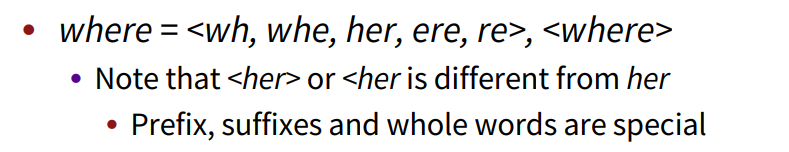

4. FastText embedding

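◽ A next-generation word vector learning library that follows word2vec. It treats each word as being composed of several subwords (character n-grams) when learning.

◽ Performs better on morphologically rich languages and on rare words

◽ Both the character n-grams of a word and the word itself are used in training.

👉 Even for an unseen word, similarity to other words can be computed from its subwords, and a rare word can get an embedding by sharing n-grams with other words.

ex. 어쩔티비 (recent slang) → 어쩌라고 ("so what?")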
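The n-gram decomposition can be sketched as below. The `<` and `>` boundary markers and the 3 to 6 default range follow the usual description of FastText; the function name is hypothetical, and the shared-n-gram set is only a stand-in for the learned n-gram vectors that the real library sums.

```python
def char_ngrams(word, n_min=3, n_max=6):
    """Character n-grams of a word wrapped in boundary markers."""
    token = "<" + word + ">"
    grams = []
    for n in range(n_min, n_max + 1):
        for i in range(len(token) - n + 1):
            grams.append(token[i:i + n])
    return grams

where_trigrams = char_ngrams("where", 3, 3)

# Two related words share most of their trigrams.
shared = set(char_ngrams("eventful", 3, 3)) & set(char_ngrams("uneventful", 3, 3))

print(where_trigrams)
print(sorted(shared))
```

An unseen word still produces n-grams that overlap with known words, so it can be given a vector even though the word itself never appeared in training.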

📌 Hands-on links

1. 06) FastText (wikidocs.net)

2. 01) Byte Pair Encoding (BPE) (wikidocs.net)

3. 02) SentencePiece (wikidocs.net)

4. https://wikidocs.net/86792 : IMDB hands-on with 03) SubwordTextEncoder, a subword tokenizer available through TensorFlow that uses the WordPiece model, an algorithm similar to BPE

5. https://wikidocs.net/99893 : 04) Hugging Face Tokenizer. The tokenizers package developed by the NLP startup Hugging Face provides a range of subword tokenizers that treat frequent subwords as single tokens